In the previous sections, we explained the motivation behind choosing the concept of ‘troubling times’ as the observed object for this research. We also explained how new knowledge could be produced by projecting ‘light’ onto the object through deep learning and that this knowledge might, in some cases, be intuitive rather than factual. We touched on the bias issue that naturally occurs when creating a training dataset. In this section, we will focus on the remaining ethical concerns and technological issues involved in gathering large amounts of photographic data, as well as describe the process of training the TroublingGAN model.

For the training dataset, we chose news photography from 2020. News photography offers a selection of high-quality images that often focus on events we could call ‘troubling’. We decided against handpicking and curating the images ourselves, as this would have been tremendously time-consuming and could have introduced researcher bias. Instead, we scraped selected websites automatically to source large amounts of news photography, which avoided projecting our own preferences onto the selection. In this way, we re-enacted the process by which many training datasets currently in use were collected: these are usually image databases that are not ideal for training but are good enough to continue the experiment. It might be tempting to work with highly curated datasets when using our own trained StyleGAN in an art project, but the decision to stay with the trouble (Haraway 2016) opens up the possibility of looking at the biased dataset issue from another angle.

The scraping needed to follow some logic, and the code had to define the URL of each web page from which images would be scraped. This again implied handpicking the websites. To avoid that, we looked for a source whose URLs could be generated programmatically. After analysing various news websites and agencies, we settled on Reuters. The Reuters website has a ‘Photos of the week’ section, which contains the most impressive images from the news in high aesthetic quality. Each gallery has a stable URL, starting with ‘https://www.reuters.com/news/picture/photos-of-the-week’ and followed by an ID that makes it easy to collect the galleries by date. This way, we were able to generate a list of URLs for the whole year and supply it to the scraper code. A scraper automatically opens a web page and extracts data from it. Ours saved all images from the opened pages and subsequently created a tabular summary of the collected data. This means that we were able to collect valuable information for every image: its src, title, caption, week number, precise date, and the URL of the photo gallery in which it featured. This process gave us more than a thousand images, which is not many (compared with the ten thousand images StyleGAN officially requires), but it was enough to train a decently working StyleGAN model. No further selection was applied except for the removal of duplicate images. Hence, the dataset genuinely represents Reuters’ photos of the week throughout 2020.
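
A minimal Python sketch of the parsing, de-duplication, and tabular-summary steps described above might look as follows. It is illustrative only and is not the scraper used in the project. Downloading is left out, so the page HTML and the image bytes are passed in directly; the function names, the record fields, and the use of the `alt` attribute as a caption are all assumptions, and the format of the gallery ID is not reproduced here because the text does not specify it.

```python
import csv
import hashlib
import io
from html.parser import HTMLParser


class ImageCollector(HTMLParser):
    """Collects the src and alt text of every <img> tag on a page."""

    def __init__(self):
        super().__init__()
        self.images = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if attributes.get("src"):
                # Assumption: the alt text stands in for the caption.
                self.images.append(
                    {"src": attributes["src"], "caption": attributes.get("alt") or ""}
                )


def collect_images(html, gallery_url, week):
    """Return one record per image found in a gallery page."""
    parser = ImageCollector()
    parser.feed(html)
    return [dict(img, gallery_url=gallery_url, week=week) for img in parser.images]


def drop_duplicates(records, image_bytes):
    """Keep the first record for each distinct image, judged by content hash."""
    seen, unique = set(), []
    for record in records:
        digest = hashlib.sha256(image_bytes[record["src"]]).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(record)
    return unique


def to_csv(records):
    """Serialise the records as a tabular summary in CSV form."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["week", "gallery_url", "src", "caption"]
    )
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()
```

Hashing the image content rather than comparing file names is one simple way to catch a photograph that appears in more than one weekly gallery; a perceptual hash would additionally catch re-encoded copies, but that goes beyond this sketch.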

The scraped images range from photos of natural disasters and social unrest to political crises and the ongoing pandemic. Most of these images depict troubling events and situations. There were some neutral images, too, such as a detail of a bird or a photo of a full moon, but not many (approx. one per 300 images). We decided to keep these neutral images in the dataset so that it could be analysed as a whole. It must be noted that the dataset is not comprehensive, and many ‘unseen’ societal problems were excluded by this method. This points to the significance of equal visual representation for all underprivileged groups of people and for issues that are hard to visualise. We therefore admit that our dataset is not objective, which points again to the troubling nature of image datasets.

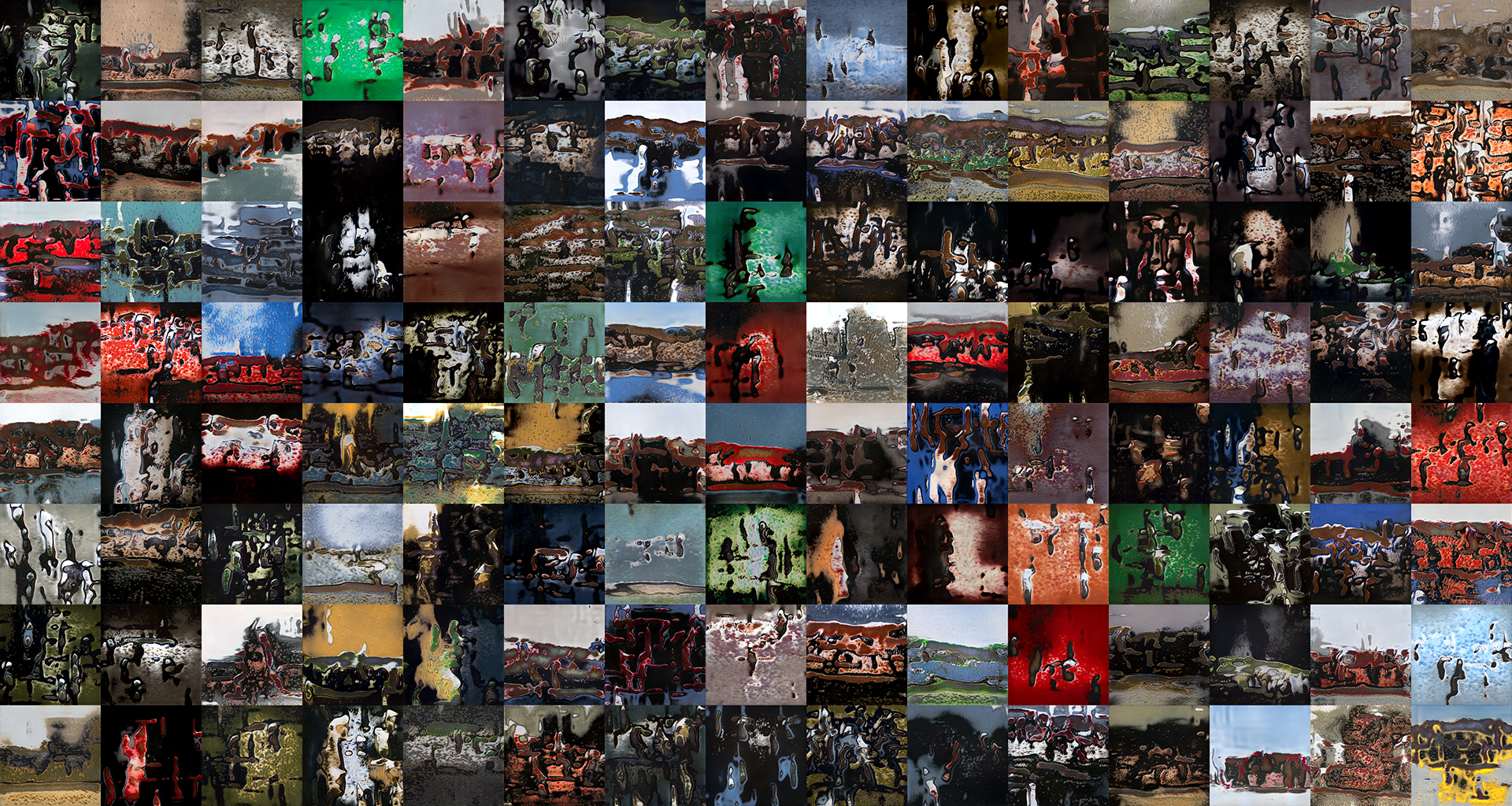

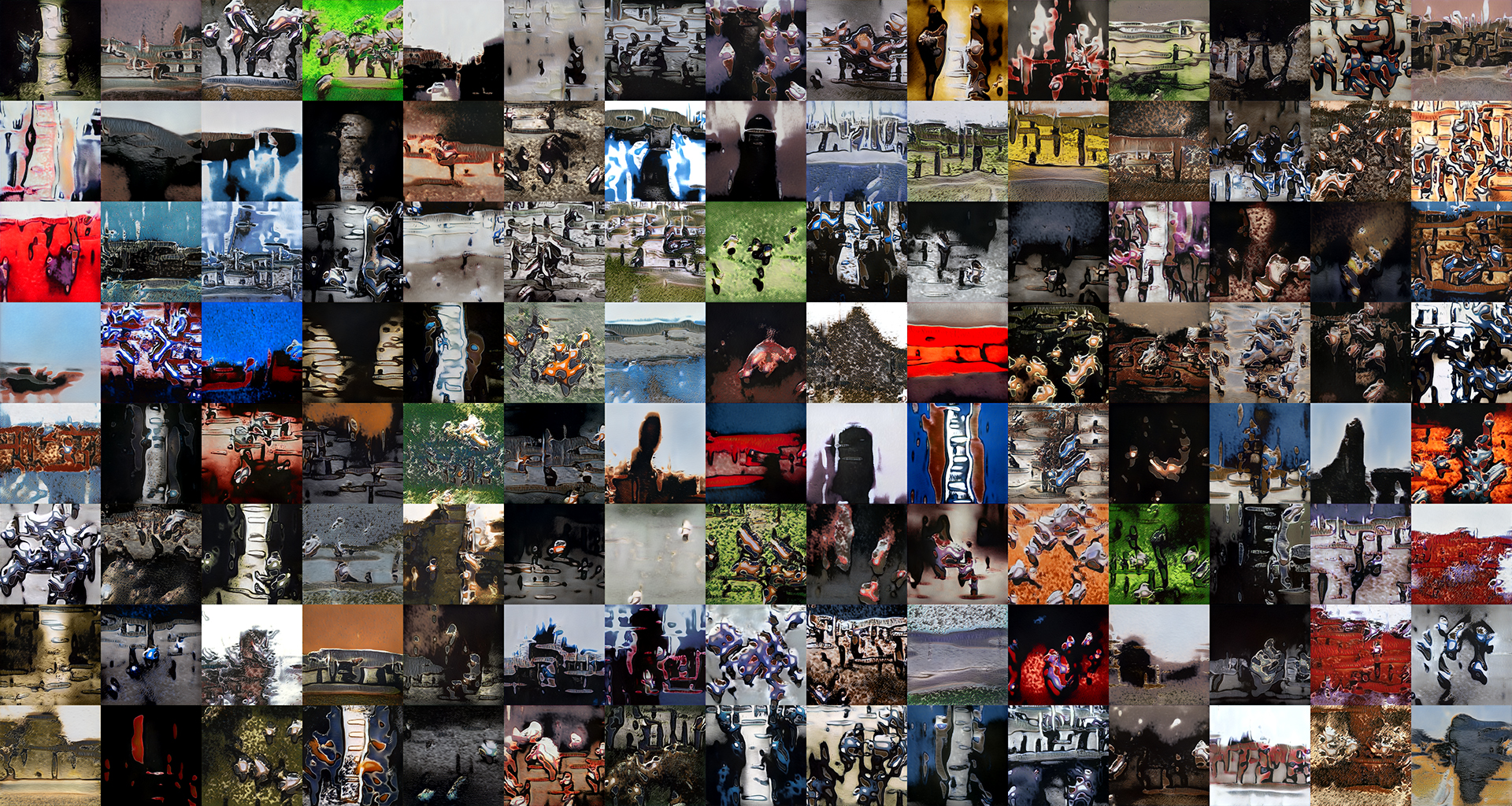

Visually, this dataset is a combination of very different scenes, from portraits to landscapes, dynamic photographs vs static still-lifes, photographs with natural light vs night photography, etc. Thus, the visual content is very diverse and does not comply with the requirements of a proper training dataset for a generative neural network. However, grounding the training on such a collection of images triggers curiosity about possible outcomes. Can a generative neural network detect similarities in such varying imagery and learn to generate new outputs based on what it sees? Is it able to grasp the essence of the ‘troubling times’ in these photographs and translate their affective quality into completely novel images?

There exist StyleGAN models that have been trained on heterogeneous datasets; for instance, the StyleGAN trained on examples of abstract art (Gonsalves 2021). But in such a case, the original inputs were already abstract compared with our experiment, where the inputs are photographs depicting specific scenes and objects. It could be expected that the generated outcomes of our StyleGAN model would be visually ambiguous, although we couldn’t say in advance to what extent and which details would be preserved.

For training, we used NVIDIA’s official implementation of StyleGAN2 (Karras and others 2020a), the improved successor to the architecture introduced in ‘A Style-Based Generator Architecture for Generative Adversarial Networks’. This improved version of StyleGAN was state-of-the-art in generating synthetic imagery at the time we did the experiment. Since then, a further improved version, StyleGAN3 (Karras and others 2021), has been released. However, most of its improvements appear to concern the removal of artefacts from the generated images, so they are not very important for our project.

Our working setup included the Quadro M6000 graphics card from NVIDIA containing 12GB of GPU memory. Since training in full resolution of 1024x1024 pixels requires around 14GB of GPU memory, we opted for a resolution of 512x512 pixels. This setting not only affords enough usable detail in the resulting imagery while decreasing the amount of required memory to around 10GB, but also cuts the time required for training in half.

This time saving was very important when training on a ‘consumer’ tier graphics card, because it took us a full 14 days (more precisely, 343 hours) to reach one million iterations. In comparison, NVIDIA papers work with 25 million iterations trained on several (usually 8) graphics cards from the ‘professional’ tier, each of which is around six times more expensive than our dated card. Since our goal was to achieve abstract imagery rather than photorealistic quality, even half a million iterations would have been sufficient.
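
To give a sense of scale, the measured rate can be extrapolated with a few lines of Python. This is a rough sketch that assumes training time grows linearly with the number of iterations on a single card, which is an assumption rather than something we measured; the function names are hypothetical.

```python
HOURS_PER_MILLION = 343  # measured above: one million iterations on our card


def training_hours(iterations, hours_per_million=HOURS_PER_MILLION):
    """Linearly extrapolate training time from the measured rate (assumption)."""
    return iterations / 1_000_000 * hours_per_million


def as_days(hours):
    """Convert hours to days."""
    return hours / 24


for iterations in (500_000, 1_000_000, 25_000_000):
    hours = training_hours(iterations)
    print(f"{iterations:>10,} iterations: {hours:,.1f} h ({as_days(hours):.1f} days)")
```

Under this assumption, the 25 million iterations mentioned above would take 8,575 hours on our single card, which is close to a full year of uninterrupted training.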

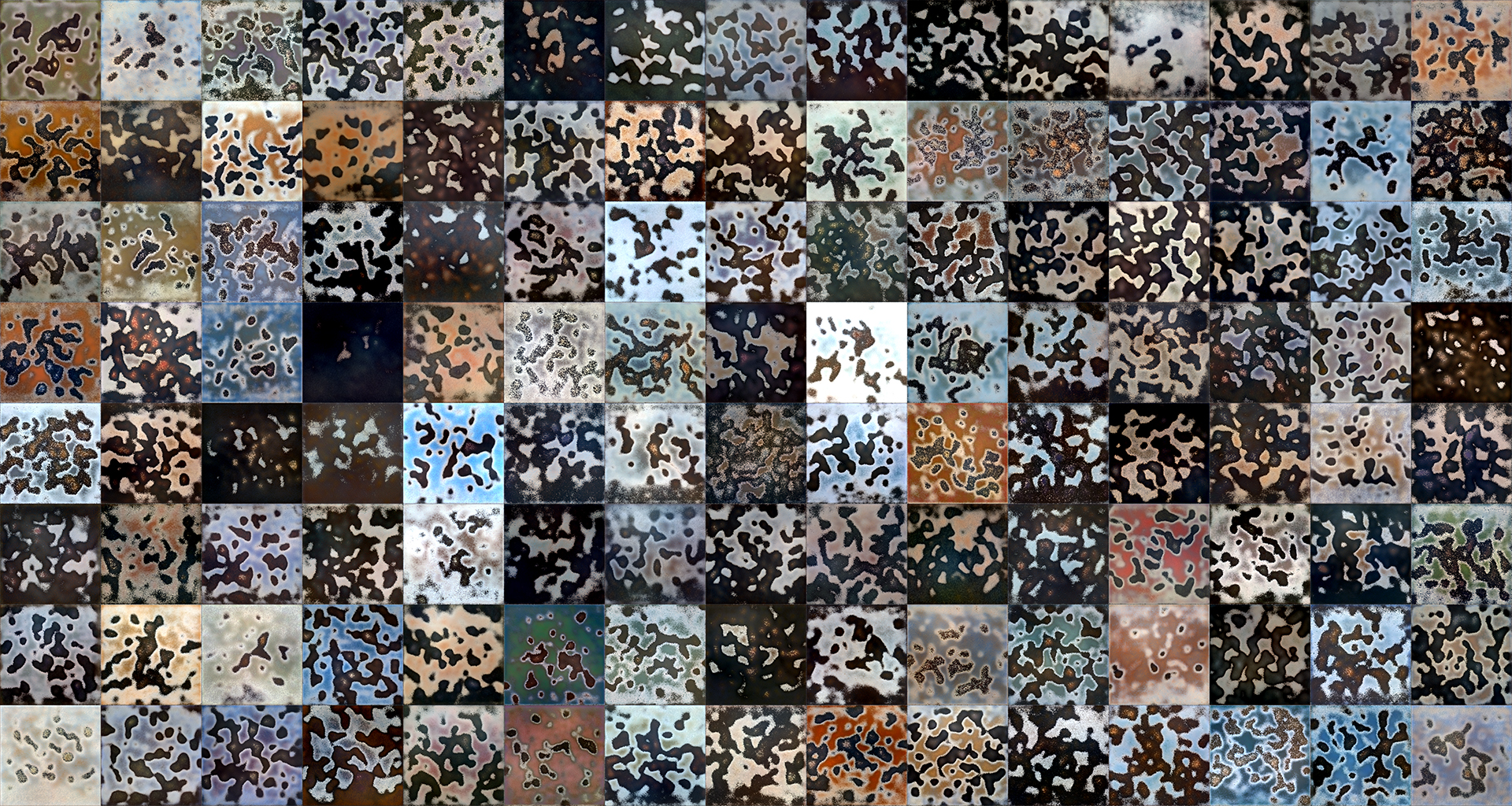

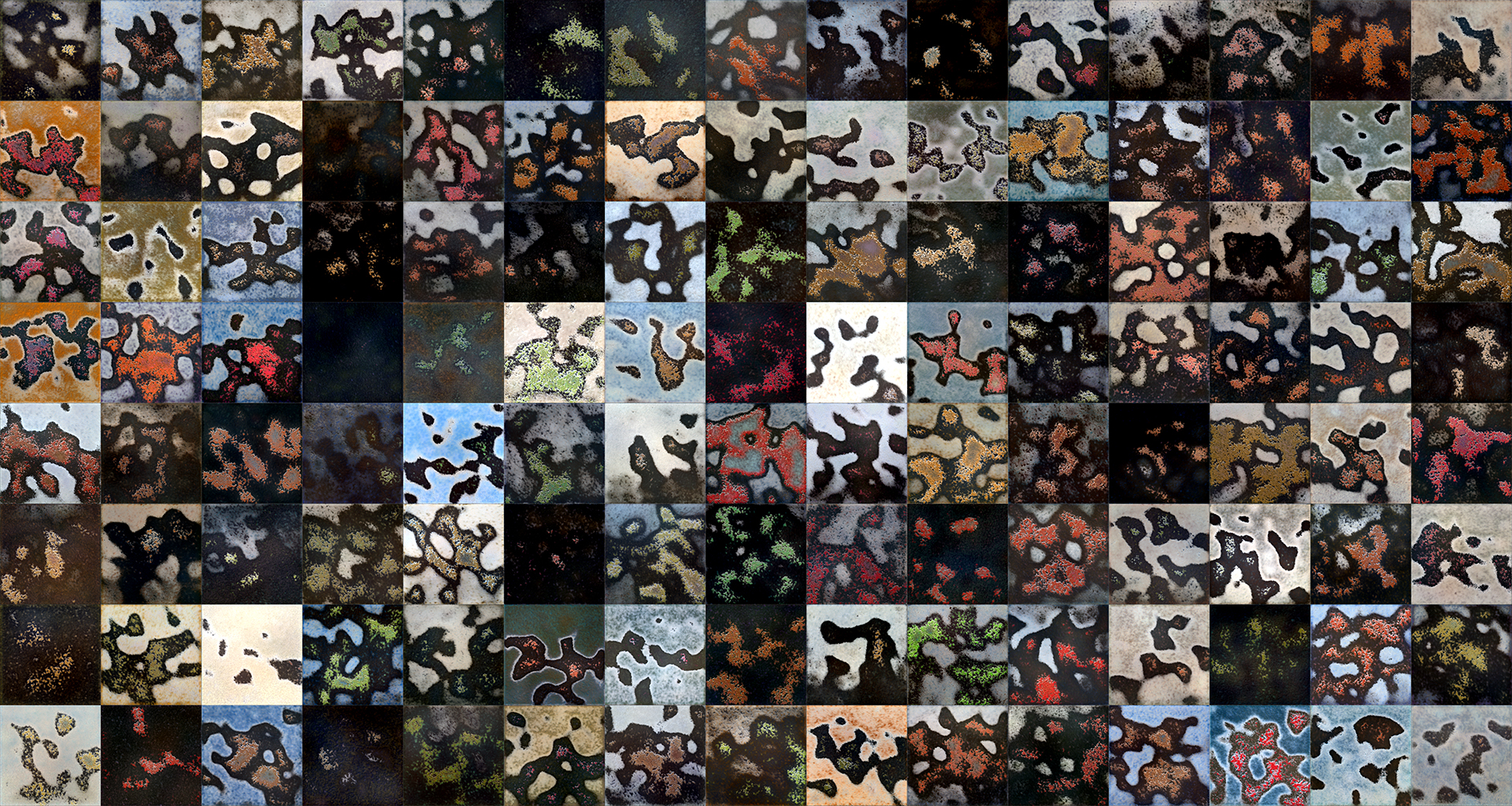

Luckily, there is a method called Adaptive Discriminator Augmentation (ADA) (Karras and others 2020b) that can be used in combination with StyleGAN2. It augments the input dataset with arbitrary flips and rotations, producing much better results from fewer images. The method is not suitable for homogeneous datasets (e.g., aligned photos of faces) where homogeneous results are expected, but since we were working with a rather random dataset and were expecting abstract visuals, it was ideal. In 206 hours (almost 9 days), we trained for 0.6 million iterations using this method and were happy to see that this approach provided satisfactory results.
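
The flip-and-rotate idea can be illustrated with a small pure-Python sketch that treats an image as a list of pixel rows. This is not NVIDIA’s implementation: ADA applies a wider set of augmentations to the images shown to the discriminator and adjusts the probability `p` during training, whereas the sketch below keeps `p` fixed and covers only horizontal flips and 90-degree rotations. All function names are hypothetical.

```python
import random


def flip_horizontal(image):
    """Mirror each row of a 2D image (a list of rows)."""
    return [row[::-1] for row in image]


def rotate_90(image):
    """Rotate a 2D image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image, p, rng):
    """Apply a flip and a random 90-degree rotation, each with probability p.

    p is fixed here; adapting it during training is outside this sketch.
    """
    if rng.random() < p:
        image = flip_horizontal(image)
    if rng.random() < p:
        for _ in range(rng.randint(1, 3)):  # 90, 180 or 270 degrees
            image = rotate_90(image)
    return image


rng = random.Random(0)  # seeded only so that runs are repeatable
sample = [[1, 2], [3, 4]]
augmented = [augment(sample, p=0.5, rng=rng) for _ in range(4)]
```

Because each call may return a differently oriented copy, a small dataset yields many more distinct training views, which is the intuition behind training on fewer images.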

There is an alternative to owning a graphics card for training a model. Many cloud providers, such as Google, Amazon, Azure, or Lambda Labs, rent state-of-the-art GPUs by the hour. For example, the hourly rate for an NVIDIA V100 graphics card is currently $1.50. Since the V100 GPU can process one million iterations at a resolution of 512x512 pixels in around 21 hours, the entire training would cost about $32. Training one million iterations at full resolution (1024x1024 pixels) would take around 44 hours and cost $66. At first sight, using the cloud to train on your own dataset seems a no-brainer, but the capacity of cloud providers is often maxed out, and we were unable to get any GPUs assigned to us (as they were already in use by other customers). The situation is most probably caused by the ongoing chip crisis and will hopefully improve within the upcoming year.
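
The cost figures above follow from multiplying the training time by the hourly rate, as the short Python sketch below shows. The rate and durations are the ones quoted in the text; the function name is hypothetical, and real invoices may include storage or data-transfer charges that are not modelled here.

```python
V100_HOURLY_RATE = 1.50  # USD per hour, as quoted above


def cloud_cost(hours, hourly_rate=V100_HOURLY_RATE):
    """Rental cost in USD for a given number of GPU hours."""
    return hours * hourly_rate


cost_512 = cloud_cost(21)   # one million iterations at 512x512
cost_1024 = cloud_cost(44)  # one million iterations at 1024x1024
print(f"512x512:   ${cost_512:.2f}")
print(f"1024x1024: ${cost_1024:.2f}")
```

This gives $31.50 for the 512x512 run, which rounds to the $32 mentioned above, and $66 for the full-resolution run.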