The outcome of the full realm of our experimentation is a customised StyleGAN model we call TroublingGAN. Like any other StyleGAN, it is a statistical model, and it represents the results of the analysed input training data. Through the deep learning algorithm that serves as the instrument of observation, the visuals in the dataset were analysed, deconstructed using the neural networks’ own logic, and translated into numeric form, arranged by defined vectors for specific visual features. From this space, novel combinations of visual features and their values were synthesised into new images. We could control this synthesis to some extent, for example, by intensifying the visual ambiguity. It is important to understand such a model is a tool for future use, not a final outcome.

In image synthesis such as this, it is important to preserve novelty because there is a delicate balance between the well-trained and overtrained stage of a model. We didn’t want the model to generate images carrying specific features of those in the dataset (overtrained) or visuals that were too abstract (as is typical in the early stages of training). Part of the process is knowing when the model is ready and, therefore, when to stop the training. To make this decision, we referred to the Frechet Inception Distance [FID] metric (Heusel and others 2018) — when the learning curve flattens, and there is no further improvement; otherwise, an autonomous artistic decision can be made, similar to deciding when a work of art is finished.

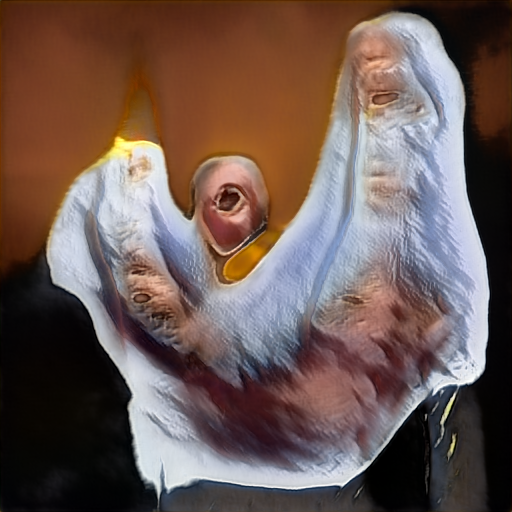

The generated outputs were, as expected, semi-abstract and exhibited the smudginess typical of neural aesthetics; this is due to the highly varied nature of the scenes in the training data. Despite that, we consider the current form of TroublingGAN finished, as the elements from the dataset images are not recognisable in the newly generated outputs, and yet the outcomes are not entirely abstract.

TroublingGAN outputs combine concrete and abstract elements, making the imagery visually indeterminate (Hertzmann 2020) and possessing typical GAN aesthetics — various blobs, smudges, and blurs contrast with areas of sharpness. The formal components in these images are caused by the technology used to make them: neural networks that synthesise the imagery and the training dataset. These two factors determine the aesthetics as well as the content. Visual ambiguity is determined by the dataset’s heterogeneity and its size. Furthermore, the dataset influences the tonality and the overall atmosphere.

The composition of the generated visuals is random. The generation process is combining randomly chosen values from vectors defining different image features like a roulette unless we choose to define the specific values beforehand. The images analysed in this paper are all coincidental, offering only a partial view of the model. We cannot say that by analysing a handful of images generated from the TroublingGAN model, we have obtained a representative sample. Does it make sense to analyse the images without analysing the model? Should we do the analysis, or should we use one of the computer vision tools? The more we think about it, the more uncertainty arises from trying to analyse the results. It’s a moment of losing control of one’s creation — what began as a human intention turns feral, leaving us standing outside its realm, just letting us peer through small holes in the fence. The following analysis, therefore, is only a glimpse; us humans are trying to approach the unknown.

Upon viewing at least fifty of the generated images, certain recurring elements begin to emerge. Some visuals contain elements that resemble human figures. Interestingly, these figures have a dark hole where the eyes and mouth would be, which acts as a disturbing element, invoking the paintings of Francis Bacon. This distinctive characteristic is likely due to the model’s exposure to human faces from multiple perspectives, ultimately resulting in a synthesised and homogenised ‘hole’.

The figures are often composed somewhat baroquely, but this is purely coincidental. To some extent, it could be explained through the dynamic compositions of the scenes from the dataset, which include many chaotic events involving many people. Despite the resulting coincidental composition, it is still possible to recognise its link with the dataset material, although not a specific connection. No one is directing the composition of the image or how it is viewed, or its effect. Yet, in looking at these generated visuals, we can learn something about the observed object — images from the dataset.

The colour palette is dim, with mixed shades of grey, brown, and blue, and less frequently, white and orange. These are directly influenced by the dataset: the whites and blues are derived from the recurring medical scenes, while the orange hues are remnants of fires and explosions.

The outcomes carry a combination of different textures. A recurrent Mondrian-like grid appears, as well as small patches of repeating detailed patterns resembling Photoshop stamp tool errors. There is a lot of fluidity: haunting blobs morph into violent smudges that morph into imperfect straight lines with remarkable brush-stroke quality. The close-ups of face-like blemishes and one-eyed figures are collaged onto abandoned landscapes or packed into claustrophobic dark interiors. Somehow, every generated piece exhibits the same sombre atmosphere, as if the various catastrophes and troubling moments in the dataset merged into one gloomy average that operates as a visual filter deforming the generated image.

The compelling visual ambiguity of TroublingGAN creates a fascinating tension between abstraction and representation. Analysing TroublingGAN as a generative model, it appears to have emancipated itself from the traditional constraints of image-making. In light of W.J.T. Mitchell’s concept of an image’s desire (2006), this could be perceived as the algorithm’s objective function or the intended outcome during the generative process. The generator within any StyleGAN model employs varying scales of neural connections to synthesise new images based on its understanding of the dataset. Its aim is to create samples that the discriminator cannot distinguish from the original data. In essence, the generator’s desire is to satisfy the discriminator. Yet, in the case of TroublingGAN, this desire remains perpetually unfulfilled.

Confronted with a heterogeneous dataset, TroublingGAN’s generator grapples with inconsistency. Despite varying scales, the discriminator continuously deems the samples as unconvincing. We terminated the training process in accordance with the FID metric (as discussed in section 5.1 of this paper), indicating that the generator’s attempts to satisfy the discriminator had ceased to improve over a significant period.

Hence, what are we observing? Could the images produced by TroublingGAN be interpreted as manifestations of an unfulfilled desire to replicate the quality of images within the dataset and, therefore, a failure? Or should we regard them in a contrasting light, as a triumph over the encoded need to satisfy?

Perhaps the neural network has genuinely transcended the task of image-making, opting instead for abstraction as a more fitting visual language to average the features within such a diverse dataset. Through this abstraction, viewers may engage with the images on a more emotive and intuitive level.

Arguably, the images produced by TroublingGAN may not result from deliberate abstraction but might instead emerge as the by-product of a lengthy and arduous process of degradation. They constitute a new category of ‘poor images’. Hito Steyerl defines the term in her essay In Defense of the Poor Image (2009), centring her discourse around images that undergo continual copying and degradation due to various digital processes online. However, the training of a StyleGAN model resembles this deconstructive process. For images to be generated, they must first be degenerated.

Much like ‘poor images’, the visually ambiguous outputs of TroublingGAN serve as echoes of their ‘former visual selves’. They lean ‘towards abstraction, a visual idea in its very becoming’ (Steyerl 2009). The nebulous something-ness we addressed in section 3 of this paper is essentially the spectral presence of these former visual selves. It signifies the average of the image collection — a shared visual idea of the dataset veiled behind the tangible details of each image.

To be able to perceive this something-ness, the semantic meaning must be erased from the image. We can intuitively project a meaning onto the images and guess what the ambiguous elements mean, but the imposed meanings are dynamic and constantly shifting. TroublingGAN images invite the viewer to engage in an interpretative process where meaning is not fixed but fluid and subjective. This fluidity highlights the ever-present stable atmosphere and emotional charge of all generated images.

Visual synthetic media, whether photorealistic or visually ambiguous, form a completely new category of visual material. They bring a great deal of spectacularity to the viewer. The existence of generative models (such as TroublingGAN) creates a feeling of indefiniteness in the synthetic visuals, as they are just one of many possible outputs that the model can produce. There is a certain thrill in anticipating what the neural network will generate the next time. Many generative models turn into a dopamine-releasing addiction, like pulling a casino slot machine handle and waiting for a new combination of symbols to appear. In her book AI Art, media theorist and artist Joanna Zylinska criticises GAN outputs, saying that people treat GANs as art, like a

[...] glorified version of Candy Crush that seductively maims our bodies and brains into submission and acquiescence. Art that draws on deep learning and big datasets to get computers to do something supposedly interesting with images, often ends up offering a mere psychedelic sea of squiggles, giggles and not very much in-between. It really is art as spectacle.(Zylinska 2020: 76)

Her critique suggests that GAN visuals ultimately have a ‘pacifying effect’, potentially leading to what philosopher Franco Berardi calls ‘neurototalitarianism’ (2017: 20). We question whether this effect is caused by the novelty of synthetic media and could therefore decrease over time, as the human eye becomes more used to it. More research needs to be done to fully understand the affective value of synthetic AI-generated visuals. Until then, we consider it important to engage critically and meaningfully with GAN models while remaining aware of the potential psychological effects the images can have.

Rather than concentrating solely on the generated outcomes, it may be more instructive to perceive TroublingGAN as a tool functioning on two distinct levels. Initially, the training process of TroublingGAN facilitated the generation of a new kind of understanding regarding the image dataset. Upon completion, TroublingGAN transformed into a tool for future applications, available as an open-source pre-trained model via HuggingFace — a popular AI open-source community website. This site hosts community-trained AI models and provides a user-friendly web interface for demonstrations. Users can generate images from TroublinGAN and manipulate the ‘sigma’ value. This parameter determines the extent of deviation from the envisaged central point during the generation process.

As we have noted, comprehending the entirety of the TroublingGAN model is beyond our capabilities. This unattainability must be acknowledged as we adopt a fresh perspective on AI-generated images. Although these images can exist independently in our world, their true existence lies within the model’s latent space, sharing this domain with their parallel selves. Looking at a single generated sample from this latent space only heightens the anxiety of the unknown, inciting curiosity about the appearance of the remaining images. Yet, contextualising these images as merely a collection also falls short of fulfilling our understanding.

Drawing parallels with W.J.T. Mitchell’s exploration of the life of images in our society (2006), it can be argued that StyleGAN images lead their life within the latent space. Circumstances may change, training could be enhanced, and the model might be fine-tuned, among other variables. Hence, the released TroublingGAN model does not present a definitive response to the question of how a neural network would interpret the provided dataset. Instead, it acts as a snapshot in time, representing one among many potential trajectories that the training could follow.

Throughout the project, we executed the training process multiple times. The initial final version was trained as detailed in section 4. In 2022, we refined the training process by augmenting the dataset with an additional year’s worth of Reuter’s ‘Photos of the Week’ collections and opting for landscape compositions instead of the square format. Both models are available for comparative purposes.