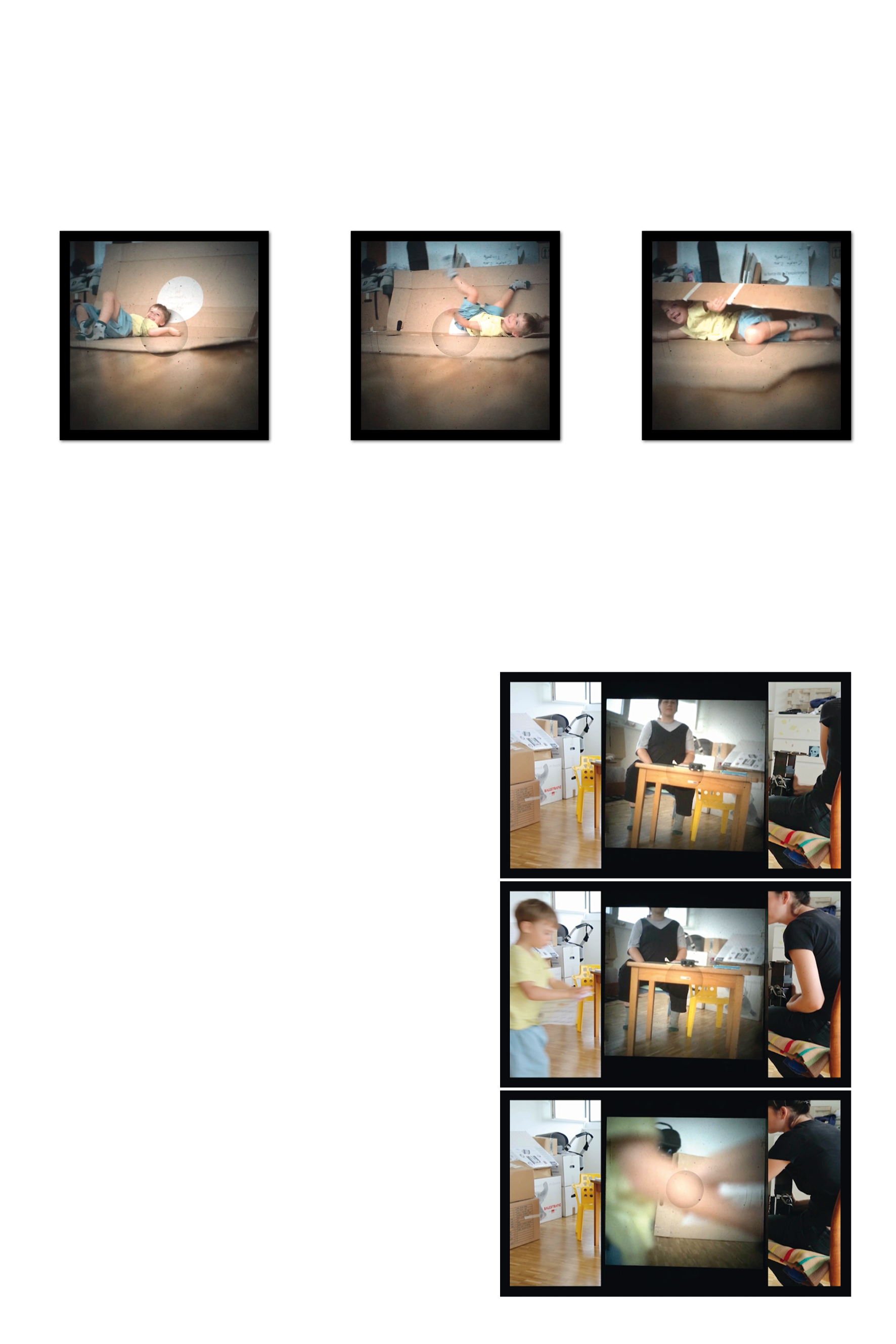

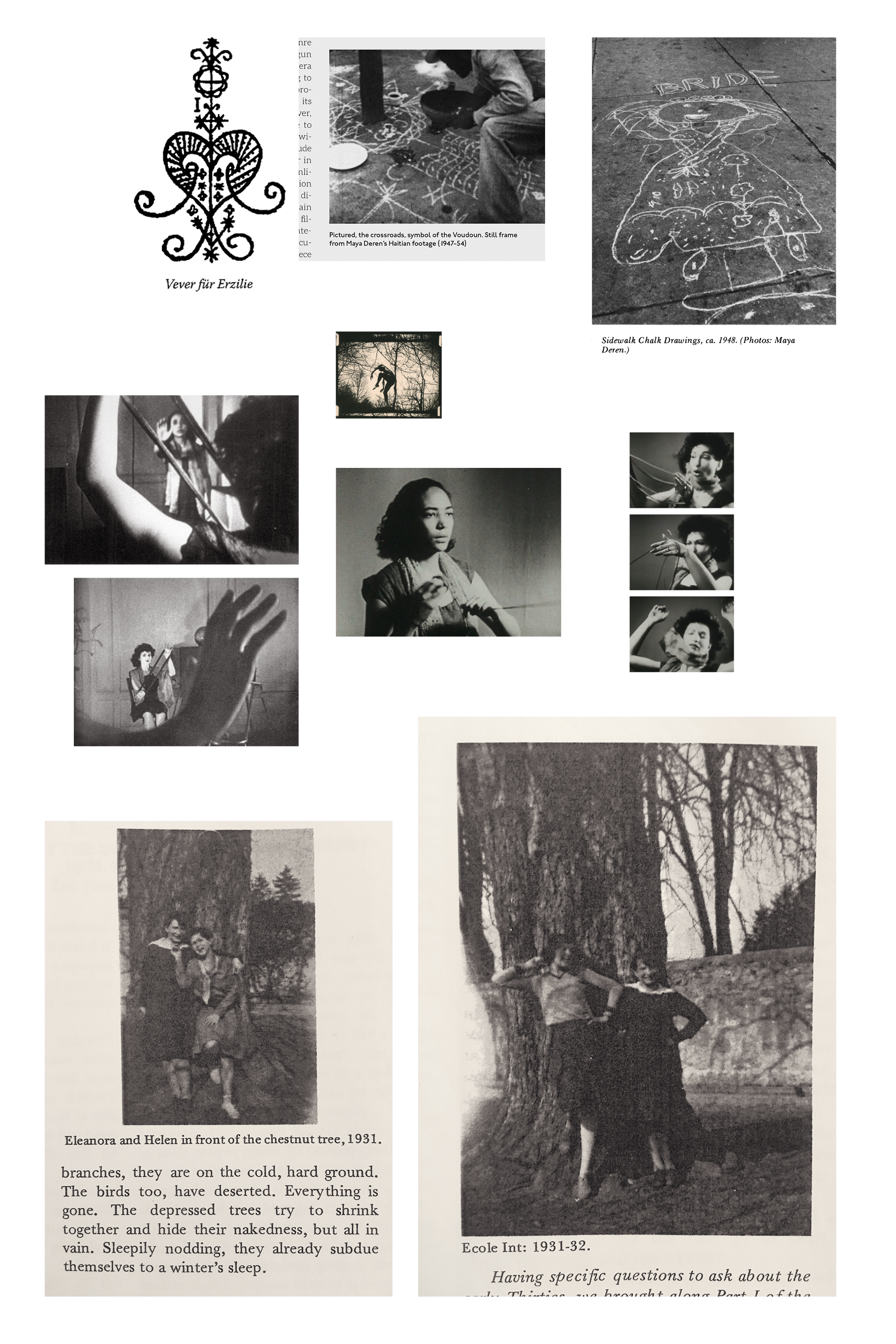

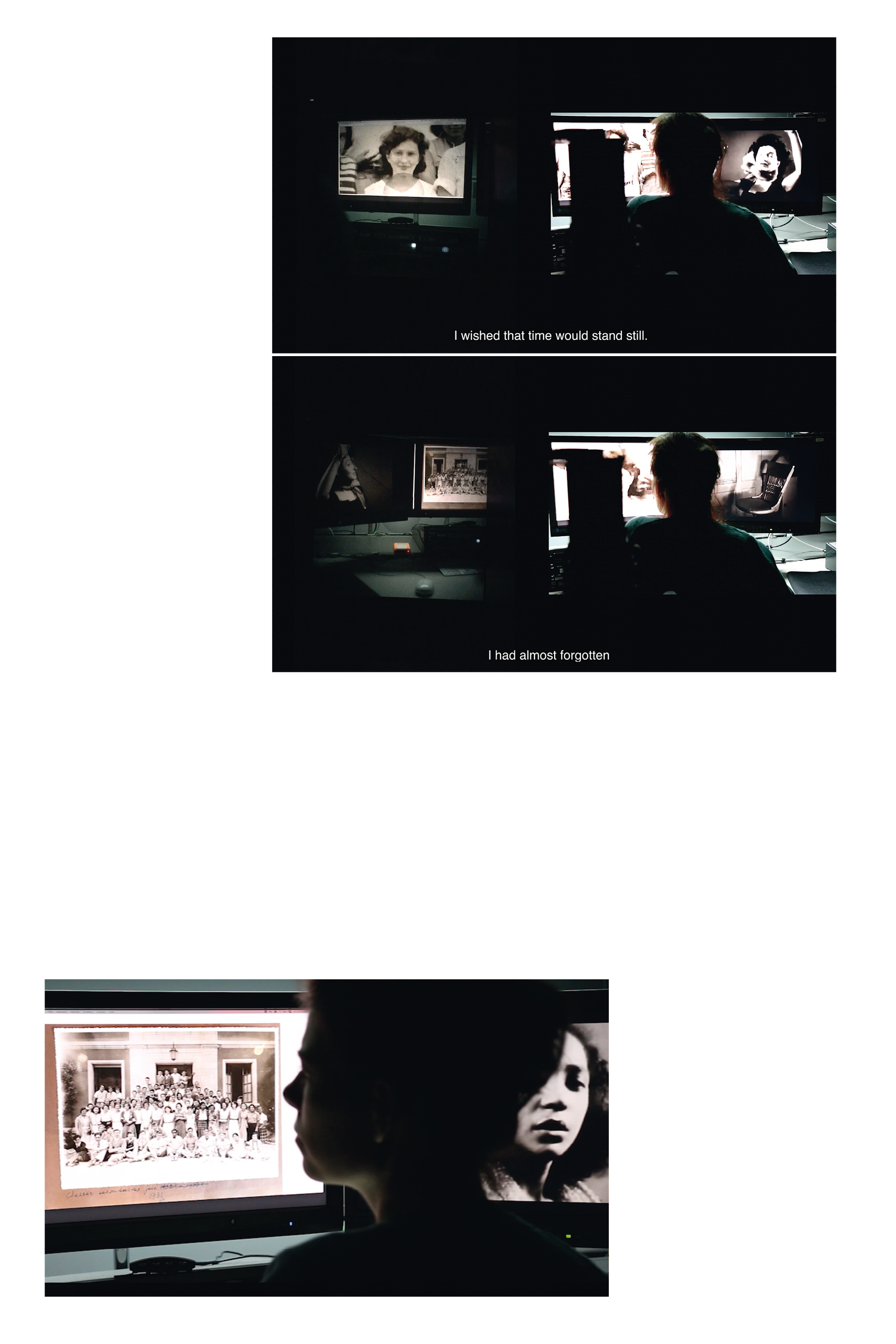

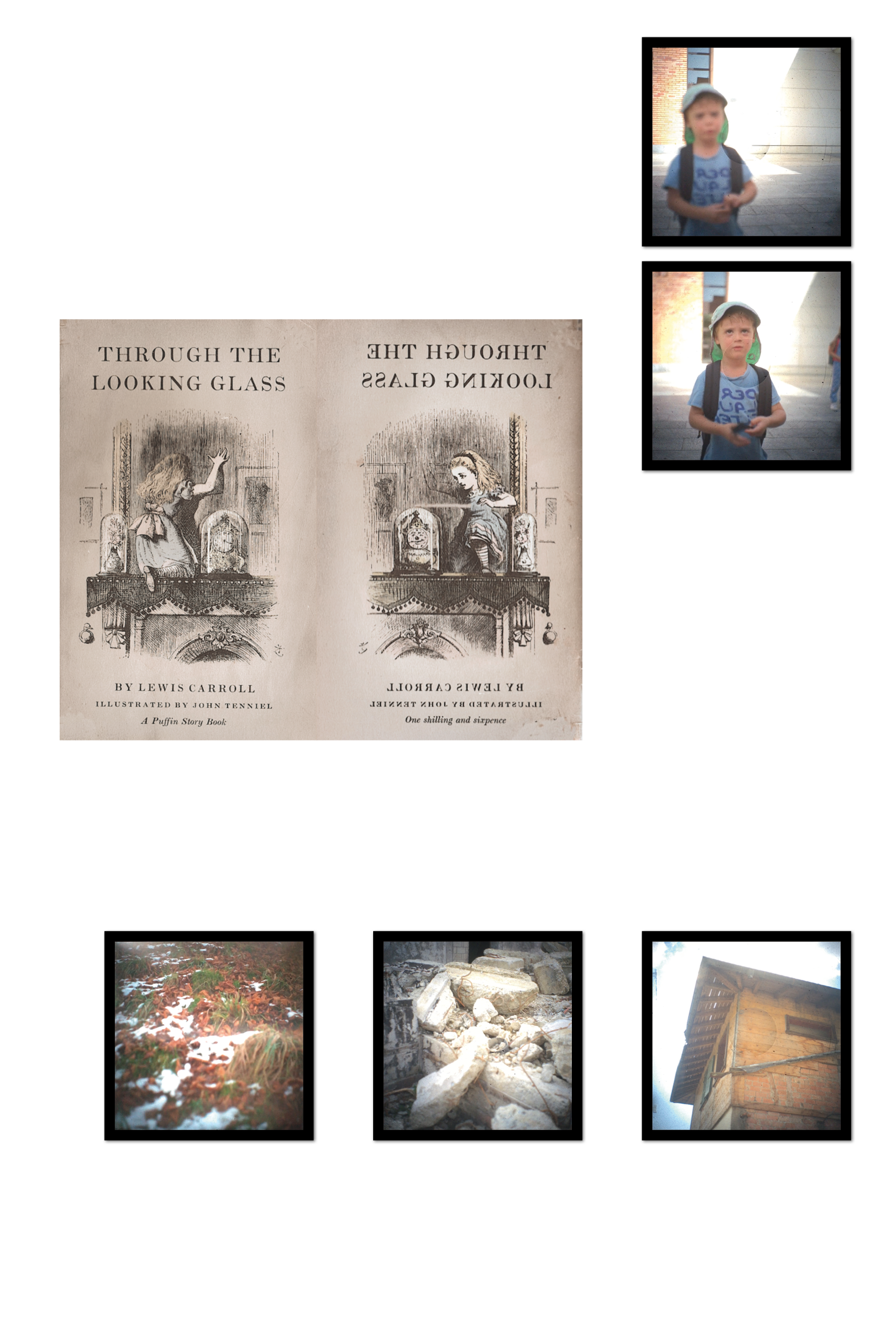

What was an innovation when the first video cameras emerged has, with smartphone cameras, become something we take for granted: images and sounds are recorded simultaneously in a single, compact format. The looking glass camera, too, automatically records sound digitally alongside the images, because it consists of a mobile phone camera that films what is seen through the viewfinder of a medium-format camera [V is for Viewfinder]. But the sound quality is low: the phone's microphone points in the wrong direction and is partly covered by the way the phone is attached to the camera's body. This led me to treat the split between image and sound more deliberately and consistently, as an artistic strategy.

At the beginning of the editing process, I cut the original sound along with the images, meaning that I worked with the audiovisual material synchronously, but even then the soundtrack remained secondary. The luminosity of the images exerted a force of attraction, so that sounds seemed to recede into the background or be blocked out. To put it in more acoustic terms: my hearing was muffled. Not in the sense of being less able to take in sound, but rather as concentration through reduction: I was listening to the images. What happens to the image when the sound is omitted? What do we hear nevertheless? What do we see differently? What role does hearing play in our perception of time? When I muted all the original sound in the medium-format recordings during editing, I found that different relationships emerged between sound and attention, between sound and memory, and between sound and atmosphere, and these proved crucial for the perception of time.

How is our attention shifted by a dripping gutter? Which associations are evoked by the shrill chirruping of swallows? Where does our concentration go when an alarm sounds? Approaching such questions through film gave rise to asynchronous montage techniques, which shift between synchronous and non-synchronous modes of perception.

“While the synchronized ear-eye depends upon ‘clock time’ and ‘coeval presence,’ the non-synchronous presumes to clearly separate ear and eye, placing them in distinct temporal and spatial zones, creating a relation of action-reaction, of randomness or commentary. In contrast, the asynchronous moves in between these two modalities, offering an ear-eye sometimes ‘in sync’, sometimes not. Aporetic gaps, uncertainties, disruptions, and durations are crucial.”59

Asynchronicity between image and sound runs counter to our habits of seeing. The alienation that results when sound is delayed, brought forward, or cut out brings doubts about 'natural perception' to the fore. Because the mobile phone camera records sound so poorly, the looking glass camera requires a deliberate and selective use of sync sound and thus produces an auditory alienation effect: the mainly silent images let us sense that immediacy is not the key to understanding here. At the same time, occasional sounds in the montage, such as the dripping of water, the twittering of swallows, or the ringing of an alarm, accentuate auditory modes of temporal perception.