On KVSwalk

Introduction

KVSwalk is an exercise in finding an identity for the computer performer in an ensemble context. While selecting a number of personal artistic projects dealing with notions of the body in computer music performance, and specifically with the idea of the disassociation of physical action and sonic manifestation, I realised that I had been trying to approach this problem from a research perspective, and had failed to communicate it to other musicians. I sought a definition of a computer performer and asked how such an individual could be shaped – how skills might be identified and learned from traditional instrumentalists and applied to computer music practice.

Initially, the idea of studying the role of the computer music performer was approached by dividing the roles demanded by computer music practice. First, there is the compositional aspect: the performer should be informed by the experience of composing electroacoustic music, with special focus on the interaction between electronic and traditional instruments. In addition, the creation of textures and timbres is part of the composer’s tasks, as is the generation of structures and the creative conceptualisation of dependence and interdependence between electronic and traditional instruments. Eventually, the development of notational systems pertinent to the computer-based instrument and its performer might also become part of the task.

Second, there is the role of the instrument designer and the technical skills required to develop the instruments and controlling devices, which are necessary to unfold compositional structures in front of an audience and in collaboration with other musicians. Decisions taken at a design level influence and determine the roles of the performer of such instruments by establishing the potentials and constraints of the computer system, as well as the gestures and actions to be used.

The third role, that of the performer, condenses both the compositional and instrument design decision making when determining the relationships between materials and actions, and activates them in the concert situation.

The study of these roles was approached pragmatically, with the aim of being informed as much as possible by traditional musical practices (instrumental composition, instrument building and performance). The clash between this initial, idealistic approach to connect traditional instrumental repertoire and practices with the experiences of performing repertoire conceived, or adapted, for live electronics, led me to the project I present here.

In the context of composing, I started investigating three different ways of defining musical structure by regarding composition as the creation of a structure or grid, or a time-length to be filled in by sound events and interactions between them. First, I looked into different music traditions and their approaches to combining musical structure and improvisation (e.g., the Real Book)1 and, second, into the fixing of improvisation as composition, a frequently met practice in electronic music creation: going into the studio, starting sound generation and manipulation processes, recording these results and later establishing architectonic and time organisation schemas.

The third approach, the one I discuss in this chapter, is the notion of the sonification of non-musical information. Sonification, as understood by the scientific community, can be defined as “the transformation of data relations into perceived relations in an acoustic signal for the purposes of facilitating communication or interpretation.” (Hermann 2010: n.p.) KVSwalk is presented as a way of using sonification procedures to structure composition and, in turn, apply these procedures in a live performance situation.

1 A collection of lead sheets of jazz standards started by Berklee college students during the late 1970s.

Larry Polansky introduces the term manifestation to try to differentiate sonification with scientific and illustrative purposes from those endeavours with artistic pretensions. For Polansky, the deviation from a purely functional manifestation of data into sound is essential for the understanding of the artistic nature of a sonification procedure (or in his words, a manifestation). He elaborates: “There is no canon of art which necessitates ‘efficiency,’ ‘economy,’ or even, to play devil’s advocate, ‘clarity.’ While a great many artists would agree that these are desirable qualities, art in general has no such rules, requirements. While these notions might be (and are) useful starting points for many beginning artists, and pedagogically productive, once established as principles they must of necessity be confounded or least manipulated by most working artists”. (Polansky 2002: n.p.)

Particular takes on the notion of sonification can be found in the work of artists such as Gerhard Eckel, who understands creative sonification as the articulator for mapping strategies between physical movement and sound,3 and in that of Andrea Polli, who works in collaboration with atmospheric scientists to develop systems for understanding storm and climate through sound using sonification procedures.4

In a simplified fashion, sonification procedures have the following steps:

- Selection of input data

- Definition of parameters

- Mapping (and scaling)

- Realisation
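The four steps above can be sketched in a few lines of code. The following Python sketch is illustrative only: the function names, the choice of pitch (as MIDI note numbers) and amplitude as target parameters, and the default ranges are assumptions made for the example, not a description of any particular piece.

```python
def scale(value, in_min, in_max, out_min, out_max):
    """Linearly rescale value from [in_min, in_max] to [out_min, out_max]."""
    if in_max == in_min:
        # Degenerate input range: return the middle of the output range.
        return (out_min + out_max) / 2.0
    position = (value - in_min) / (in_max - in_min)
    return out_min + position * (out_max - out_min)


def sonify(data, pitch_range=(48.0, 84.0), amp_range=(0.1, 1.0)):
    """Turn a list of numeric readings into a list of sound-event descriptions."""
    # 1. Selection of input data: `data` is the chosen series of readings.
    low, high = min(data), max(data)
    events = []
    for reading in data:
        # 2. Definition of parameters: each reading controls pitch and amplitude.
        # 3. Mapping (and scaling): rescale the reading into each parameter range.
        events.append({
            "pitch": scale(reading, low, high, *pitch_range),
            "amp": scale(reading, low, high, *amp_range),
        })
    # 4. Realisation: the events would be handed to a synthesiser or a score;
    #    here they are simply returned.
    return events
```

Changing only the ranges passed to `sonify` already alters the character of the result considerably, which is one way to see why the mapping stage carries so much of the compositional weight.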

As an example of my own attempts at data sonification, Tellura (2003–2004) uses data from seismographic stations – telluric activity detected in five different submarine and terrestrial locations around the world. My main interest in developing this piece using sonification procedures was to be able to create a result that would resonate with the original source of the data. Thus, in choosing data connected to earthquakes, I intended that the final sound world and structure of the piece would reflect this by resembling the imaginary sound world of an earthquake.

As a composer, I found it important to take this approach, given that the risk of disconnection between the source of the data used and the final sound result was one of my main criticisms of sonification as a compositional procedure. I sought to find a middle ground between the notion of conveying information about the origins of the data and the creation of a musical structure.

The main limitation I found with this method of working was that I consistently failed when trying to merge the aesthetic and structural results from my sonification work together with writing for traditional instruments.

My transition towards an understanding of how I could manipulate traditional instruments into coming closer to the sound world I was creating with sonification procedures began when I focused on the performative aspect of the material I was working with. This entailed analysing and stretching myself as if I were a traditional instrumentalist, while still being able to produce similar sounds to those generated electronically by sonification procedures.

2 Xenakis mentions Concret PH as part of a series of works where he used stochastic principles to define musical structures: “Stochastics is valuable not only in instrumental music, but also in electromagnetic music. We have demonstrated this with several works: Diamorphoses 1957–58 (B.A.M. Paris), Concret PH (in the Philips Pavilion at the Brussels Exhibition, 1958); and Orient-Occident, music for the film of the same name by E. Fulchignoni, produced by UNESCO III 1960.”(Xenakis 1992: 43)

3 Eckel and his collaborators have developed the notion of using physical movement as auditory feedback, and applied it for both artistic and medical purposes. For more information visit http://iem.at/~eckel/science/science.html.

4 For more on Andrea Polli and her projects, visit http://www.andreapolli.com/.

Context

Some antecedents of data sonification

Without claiming it is a real sonification example, Iannis Xenakis’s early orchestral works might illustrate the intention to derive musical structure from extra-musical material. For example, in Pithoprakta (1956), he aimed to construct a musical analogue for the motion and collision of gas molecules, on the basis of the idea that, although it is not possible to determine the motion of an individual particle, it is possible to find the average for the change of position of a cluster of particles over a discrete period of time, and, therefore, to measure an average speed behaviour, which is a mathematical description of the temperature of the gas. Xenakis mapped data of this kind onto a graph of pitch versus time, which was applied to the fingerboard and bow positions for an ensemble of string instruments.

I consider this piece to be a direct antecedent of what in electronic music was later termed sonification. Xenakis himself used similar procedures to translate data into sound in composing Concret PH (1958), a work that could be considered as something between a musical composition and a sound installation, and which was created to be played as an interlude between performances of Poème électronique (1958) (an audiovisual work by Edgard Varèse and Le Corbusier) inside the Philips Pavilion at the 1958 Expo in Brussels.2 I mention Xenakis and Concret PH in order to frame each of my projects in relation to a particular aesthetic context. In other words, the music and procedures for music creation that I want to present operate either as influenced by or in resistance to a particular work or artist.

In electroacoustic music, sonification is often used to describe musical structure on the basis of non-musical data. Composers such as Ed Childs presented sonification as the scientific representation of data into sound. Possible distinctions between kinds of sonification can be seen in the following categorisation (Burk, Polansky, Repetto, Roberts and Rockmore 2011: n.p.):

Iannis Xenakis: Pithoprakta (excerpt). Performed by Orchestre National de l'O.R.T.F, conducted by Maurice Le Roux. Le Chant du Monde, LDX-A 8368.

- audification: the direct rendering of digital data in (usually) sub-audio frequencies to the audible range, using resampling. Example: speeding up an hour of seismological data to play in a second.

- auditory icons/earcons: using sound in computer GUIs (graphical user interfaces) and other technological interfaces to orient users to menu depth, error conditions, and so on. For example, a cell phone company could design an AUI (auditory user interface) for its cell phones so that users do not have to look at the little LCD display while driving to select the desired function.
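The audification example in the list above (an hour of data played back in a second, that is, a speed-up factor of 3600) can be illustrated with a minimal resampling sketch. The function name, the default audio rate of 44100 Hz and the use of plain linear interpolation are assumptions made for the illustration; a practical implementation would low-pass filter the data before reducing the number of samples, to limit aliasing.

```python
def audify(samples, source_rate, speedup, audio_rate=44100):
    """Resample `samples` (recorded at `source_rate` Hz) so that playing the
    result at `audio_rate` Hz runs `speedup` times faster than real time."""
    if len(samples) < 2:
        return list(samples)
    # Speeding up by `speedup` is equivalent to treating the data as if it
    # had been recorded at source_rate * speedup Hz.
    effective_rate = source_rate * speedup
    duration = len(samples) / effective_rate       # output length in seconds
    n_out = max(1, int(round(duration * audio_rate)))
    step = effective_rate / audio_rate             # input samples per output sample
    out = []
    for i in range(n_out):
        pos = i * step
        left = int(pos)
        if left >= len(samples) - 1:
            # Past the last interpolation interval: hold the final value.
            out.append(samples[-1])
            continue
        frac = pos - left
        # Linear interpolation between the two neighbouring input samples.
        out.append(samples[left] * (1.0 - frac) + samples[left + 1] * frac)
    return out
```

With one hour of readings taken at a hypothetical 20 Hz, `audify(readings, 20, 3600)` yields one second of audio, and every frequency in the data is multiplied by 3600, so a 0.1 Hz oscillation would be heard at 360 Hz.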

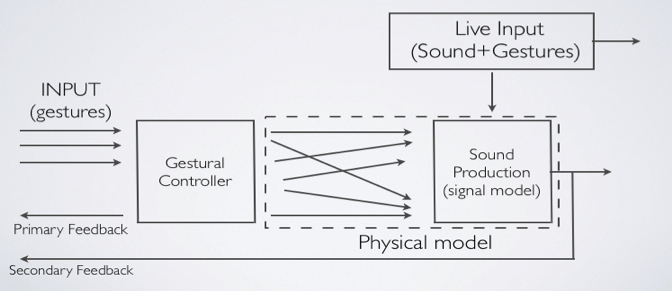

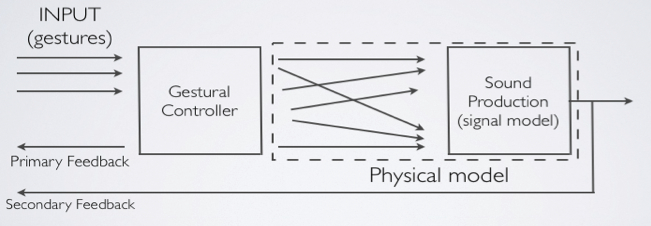

I have been working towards adapting both Wanderley’s and my own models of instrument design into musical composition and performance, presenting them as the definition of musicianship in live computer music with the primary intention of collaborating with traditional instrumentalists, both in the interpretation of pre-existing repertoire and in the performance of new compositions. To do so, I extended Wanderley’s model by adding live input, both sound and gesture. This is to say, a traditional instrumentalist (e.g., a violinist) interferes in the model of the digital instrument, rather than the other way around.

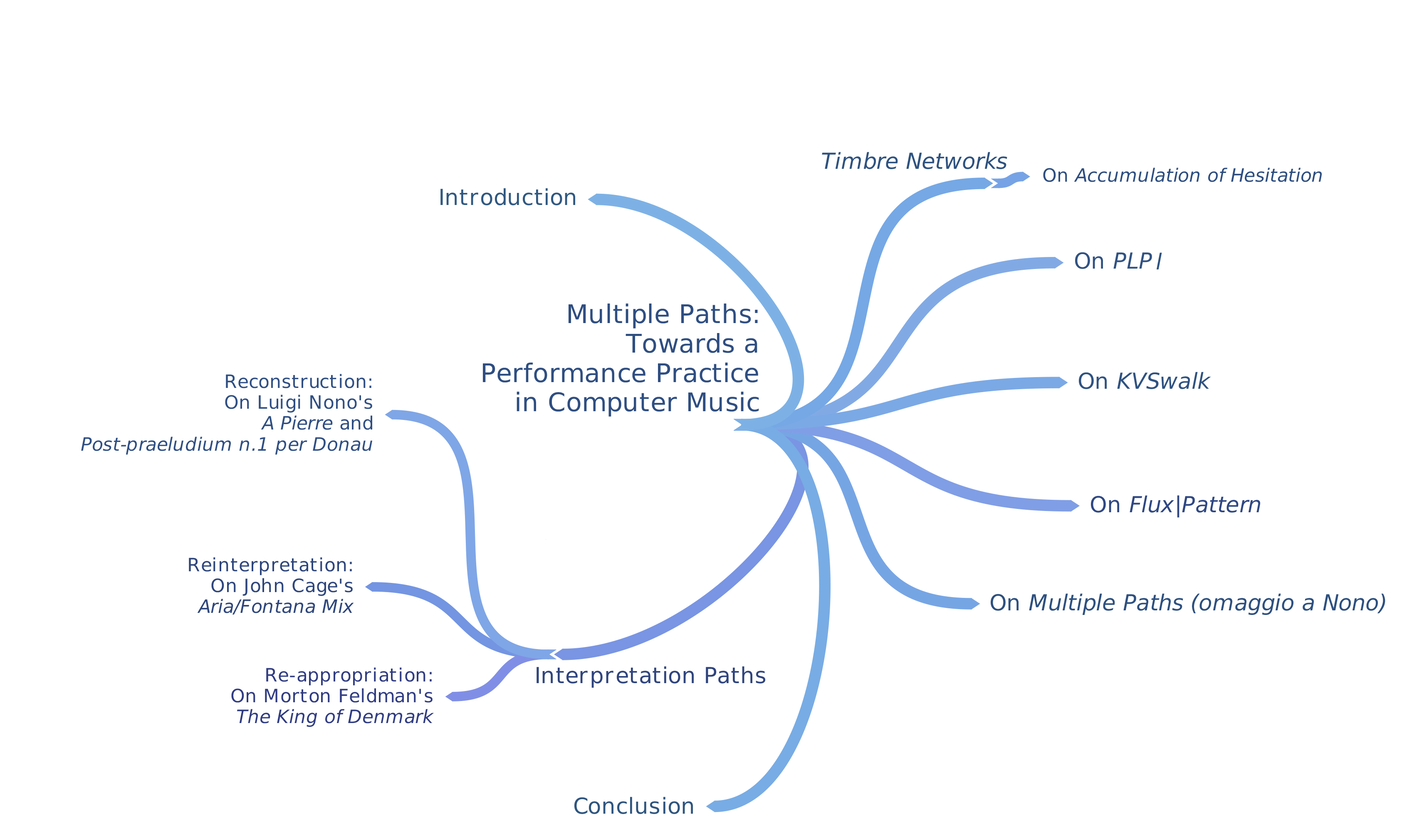

I developed the notion of Timbre Networks in an attempt to systematise these procedures, moving away from this metaphor of interference when dealing with existing repertoire for traditional instruments and electronic media and towards an integral model of creation and performance of live computer music. In other words, I aimed to create an instrument which itself was a composition, and which demanded a performance practice that would allow it to be included in different performative contexts.

The concept of Timbre Networks was a way to describe interactions between a number of (musical) nodes in a musical structure. By finding correlations between several definitions of timbre, texture and network, the goal was to present possible relationships between the computer as a tool and other musical instruments: as a self-contained instrument, as a signal processor, and as a tool for the analysis and scaling of incoming data. It also aimed to incorporate the decision-making tasks of the composer into the performative arena, both for the electronic performer and the traditional instrumentalists involved in the network. Together, each performer’s part of the network would contribute by exploring and playing with:

- Texture composition

- Texture manipulation

- Timbre flexibility

- Interdependence of musical and physical gestures

An example of a self-contained timbre network is my Accumulation of Hesitation (AoH) from 2008, where I used a Max/MSP port of GENDY, a digital implementation of the dynamic stochastic synthesis algorithm conceived by Iannis Xenakis and described in his book Formalized Music (1992), to generate both the initial sound materials and the control streams for their signal processing. Additional sound material and control information for AoH was generated by a feedback circuit, using a no-input mixer5 as a live instrument.

After my experience with developing the concept of Timbre Networks, I realised that I was aiming to present the potential of the computer as a musical instrument while also finding a common ground on which to interact with traditional instrumentalists. This second aspect began to take more concrete shape through collaborative work with Mieko Kanno, Catherine Laws and Stefan Östersjö, which led to the creation of KVSwalk.

The status quo of performance in live electronic music

In order to apply sonification procedures in a live performance context, I first had to review the demands made of the electronic performer in the existing repertoire for ensemble and live electronics.

I started investigating repertoire in which an electronic performer was deemed part of an ensemble. I realised that, in most cases I encountered as a performer, the contribution demanded from an electronic practitioner in the existing repertoire was often limited to live-triggering pre-recorded sound files, acting as a human score-follower to cue the starting and stopping of live processing of traditional instruments, and being a mixing technician who controls the balance between the instrumental and electronic sound sources. I am referring to works by, for example, Kaija Saariaho (Six Japanese Gardens, 1994; Vent Nocturne, 2006), Philippe Manoury (Partita I, 2006), Cort Lippe (Music for Cajon and Computer, 2011) and Richard Karpen (Strand Lines, 2007), to name a few.

At the beginning of my research, these limited tasks allowed me to work towards the development of a performance practice, mainly through reinforcing the interactive nature of the actions demanded in relation to other performers in a concert situation: executing these actions onstage and seeing how my presence affected the other performers’ and the audience’s perception of the musical event.

I tried to understand what kinds of action such a rudimentary computer performer needed to be concerned with. Focusing on the existing repertoire up to the beginning of the twenty-first century, the demands on the computer performer could be listed as follows:

- Triggering a synthetic layer.

- Dealing with onsets and offsets of the different sections in a piece (involving many physical actions with no direct sonic consequence).

- Controlling and moving sound events in the physical space (also demanding physical actions with no linear sound result).

In this threefold scenario, it is the traditional instrumentalist who provides the control information to the synthetic layer through harmonic and amplitude content. The instrumentalist’s signal also feeds the Digital Signal Processing (DSP) component of a piece.

This is the way most repertoire for traditional instruments and live-electronics still operates; however, by performing these pieces, one can be confronted with certain technical issues and limitations. First, there is the logistical difficulty of signal extraction in a live performance situation. This is normally overcome by using a pickup or a microphone, where very small placement differences significantly influence the overall behaviour of the instrument-computer system; however, such differences are to be expected in a real-life scenario, such as at a festival, where the setup needs to be changed rapidly between performances.

Second, there is the confusion of signal analysis (i.e., the extraction of pitch and amplitude information in real time from a performer) with musical analysis (which would suggest the ability of the system to understand and judge the musical nuances produced by the decision-making of a performer). This misconception effectively demotes the traditional performer, however virtuosic, to the role of generating pitch and amplitude events over time.

This kind of musical setup risks reducing the traditional performer’s potential for expression, while also underusing the potential of another musical decision-maker, the electronic performer. Music technologist Miller Puckette and composer Cort Lippe were already concerned with the idea that, for these pieces to be genuinely responsive and to be appreciated by audiences as being generated by the decision-making of the musicians involved, they were forced to reduce the instrumentalists’ input only to pitch and dynamic: “We can now provide an instrumentalist with a high degree of timing control, and a certain level of expressive control over an electronic score. [...] A dynamic relationship between performer, musical material, and the computer can become an important aspect of the man/machine interface for the composer, performer, and listener, in an environment where musical expression is used to control an electronic score.” (Lippe and Puckette 1994: 64)

I proposed to contribute to an understanding of what this “musical expression” might mean by enhancing the decision-making potential of the electronic part, even in pieces where the electronic part was designed to be autonomous by responding to the signal of the traditional performer. I returned to the ideas I used when creating a method for my sonification pieces, and defined a very simple and systematic procedure:

- Design of the instrument

- Selection of the repertoire

- Definition of the instrumentation

- Realisation

Following Marcelo Wanderley’s model for digital instrument design, which focuses on describing the performer’s “expert interaction by means of the use of input devices to control real-time sound synthesis software” (Wanderley 2001: 3), one can split the task of creating the instrument into two components: software and hardware. The software side includes composing or programming the software environment, defining the tasks and limitations of the instrument and the parametrisation of the instrument. The hardware side includes the design of the gesture acquisition interface (for example, hand motions, hitting keys), the design of the gesture-acquisition platform (sensors, cameras, trigger interfaces) and analogue-to-digital conversion for the gestures.

5 A musical instrument first made popular by Japanese sound artist Toshimaru Nakamura, a no-input mixer is made by connecting the outputs of a sound mixing board into their inputs, generating a series of feedback loops in the mixer’s circuit, which in turn can be used as a somewhat crude and unpredictable electronic instrument, when compared to pitch-graded electronic instruments.

Incorporating the concept of the Kármán vortex street in the structuring of my collaborative work with Kanno, Laws and Östersjö was a response to the challenge of relating to sonification as a compositional tradition in electronic music. It allowed me to understand sonification in relation to the idea of embodiment as transformation and assimilation, and to explore how I could bring this primarily studio-based compositional practice into a live performance dimension. In short, I asked myself how a sonification piece could be presented in performance, with all the procedures, data capturing, scaling and mapping exposed in a concert situation.

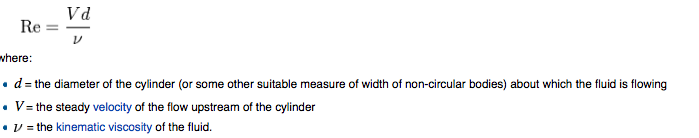

Procedure

I designed a spatialisation and dynamic control algorithm based on the formula of the Kármán vortex street phenomenon. This phenomenon occurs when the flow of a fluid is interrupted by a cylinder-like object, which generates a train of vortices in a zigzag pattern, rotating in opposite directions. In nature this phenomenon may be seen in water flow where streams are interrupted by a mountain. There are numerous examples of audible manifestations of the phenomenon, such as the singing of telephone lines or the ascending high-frequency noise audible during a plane landing. In each case, high and low pressures interact with each other and create a particular shape: a train of vortices. While performing KVSwalk, I manually trigger a new start of the Kármán vortex street with each new instrument incorporated in the piece, which sets the incoming instrument signal moving in the physical space at a rate determined by the harmonic spectrum of the instruments used.
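The pitch of a "singing" wire can be estimated with the Strouhal relation, f = St · U / d, where U is the flow speed, d the diameter of the obstacle and St the Strouhal number. The sketch below assumes St ≈ 0.2, a common approximation for circular cylinders over a broad range of flow conditions; it is offered as an illustration of the phenomenon and is not claimed to be the formula used in the piece. The function name and example values are hypothetical.

```python
def shedding_frequency(flow_speed, diameter, strouhal=0.2):
    """Estimate the vortex shedding frequency (Hz) behind a cylinder.

    flow_speed: speed of the fluid in metres per second
    diameter:   diameter of the cylinder in metres
    strouhal:   dimensionless Strouhal number (about 0.2 is an assumed
                typical value for a circular cylinder)
    """
    if diameter <= 0:
        raise ValueError("diameter must be positive")
    return strouhal * flow_speed / diameter


# Example: wind at 10 m/s across a 5 mm wire.
wire_tone = shedding_frequency(10.0, 0.005)
```

Under these assumptions the estimate lands well within the audible range, and it rises and falls with the wind speed, which is consistent with the fluctuating pitch associated with power and telephone lines.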

The manipulation of sound in space

On the basis of the work of mechanical engineers Lionel Espeyrac and Stéphane Pascaud (2001), who designed a digital model of the traditional method used in physics to create the Kármán vortex street, I developed a spatialisation system to distribute streams of processed sound material over a virtual auditory plane following the motion and energy loss of the vortex model. The sound streams were created by analysing incoming (instrumental) sound and freezing its spectral component to simulate a continuous sonic flow. For each flow, the direction of the vortex (clockwise or counterclockwise) was left open to the decision of the electronic performer. The duration of each process was dependent on the initial energy (average amplitude and attacks over the duration of a specific time window). An additional aspect of the algorithm left to the control of the electronic performer was the determining of the initial location of each stream. By default, each instrumentalist would feed a vortex that would start in a loudspeaker located opposite his or her position on stage, but its routing could be interfered with by the electronic performer at any time.
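To make the behaviour described above more concrete, here is a deliberately simplified sketch, not the system used in the piece. It assumes that the initial energy is summarised as a mean absolute amplitude plus a count of attacks within a window, and that the vortex path alternates from side to side of a flow axis while its energy decays by a fixed factor; all names, thresholds and default values are hypothetical.

```python
def window_energy(window, attack_threshold=0.5):
    """Return (mean absolute amplitude, number of attacks) for a sample window.
    An attack is counted when the level rises across `attack_threshold`."""
    mean_amp = sum(abs(s) for s in window) / len(window)
    attacks = sum(
        1 for prev, cur in zip(window, window[1:])
        if abs(prev) < attack_threshold <= abs(cur)
    )
    return mean_amp, attacks


def vortex_path(initial_energy, clockwise=True, start=(0.0, 0.0),
                decay=0.5, floor=0.1, spacing=1.0, width=0.5):
    """Return a list of (x, y, energy) points for one sound stream.

    The stream advances along x and alternates between +width and -width
    around the start position; the performer's choice of direction decides
    which side comes first. Energy is multiplied by `decay` at each step,
    and the process ends once it drops below `floor`, so a higher initial
    energy gives a longer process."""
    if not 0.0 < decay < 1.0 or floor <= 0.0:
        raise ValueError("decay must lie in (0, 1) and floor must be positive")
    x0, y0 = start
    side = 1.0 if clockwise else -1.0
    path = []
    energy = initial_energy
    step = 0
    while energy >= floor:
        offset = side * width if step % 2 == 0 else -side * width
        path.append((x0 + step * spacing, y0 + offset, energy))
        energy *= decay
        step += 1
    return path
```

In a concert setting each point would still have to be translated into loudspeaker gains, and the starting position would correspond to the loudspeaker opposite the instrumentalist unless the electronic performer chose otherwise.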

The final control parameter of the spatialisation system was the dynamic scaling of the frozen sound streams. Before the triggering of a new stream, the performer selected whether the centre frequency of the incoming instrument would remain unaltered or would be scaled to either 0.5 or 4 times its value, a decision that affected the timbral qualities of the electronic sound streams (by transposing them one octave lower or two octaves higher), while the duration of the processes and their energy fluctuations remained unchanged.
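The interval implied by a frequency scaling factor follows from the base-2 logarithm of the ratio, since each doubling of frequency raises the pitch by one octave. The short sketch below makes that relation explicit; the helper names and the 196 Hz example (roughly the G below middle C) are chosen for illustration only.

```python
import math


def octave_shift(ratio):
    """Return the transposition, in octaves, produced by multiplying a
    frequency by `ratio` (negative values mean a downward transposition)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return math.log2(ratio)


def scaled_frequency(centre_hz, ratio):
    """Return the centre frequency after scaling by `ratio`."""
    return centre_hz * ratio


# Scaling options for a hypothetical centre frequency of 196 Hz.
options = {ratio: (scaled_frequency(196.0, ratio), octave_shift(ratio))
           for ratio in (0.5, 1.0, 4.0)}
```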

Project

To integrate the compositional ideas defined in Timbre Networks with the interest of applying sonification procedures in live performance, I sought a unifying concept that could encompass dealing with the relationship between instrument and performer, the translation of data from one medium into another and the use of mapping strategies as a unifier of both.

The notion I used to articulate this project was that of embodiment, defined as “how the body and its interactive processes, such as perception or cultural acquisition through the senses, aid, enhance or interfere with the development of the human condition” (Farr, Price and Jewitt 2012: 6); therefore, I understood the body as a possible agent when dealing with the assimilation and translation of concepts. I propose then to consider procedures of sonification and/or visualisation as valid processes of embodiment as understood by Farr, Price and Jewitt. When translating data into sonic or visual streams of information, the interaction between the data and the translating agents will produce the same interferences as when the human body is the agent of assimilation.

My interest in understanding possible interpretations of the notion of embodiment, and how these could be articulated in sound, became the starting point for the collaboration process leading to KVSwalk. To structure the piece, I considered embodiment as physicality, as assimilation and as transformation, and sought to create different sound layers connected to these notions. Simultaneously, I wanted to articulate how these layers interacted with one another using an external shape. I chose to implement the Kármán vortex street formula (Kármán 1954: 76), used in fluid dynamics, as an algorithm for spatialisation and dynamic control because of its actual shape and motion.

I chose it also for its metaphorical potential and for the sonic derivatives that it suggested, given its manifestation in nature both physically (in cloud formations) and aurally (in the fluctuating pitch of electricity power lines).

The instrumental sounds

To create the initial sound objects for KVSwalk, I followed a similar path to the one used in Tellura. The main difference was that the generation of sound material, created using only synthetic algorithms in Tellura, was conceived in KVSwalk as a task for a traditional instrumental ensemble.

Following the notion of embodied know-how, where the “inherently synesthetic as well as multi-modal nature of the human being, by patiently integrating sensorimotor, intellectual and embodied capacities toward expert artistic skill in a specific domain, emerges” (Coessens 2009: 272), I attempted to create for the performers a conceptual distance from their embodied instrumental knowledge and replaced it with more abstract, gestural and motion-oriented tasks, creating a set of initial materials that I could treat as sound-objects with an unspecified duration.

At the same time, this artificial distance between the requested actions and the resulting sounds allowed me to reorganise the collection of materials according to timbral and harmonic similarity, regardless of the instrument. The process of exploration focused on the physical motions of the performers on their instruments, levelling the pitch material (by using a single pitch as the central tone, as well as for departure and arrival of pitch-lines) and testing different ways of creating bridges between the timbres of the different instruments, either through performance (extended techniques) or artefacts (such as tin foil) added to the instruments like prepared piano accessories.

After a period of experimentation, the selection of material was made by grouping sound events according to the similarity or difference between the physical action performed to excite the instrument and the sonic result. In doing this, I found that some events that sounded alike required very different actions to be produced. On the other hand, imitating physical actions on different instruments would lead to sound results with varying degrees of similarity among them.

I distributed the selected material in a time line for each instrument, following these two axes:

1. (Physical) gesture similarity < > (sonic) gesture difference

2. (Sonic) gesture similarity < > (physical) gesture difference

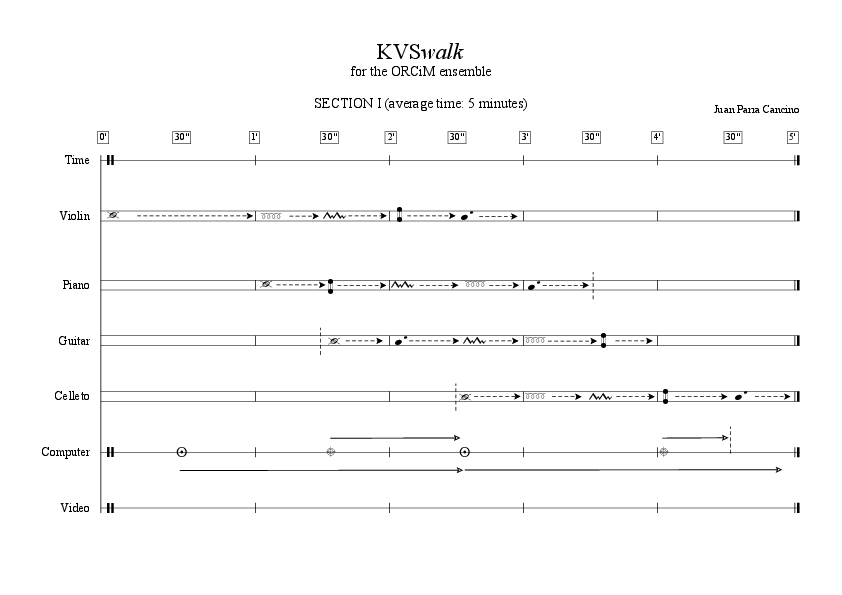

This experimentation and selection process was carried out with the invaluable collaboration of Mieko Kanno (violin), Catherine Laws (piano) and Stefan Östersjö (guitar), in a series of meetings over a period of four months. A parallel process was conducted online with Chris Chafe, composer, cellist and designer of the celleto, a hybrid electronic instrument derived from the cello, which he used for his collaboration in this project. The resulting material was then arranged in a score in which each instrumental action and each gesture would be sonically different from its predecessor, and at any given moment during the piece the physicality involved would be as (metaphorically) connected as possible with the other ongoing (physical) gestures. Alongside this, a first (instrumental-only) structure for the piece was completed, in which every meta-gesture (that is to say, a gesture in both physical and sonic senses of the word) was assigned a symbol and a guideline description for its performance.

Gesture groups:

I >

Violin: Brushing of the bow over strings, without producing a clear pitch.

Piano: Brushing of the strings in the soundboard, without producing a clear pitch.

Guitar: Perform muted attacks, progressively increasing speed and moving towards a fast tremolo.

Celleto: Brushing of the bow over strings, without producing a clear pitch.

II >

Violin: Pizzicato on G (any octave).

Piano: Play high G on any octave over a pedal on the lowest G.

Guitar: Simulate a continuous glissando, starting and finishing in G.

Celleto: Pizzicato on G (any octave).

III >

Violin: Undetermined pitch. Continuous, yet irregular bow weight.

Piano: Random “walk” of hands over keys.

Guitar: Irregular excitation of strings, from upper to lower register. End with a slowdown on the lower octave.

Celleto: Undetermined pitch. Continuous, yet irregular bow weight.

IV >

Violin: Ricochet, use aluminium foil on fingerboard.

Piano: Scattered notes with aluminium foil on soundboard.

Guitar: Transition from pitch-less tremolo to natural harmonics with G as the fundamental frequency.

Celleto: Ricochet, use aluminium foil on fingerboard.

V >

Violin: Searching for "unattainable" harmonics (undetermined pitch).

Piano: Play weak-action clusters around G.

Guitar: Transition from tapped tremolo around G (higher registers) towards low-registered phrases using aluminium foil on fretboard.

Celleto: Searching for "unattainable" harmonics (undetermined pitch).

Structure

Once the initial instrumental skeleton of the piece and of the sound processing and spatialisation algorithms had been completed, the next task was to define structural pivot-points for interaction between the instruments and the electronic components of the piece. To reinforce the metaphoric gesture of the Kármán vortex street as a series of energy impulses that should propagate in a somewhat cyclical motion, I had the idea to extend the exploratory nature of the piece by revisiting the concept of embodiment as a new source to inform different materials and layers.

As I stated before, two possible interpretations of the notion of embodiment could work simultaneously on different levels – the physical relationship between the performer and his or her instrument, and the assimilation and transformation of non-musical ideas into sonic and visual manifestations. KVSwalk attempted to shed light on how the multiple meanings of the concept of embodiment in electronic music could be interpreted, and how these could be used to create different musical materials and help structure a composition. It was at this point that I incorporated new layers of material (video, additional electronics) in order to sonify and visualise these different processes.

A map of the piece: multiple meanings for multiple layers

After having described the five gesture groups that constitute the basic instrumental material for KVSwalk, I will now present the five layers that together formed the structure of the piece. The final version of KVSwalk contains five layers of musical information, each of which responds to a different approach/experiment/exploration of a particular notion of embodiment.

- (1) Instrumental layer (gesture groups)

This layer consists of the physical gestures required to produce sound on a particular instrument, and experiments with finding points of connection between the physicality required to produce sound by each instrument and the timbral similarity of the resulting sounds.

- (2) (Networked) instrumental layer

This layer is concerned with the same interpretation of embodiment, but sheds light on the (potential) change in perception when the visual and auditory elements are disengaged. It is presented here as the first networked element of the piece: Chris Chafe (celletto) was faced with the same challenges as the other instrumentalists, but his contribution differs from that of the other players due to his physical absence from the stage. Dealing with only the sonic result of the physical gesture raises new challenges and questions when setting up a collective performance situation, and is intended to explore the limits and possibilities of disembodied chamber music interaction.

- (3) Computer signal processing layer

This layer focuses on bridging two different embodiments: the manifestation (in sound) of a concept (the Kármán vortex street phenomenon) and the relationship of the performer’s body to an instrument (the computer).

- (4) Video layer

This layer deals with embodiment as translation from a form or concept into another medium (visualisation as embodiment). This was done by analysing certain parameters of the live performance (amplitude thresholds and deviation from the centre tone), and mapping the resulting values onto a two-dimensional space.

- (5) (Networked) computer synthesis layer

This layer is an experiment in transliteration (a sonification of the visualisation process). The resulting images and motions of the video layer were converted into linear data streams and then translated into sound by a computer synthesis engine programmed by Henry Vega in the SuperCollider environment.
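The analysis-to-image mapping described for the video layer (4) can be illustrated with a short sketch. This is not the software written for the piece: the function name, the centre frequency of 196 Hz, the threshold value and the one-octave range are all hypothetical choices, made only to show how an amplitude threshold and a deviation from a centre tone might be turned into a point in a two-dimensional space.

```python
import math

def map_to_plane(amplitude, frequency_hz, centre_hz=196.0,
                 threshold=0.1, max_cents=1200.0):
    """Map one analysis frame to a point in the unit square, or None.

    All parameter defaults are illustrative assumptions, not values
    taken from the piece.
    """
    if amplitude < threshold or frequency_hz <= 0:
        return None  # frame is below the amplitude threshold: nothing is drawn
    # Deviation from the centre tone, measured in cents (1200 cents = 1 octave).
    cents = 1200.0 * math.log2(frequency_hz / centre_hz)
    # Clamp the deviation so that the point stays inside the plane.
    cents = max(-max_cents, min(max_cents, cents))
    x = 0.5 + 0.5 * cents / max_cents   # centre tone sits in the middle
    y = min(1.0, amplitude)             # louder frames are drawn higher
    return (x, y)
```

In this sketch a frame exactly on the centre tone lands in the horizontal middle of the plane, and frames an octave above or below reach its right and left edges.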

While the initial instrumental material and its processing and spatialisation form the structural backbone of the piece, the intention with the additional layers was to manifest in sound (or image) a complementary (while somewhat different) notion of embodiment which would preserve the overall metaphorical identity of the Kármán vortex street: an expansive, cyclic energy-losing motion.

Computer symbols:

Manually triggered synthesizer engine (a low G1 sine-tone tuned at 48.9 Hertz)

Manually triggered “grains” (activation of a granular reverb engine, with two different transposition presets: one at 2, 4 and 6 octaves above and 2 octaves below the fundamental frequency, and one at 3, 9 and 12 octaves above).

Solo versions

The next stage in the composition process was to create a test-version of the piece. This solo performance version, using recorded fragments of the instrumental material and the signal processing and spatialisation computer system, was presented in performance as a way of both refining the proportions of each section of the time structure of the piece and developing different performance strategies for the electronic part.

To compensate for the lack of interpretative nuances in the pre-recorded instrumental fragments, I designed a collection of timbre and articulation gestures for the computer processing part relative to the instrumental collection, which could give a dedicated performer three key controls over the processing behaviour, while preserving a close dependence upon the interpretative nuances of the instrumental recording:

- Initialisation/interruption of overall gestures.

- Dynamic control over the speed and direction of predefined spatialisation trajectories.

- Dynamic control over the deviation in pitch of the processed sound in relation to the incoming instrumental gesture, producing the illusion of perspectival depth.

During the preliminary tests, it became clear that the inflexibility of recorded fragments of the instrumental sounds caused a somewhat predictable performance, so I replaced the initial sound material (the recorded instruments) with electronic sounds.

A first implementation of the solo computer version of KVSwalk was tested using a very rudimentary synthesis setup as its input instrument: a no-input mixer, capable of generating three distinct sets of controlled feedback, a resonator bank on a fixed-frequency setting, and a noise generator. At this point two additional symbols were added to the working score.

KVSwalkSOLO entailed the specific design and construction of a physical controller for the computer part. One of the two elements of this controller requires physical actions comparable to those used in various forms of traditional instrumental playing, while the other, which is used for controlling the spatialisation algorithm, is manipulated using more theatrical hand gestures. This portion of the project was a collaboration with Lex van den Broek, head of the technical department of the Royal Conservatoire of The Hague.6 The intention here was to create a closer relationship between physical actions and sonic results, given that this relationship has not always been taken into account in the design of computer music interfaces.

KVSwalkSOLO features a mixed setup consisting of an analogue and digital sound-generation engine and a custom controller capable of capturing at least four continuous-control signals, coupled to four synthesis engines and four discrete audio outputs. Ideally, the time and voice structure calls for five high-resolution physical sensors (one ultrasound, three pressure and one heat sensor), five synthesis engines routed to five filtering engines, a circular-motion spatial distribution system, and a 5.1 audio output setup.

KVSwalkSOLO was premiered during the Raflost 2010 festival in Reykjavik, Iceland, and subsequently performed in Florence, Padova, Narbonne, Santiago de Chile, São Paulo and Tallinn. After these experiences, I had the opportunity to focus again on the relationship between these new sound materials, the instrumental material (still as sound recordings) and the processing and spatialisation system. This second period of experimentation took place at the Pompeu Fabra University in Barcelona, using the Reactable system, an electronic music instrument and environment that has been in development since 2003 by a group of researchers at that university.7

The Reactable version of KVSwalk included recordings of the instrumental layers and an adaptation of the control and sound manipulations of the spatialisation algorithm designed for both the ensemble and solo versions. KVSwalkReactable was presented during the SMC 2010 conference in Barcelona, on 23 July 2010.

KVSwalkReactable. Performance by Juan Parra Cancino.

(The use of headphones is highly recommended when listening to this clip.)

6 For more on Lex van den Broek’s work, see www.ipson.nl.

7 For more information on the Reactable and its current, multiple iterations, visit http://www.reactable.com.

The purpose of the (networked) computer synthesis layer, generated and controlled through a dedicated computer network by Henry Vega, is to translate the above-described process back into sound. This was achieved by transforming data generated by the visual materials of Schwab – specifically pixel position and trajectories – into OSC8 streams of numbers, sent through a dedicated web server to Vega’s computer, in which these flows of data were sonified using a synthesis engine developed in the SuperCollider programming environment.
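To make the data path more concrete, the following sketch shows how a single pixel position might be packed into an OSC message using the binary layout of the OSC 1.0 specification (a padded address string, a padded type-tag string, then big-endian 32-bit floats). It is an illustration under stated assumptions, not the code used in the performance: the address "/kvs/pixel", the normalised coordinates and the helper names are hypothetical, and the actual transport through the dedicated server is not reproduced here.

```python
import struct

def osc_pad(data: bytes) -> bytes:
    """Null-terminate a string and pad it to a multiple of four bytes."""
    padded_len = (len(data) // 4 + 1) * 4
    return data.ljust(padded_len, b"\x00")

def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC message carrying only 32-bit float arguments."""
    type_tags = "," + "f" * len(values)          # e.g. ",ff" for two floats
    payload = b"".join(struct.pack(">f", v) for v in values)
    return (osc_pad(address.encode("ascii"))
            + osc_pad(type_tags.encode("ascii"))
            + payload)

# Hypothetical example: one pixel position, normalised to the range 0..1.
message = osc_message("/kvs/pixel", 0.25, 0.75)
```

A stream of such messages, one per analysed frame, is one plausible form for the "streams of numbers" that a synthesis engine on the receiving side could map onto its own parameters.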

The technical issues met in setting up this final system created the opportunity for a different way of rehearsing: it was only at the final sound check that performers in all three locations were audible in the concert hall, and even then the visual and auditory feedback available to the musicians was compromised by the needs of the visual layer of the piece. As a way of solving some of these issues, the computer performer on stage took on the responsibility of dynamically adapting the balance between the sounding layers to highlight the structure of the piece through what can be considered subtle conducting, thereby preserving control over amplification, spatial distribution, and the cueing of global sections.

KVSwalk was premiered by the ORCiM ensemble (Mieko Kanno, Catherine Laws, Stefan Östersjö, and Michael Schwab), Henry Vega and Chris Chafe, during the ORCiM Festival in Ghent, Belgium, on 16 September 2010.

The ensemble version

Parallel to the work on the solo versions, additional layers for the ensemble version of the piece were in the process of being created. The next two layers of the piece were creative collaborations with visual artist Michael Schwab and composer and computer performer Henry Vega. For the video layer, Michael Schwab (with the programming assistance of David Pirró) created a visual interpretation of the data generated from the sound processing layer of the piece. Combining the concept of the Kármán vortex street and the sound parameter information, Schwab’s layer brings together and blurs the distinction between the available conceptual and inceptual information in the process of translating them into the visual domain.

Once all the components (or layers) of the piece were in place, we rehearsed and prepared the final performance version. This process served to define the framework for a piece whose fragile nature slowly manifested itself: a somewhat fragmented set of processes of collaborative interaction, connected to one another by an implicit concept as well as by their technical co-dependence.

8 OSC stands for Open Sound Control. It is a protocol for networking computers and other multimedia digital devices. For more information visit http://opensoundcontrol.org/.

Reflection

The collaboration process and creation of KVSwalk aimed to bring sonification procedures into a live performance situation, by rereading sonification as a possible interpretation of the concept of embodiment in order to integrate the sound worlds of electronic and traditional instruments. The experience of conducting such a process left me with a number of issues that have informed my subsequent work. The use of both physically present and networked performers, following the same musical guidelines, helped me reflect upon how much of the semantic value given to the relationship between body-action and sounding-output is a cultural construction rather than an intrinsic aspect of music performance. I became aware that this issue in effect undermines the initial motivation for bringing the electronic performer back on stage, a strategy I relied upon in many of my early case studies. Other aspects, such as the timbral relationship between the instruments and the electronic processes, seemed to be more important. I am happy to accept that this aspect remains one of the unsolved motivations to keep producing and researching live computer music. How to present the participation of networked performers to the audience is an aspect that remains to be evaluated. Since there was no visual representation of these networked performers, the audience was not aware of their presence. Although this cannot be considered unsuccessful in itself, their contribution on a conceptual level – commenting on embodiment as physicality when no physicality was present – was lost.

Another aspect to reconsider in this project arose from the desire to incorporate too many simultaneous layers of interpretation of the notion of embodiment, which rendered any one of them practically ungraspable. Perhaps the most confusing element was the notion of embodiment as assimilation and transformation, and its connection not to sonification but to the translation of the sonified material into the visual domain. The visualisation of the procedure by which the spatial trajectories of the sound material were generated, designed by Michael Schwab and rendered in real time using software programmed by David Pirró, ended up absorbing most of the attention of the audience (a frequently met problem in interactive pieces with video), obscuring the presence of multiple layers of interaction between electronics, performers (on stage and through a network environment) and the space.

Removing the video layer in later performances has helped to focus attention on the interaction between the performers and on the fragile nature of the musical texture being generated as the core of the piece. Thanks to the experience gained during the collaborative processes spawned by this project, I have developed a number of strategies to tackle similar collaborative settings where the long periods of exchanging ideas and materials have been replaced by a set of initial structural, conceptual and material pivotal points.

An example of such condensed collaborative work was the reworking of KVSwalk for an ensemble of wind instruments consisting of Terri Hron (recorder), Solomiya Moroz (flute), and Krista Martynes (clarinet). A two-day workshop, conducted at the Matralab studio of Concordia University in Montreal, was dedicated to this version, and through a number of very simple tests it was possible to create the initial sound materials while simultaneously developing versions of the time structure. The performance took place at La Elástica, Montreal, Canada, on 16 March 2011.

I believe that the rapidity and success of this performance only became possible as a result of the long and complex collaborative setting leading to the initial ensemble version, as described above. It is the knowledge accumulated in the course of those initial collective sessions that now allows me to select and propose this piece to other ensembles with less luxurious time schedules than my original one.

KVSwalkMontreal: Terri Hron, recorder; Solomiya Moroz, flute; Krista Martynes, clarinet and Juan Parra Cancino, computer. "La Elástica", Montreal, 16 March 2011.

Evaluating this project in the light of adapting sonification strategies for a live performance situation, one can in retrospect focus on understanding embodiment as a concept with multiple meanings – as agent and as an inherent quality of the body – thus helping to articulate these meanings in music in relation to the procedures of sonification. In doing so, the separate layers of the piece, articulated by the different steps to sonification, in turn help illustrate certain aspects connected to embodiment in music – such as the relationship between physical action and sonic manifestation, and the idea of mapping as the strategy for acquisition, assimilation and transformation of data from one dimension into another. These elements will be explored further in other projects, such as Flux|Pattern and Multiple Paths.

References

Burk, Phil, Larry Polansky, Douglas Repetto, Mary Roberts and Dan Rockmore (2011). Music and Computers: A Theoretical and Historical Approach. Retrieved 4 November 2014, from http://music.columbia.edu/cmc/musicandcomputers/popups/chapter1/xbit_1_1.php

Coessens, Kathleen (2009). “Musical Performance and ‘Kairos’: Exploring the Time and Space of Artistic Resonance.” International Review of the Aesthetics and Sociology of Music 40/2: 269–81.

Espeyrac, Lionel and Stéphane Pascaud (2001). “Strouhal Instability – Von Karman Vortex Street.” Retrieved 4 November 2014, from http://hmf.enseeiht.fr/travaux/CD0102/travaux/optmfn/gpfmho/01-02/grp1/index.htm

Farr, William, Sara Price and Carey Jewitt (2012). “An Introduction to Embodiment and Digital Technology Research: Interdisciplinary Themes and Perspectives.” National Centre for Research Methods working paper (pp. 1–17). Economic and Social Research Council, UK.

Hermann, Thomas (2010). “Sonification – A Definition.” Retrieved 4 November 2014, from http://sonification.de/son/definition

Kármán, Theodore von (1954). Aerodynamics. New York: McGraw-Hill.

Lippe, Cort and Miller Puckette (1994). “Getting the Acoustic Parameters from a Live Performance.” In Irène Deliège (ed.), Proceedings of the Third International Conference for Music Perception and Cognition, University of Liège, 23–27 July (pp. 63–65). Liège: European Society for the Cognitive Sciences of Music.

Polansky, Larry (2002). “Manifestation and Sonification.” Retrieved 4 November 2014, from http://eamusic.dartmouth.edu/~larry/sonification.html

Wanderley, Marcelo (2001). “Gestural Control of Music.” Proceedings of International Workshop Human Supervision and Control in Engineering and Music. Kassel, Germany, 21–24 September. Retrieved 4 November 2014, from http://recherche.ircam.fr/equipes/analyse-synthese/wanderle/pub/kassel

Xenakis, Iannis (1992). Formalized Music: Thought and Mathematics in Composition. Revised edition. Stuyvesant, New York: Pendragon Press.