This is the end of the second semester

Click the image below to read my summary and find out how I will move forward.

Presentation, September 7, 2021

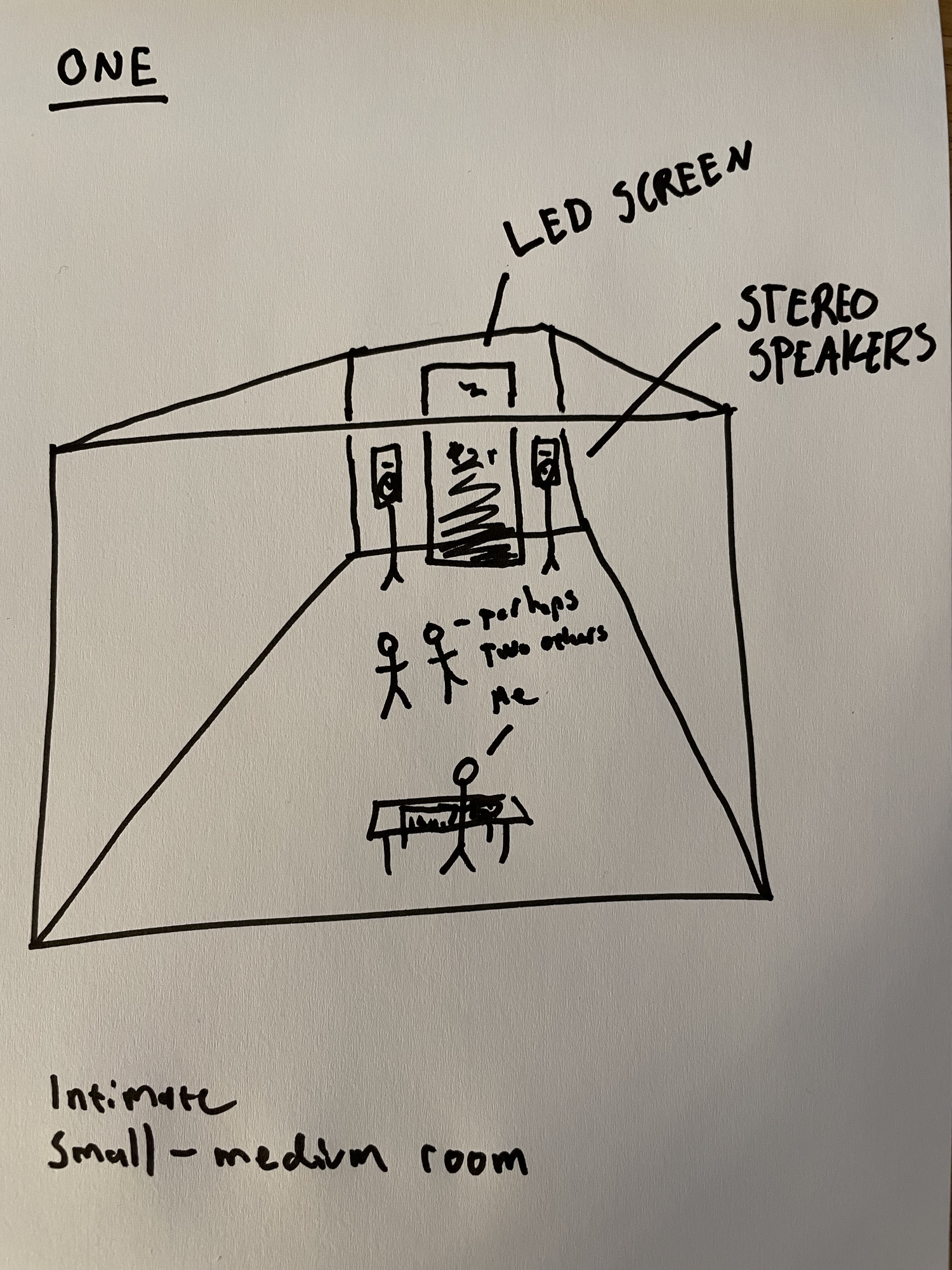

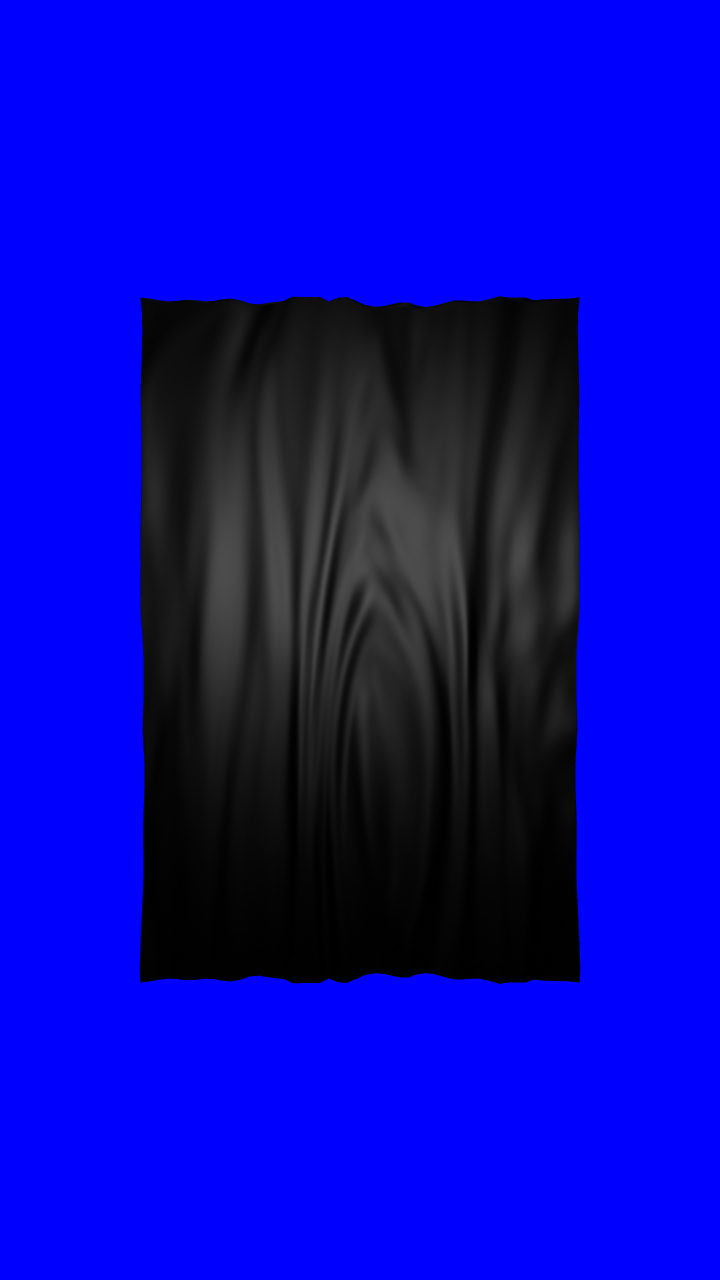

Hi everyone! In my project I am piecing together a generative audio-visual system. It is based on a modular synth setup in combination with the software TouchDesigner. The backbone of the system is clocked and controlled noise/randomness (both for visuals and audio). I use it to generate patterns that can be shaped, looped, and altered in different ways, or be left to flow as they arise.
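To make the idea of clocked, controlled randomness a bit more concrete, here is a minimal Python sketch. It is not the actual patch: the function name `clocked_pattern` and the parameters `loop_length` and `change_probability` are made up for illustration. On every clock tick one step of a short loop is either kept (the pattern repeats) or replaced with a new random value (the pattern flows on).

```python
import random

def clocked_pattern(steps, loop_length, change_probability, seed=0):
    """Return one value per clock tick, read from a short looping buffer.

    change_probability = 0.0 keeps the loop locked,
    change_probability = 1.0 replaces every step, so nothing repeats.
    """
    rng = random.Random(seed)
    loop = [rng.random() for _ in range(loop_length)]
    output = []
    for tick in range(steps):
        index = tick % loop_length
        # On each tick, decide by chance whether this step gets a new value.
        if rng.random() < change_probability:
            loop[index] = rng.random()
        output.append(loop[index])
    return output

locked = clocked_pattern(steps=16, loop_length=4, change_probability=0.0)
flowing = clocked_pattern(steps=16, loop_length=4, change_probability=1.0)
```

Values in between, for example `change_probability=0.1`, give a loop that slowly drifts away from where it started.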

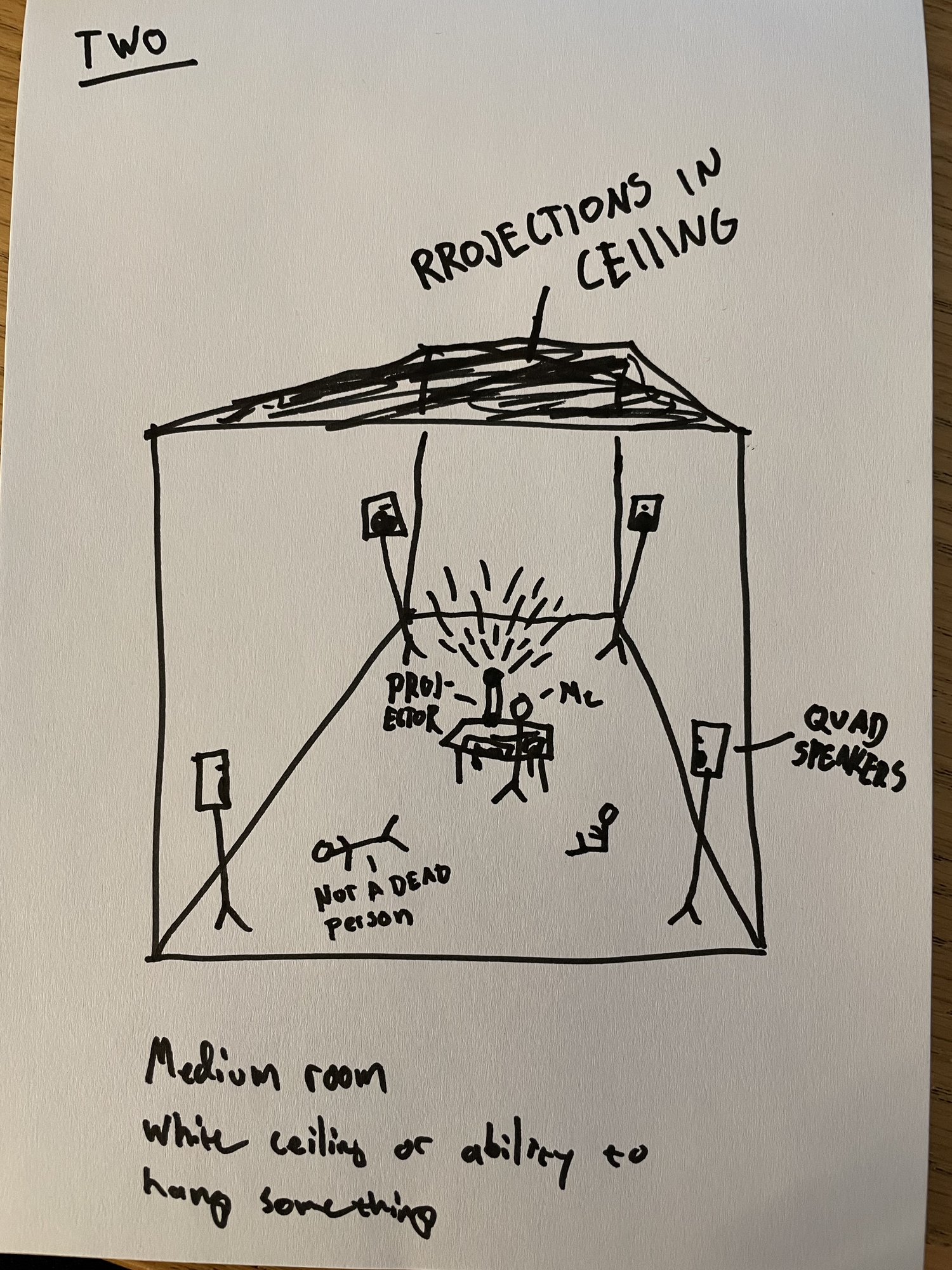

I am exploring ways to make the system interactive, so let us talk interactivity, visuals in venues, placement, projections, and so on. How would you like to experience something along the lines of the clip? How do you invite interaction without anything feeling forced?

Keyword: Temporal

New audiovisual inspiration:

Ko Hui

https://kohui.xyz/

T. Gowdy

https://t-g0wd-y.org/

Vincent Houze

My ambition going forward:

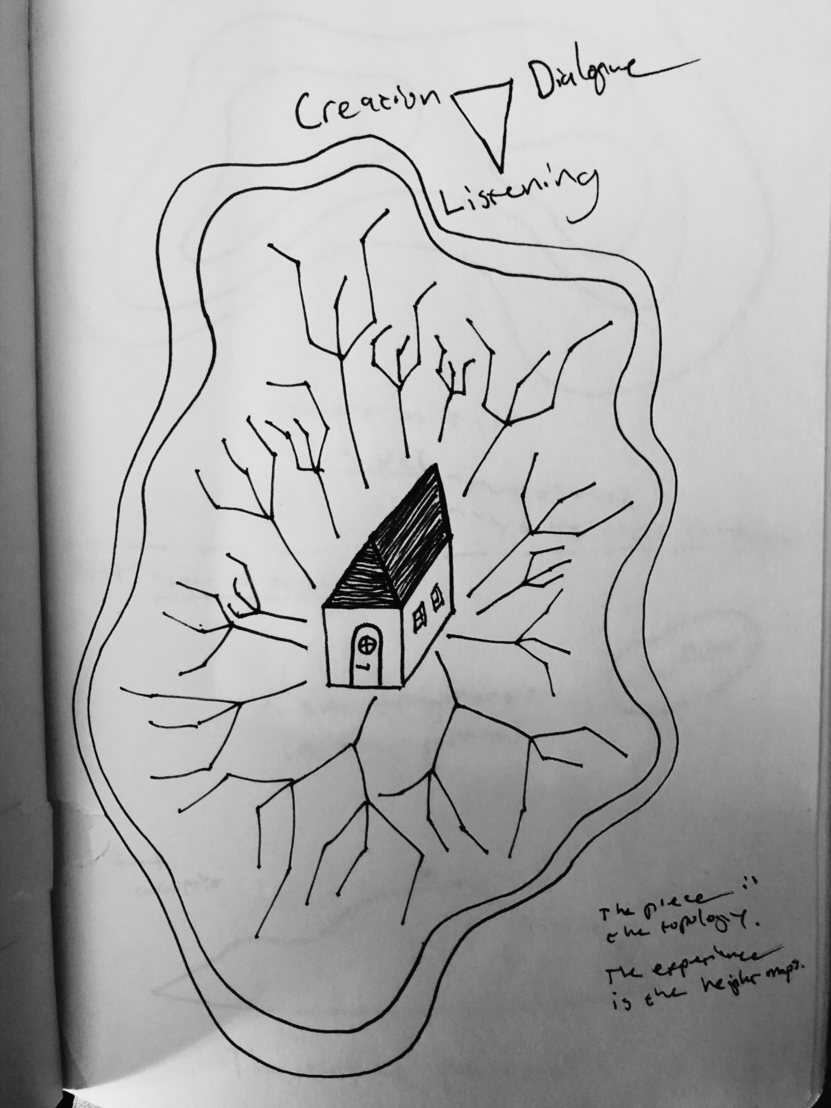

Concerts turned installations turned concerts...

A temporal conversation, some building blocks:

The music makes the visuals move. Motion in the visuals opens up visual and auditory trajectories, branches out, lives on.

A bunch of words:

Real-time composition/improvisation, temporal displacement, delay, granular processing. Cache. Frame rates, fragments, recent past, less recent past.

Time Machine TOP, noise, chance, logic, comparators, rhythm. Optical flow.
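The words cache, recent past and temporal displacement can be sketched in a few lines of Python. This is only a rough illustration of the general idea of a frame cache read with a per-pixel delay, not how TouchDesigner implements its Time Machine TOP. The class `FrameCache` and the `delay_map` argument are hypothetical names, and frames are plain nested lists to keep the example self-contained.

```python
from collections import deque

class FrameCache:
    """Keeps the most recent frames; index 0 is now, higher indices are older."""

    def __init__(self, size):
        self.frames = deque(maxlen=size)

    def push(self, frame):
        # The newest frame goes to the front, the oldest falls off the back.
        self.frames.appendleft(frame)

    def displaced(self, delay_map):
        """Build a frame where pixel (x, y) is read delay_map[y][x] frames back."""
        oldest = len(self.frames) - 1
        out = []
        for y, row in enumerate(delay_map):
            # Clamp the delay so it never reaches past the oldest cached frame.
            out.append([self.frames[min(d, oldest)][y][x] for x, d in enumerate(row)])
        return out

cache = FrameCache(size=4)
for t in range(4):
    cache.push([[t, t], [t, t]])  # a 2x2 frame filled with its own timestamp

delay_map = [[0, 1], [2, 3]]      # top left shows now, bottom right the less recent past
result = cache.displaced(delay_map)
```

Here `result` mixes four moments in time in a single frame: each pixel shows the timestamp of the frame it was read from.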

An exercise in agency

"Despite their obvious differences, what connects and equals humans and objects is the simple fact that they both influence others; they have agency. Agency is the capacity of an actant to change the environment. Through agency, humans and objects are able to interact." - Latour

The exercise

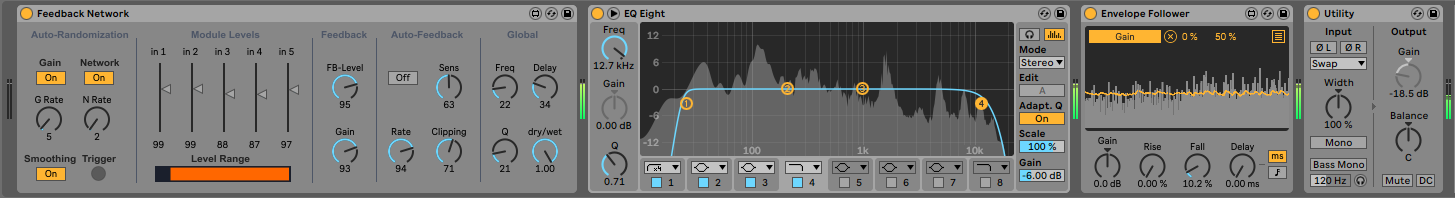

With limited prior knowledge, improvise with two instances of the Max for Live device Feedback Network. Play the feedback patch as an instrument inside a network of actants. The idea was to create a head-on, literal model of a musical improvisation seen as an actor-network.

The device: Feedback Network consists of five Feedback Units, each of which has an independent bandpass filter and delay line. The output from each Feedback Unit can be routed to any of the other five, at an independent volume. - Cycling '74
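As a way of reading that description, here is a small Python sketch of five units, each with a filter and a delay line, connected through a routing matrix of volumes. It is an assumption-laden toy and not the Cycling '74 device: the band-pass is a crude difference of two one-pole low-pass filters, and the names `FeedbackUnit`, `run_network`, `routing` and all parameter values are invented for the example.

```python
from collections import deque

class FeedbackUnit:
    def __init__(self, delay_samples, low_coeff, high_coeff):
        self.delay = deque([0.0] * delay_samples, maxlen=delay_samples)
        self.low_coeff = low_coeff    # smoothing amount of the slow low-pass
        self.high_coeff = high_coeff  # smoothing amount of the fast low-pass
        self.slow = 0.0
        self.fast = 0.0

    def process(self, sample):
        # Crude band-pass (assumption): fast low-pass minus slow low-pass.
        self.fast += self.high_coeff * (sample - self.fast)
        self.slow += self.low_coeff * (sample - self.slow)
        filtered = self.fast - self.slow
        delayed = self.delay[0]       # the oldest sample leaves the delay line
        self.delay.append(filtered)   # the newest sample enters it
        return delayed

def run_network(units, routing, excitation, steps):
    """routing[i][j] is the volume from unit i's output into unit j's input."""
    count = len(units)
    outputs = [0.0] * count
    mix = []
    for step in range(steps):
        inputs = []
        for j in range(count):
            fed_back = sum(routing[i][j] * outputs[i] for i in range(count))
            inputs.append(excitation(step, j) + fed_back)
        outputs = [unit.process(x) for unit, x in zip(units, inputs)]
        mix.append(sum(outputs))
    return mix

units = [FeedbackUnit(delay_samples=3 + i, low_coeff=0.05, high_coeff=0.5)
         for i in range(5)]

# Route each unit into the next one in a ring, at half volume.
routing = [[0.0] * 5 for _ in range(5)]
for i in range(5):
    routing[i][(i + 1) % 5] = 0.5

def impulse(step, unit_index):
    # A single click into the first unit sets the network in motion.
    return 1.0 if step == 0 and unit_index == 0 else 0.0

signal = run_network(units, routing, impulse, steps=64)
```

Changing single values in `routing` is the code equivalent of turning one of the routing volumes in the device: a small change in one connection changes how the whole network rings.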

Actants in the network

H1 - Human Julius

H2 - Human Joakim

C1 - MIDI controller 1 (modwheel, pitchbend and slider on an M-Audio keyboard)

C2 - MIDI controller 2 (three encoders on an Ableton Push 1)

F1 - Feedback network 1

F2 - Feedback network 2

EF1 - Envelope follower 1 + amp

EF2 - Envelope follower 2 + amp

HP1 - Headphones 1

HP2 - Headphones 2

+++

"Anything that does modify a state of affairs by making a difference is an actant...

each actant is at the same time also a network." - Latour

Feedback network - The human designer of the device, the digital nature, the auto-randomization, the interface etc.

Human Julius (?)

Submilieus adapted to improvisation

The external milieu

Comprises the musicians’ surroundings, the venue or studio, the acoustics of the room, the equipment, etc. Furthermore, it includes their socio-cultural and concrete musical backgrounds.

The interior milieu

Refers to what actually characterizes these musicians: musical experiences, playing techniques, personal idioms, and, more generally, their ways of being.

The intermediate milieu

Regulates the transformations and exchanges between the inside and the outside world. Through new information, aural and visual stimulation, and the sensations these induce, musicians relate, to a greater or lesser degree, to their external environment.

The annexed milieu

A segment of the external milieu with which the interior one establishes connections and energy exchange. Much of the operating power in improvisations is based on a more or less direct connection with external milieus, on an energy exchange between musicians, but also between musician and instrument, musician and acoustics, musician and cultural background.

- (Cobussen via Deleuze and Guattari in Costa)