{2022-03-22}

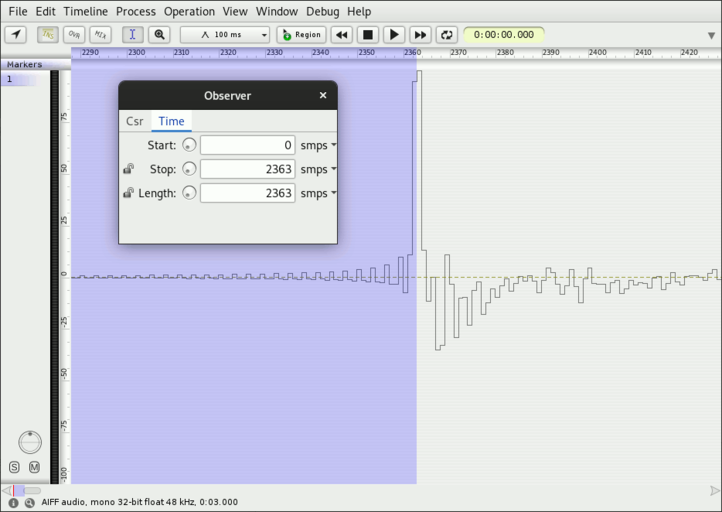

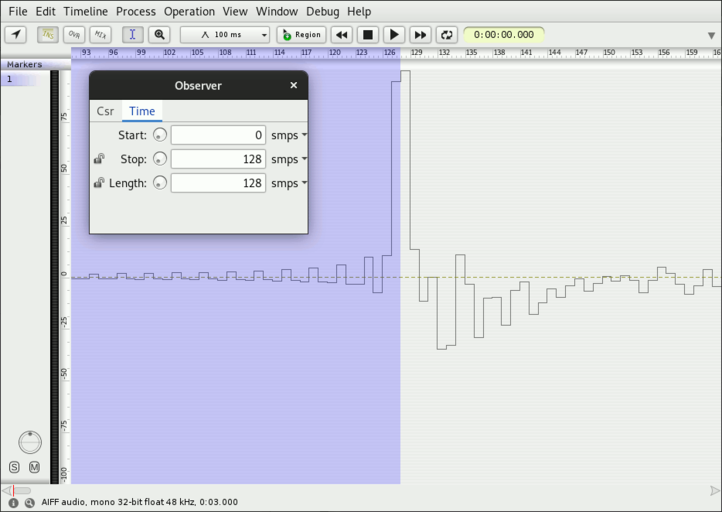

Quick (two seconds perhaps) IR scans, to determine the physical environment. Smooth (windowed max), centre clipping, determine local maxima, and repeat a few times to filter those that persist (within a few sample frames in terms of their location).
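
The scan steps can be sketched in plain Scala, independent of FScape. This is a minimal sketch, not the actual signal graph: the object name `PeakScan`, the helper names and the persistence rule (a peak of the first scan must reappear within `tol` frames in every later scan) are assumptions for illustration. The window sizes 3 and 32 are taken from the FScape code in the next entry.

```scala
object PeakScan {
  /** Sliding maximum over a centred window of `len` samples (truncated at the edges). */
  def windowedMax(in: Vector[Double], len: Int): Vector[Double] = {
    val half = len / 2
    in.indices.toVector.map { i =>
      in.slice(math.max(0, i - half), math.min(in.size, i + half + 1)).max
    }
  }

  /** Centre clipping: values within +-thresh become zero, all others shrink by thresh. */
  def centreClip(in: Vector[Double], thresh: Double): Vector[Double] =
    in.map { x =>
      if (x > thresh) x - thresh
      else if (x < -thresh) x + thresh
      else 0.0
    }

  /** Indices holding a positive maximum of their `size`-sample neighbourhood.
    * On a plateau only the first index is reported.
    */
  def localMaxima(in: Vector[Double], size: Int): Vector[Int] = {
    val half = size / 2
    in.indices.toVector.filter { i =>
      val lo = math.max(0, i - half)
      val w  = in.slice(lo, math.min(in.size, i + half + 1))
      val m  = w.max
      in(i) > 0.0 && in(i) == m && w.indexOf(m) == i - lo
    }
  }

  /** Smooth, centre clip, then pick local maxima of the rectified signal. */
  def peaks(in: Vector[Double], thresh: Double): Vector[Int] =
    localMaxima(centreClip(windowedMax(in.map(x => math.abs(x)), 3), thresh), 32)

  /** Keeps peaks of the first scan that reappear within `tol` frames in all other scans. */
  def persistent(scans: Seq[Vector[Int]], tol: Int): Vector[Int] =
    scans.headOption.fold(Vector.empty[Int]) { first =>
      first.filter(p => scans.tail.forall(_.exists(q => math.abs(q - p) <= tol)))
    }
}
```

Because the windowed maximum widens each spike into a three-sample plateau, the reported index sits one frame before the original spike; for a persistence tolerance of a few frames that offset should not matter.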

From those positions along with their strengths, define an “identity timbre” (it could be re-run after a while, or just when waking up).

{2022-03-23}

Simple code for finding distances in centimetres, and test code for merging two sets of measurements, finding “duplicate” occurrences under a given tolerance. Had to add the foldLeft operation to Lucre.

    def mkIn() = AudioFileIn("in")

    val in     = mkIn()
    val len    = in.numFrames
    val smooth = SlidingPercentile(in, len = 3, frac = 1) +
      WhiteNoise(-60.dbAmp).take(len) // FScape issue 79
    val diff   = smooth.excess(-40.dbAmp).abs // in - smooth
    val loc    = DetectLocalMax(/* 0.0 +: */ diff, size = 32) // .tail
    val locF   = Frames(mkIn())
    val frames = locF.filter(loc)
    MkIntVector("pos", frames)
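
The conversion from peak positions (in sample frames) to centimetres is not shown here. A minimal sketch of how it could look, assuming a speed of sound of about 343 m/s and, for reflections, a round trip from the node to the surface and back. The names `EchoDistance`, `framesToCm`, `latencyFrames` and `roundTrip` are hypothetical, and the sample rate has to be supplied by the caller.

```scala
object EchoDistance {
  // Assumption: roughly 343 m/s in air at 20 degrees Celsius, expressed in cm/s.
  val SpeedOfSoundCmPerSec = 34300.0

  /** Converts a peak position in sample frames to a distance in centimetres.
    *
    * @param latencyFrames system latency to subtract before converting (hypothetical parameter)
    * @param roundTrip     if true, halve the path because the sound travels there and back
    */
  def framesToCm(frames: Int, sampleRate: Double, latencyFrames: Int = 0,
                 roundTrip: Boolean = true): Double = {
    val travelSec = (frames - latencyFrames) / sampleRate
    val pathCm    = travelSec * SpeedOfSoundCmPerSec
    if (roundTrip) pathCm / 2 else pathCm
  }
}
```

Whether to halve the path depends on what a peak represents: a reflection travels both ways, the direct signal from speaker to microphone does not.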

    // two measurements, results in centimetres
    val x1: Ex[Seq[Double]] = Seq(
      0.7, 28.6, 55.7, 100.8, 134.3, 177.9, 207.9, 274.4, 420.9, 495.2, 527.4, 568.8, 652.4, 735.3, 860.4, 945.4, 985.4, 1020.4, 1106.9, 1387.0
    )
    val x2: Ex[Seq[Double]] = Seq(
      0.7, 30.0, 55.0, 96.5, 119.3, 147.9, 179.4, 208.7, 274.4, 420.2, 495.2, 527.4, 568.8, 653.8, 734.6, 810.3, 860.4, 945.4, 984.7, 1020.4, 1107.6, 1387.7
    )

    val tolerance = 10.0 // cm

    val groups = (x1 ++ x2).sorted.foldLeft(Seq.empty[Seq[Double]]) {
      case (aggr, x) =>
        val last  = aggr.lastOption.getOrElse(Nil)
        val merge = last.forall { y => (y absDif x) < tolerance }
        If (merge) Then {
          val init = aggr.dropRight(1) // init
          init :+ (last :+ x)
        } Else {
          aggr :+ Seq(x)
        }
    }

    val found = groups.filter(_.size > 1)
    val mean  = found.map(xs => xs.sum / xs.size) // .mean

    Act(
      PrintLn("Found " ++ mean.size.toStr ++ " stable positions:"),
      PrintLn(mean.map(x => "%1.1f".format(x)).mkString(", "))
    )
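
For comparison, the same grouping can be written in plain Scala collections, which runs without Lucre. This is a sketch meant to mirror the Ex program above; the object name `StablePositions` and the method names are made up for the example.

```scala
object StablePositions {
  /** Walks the sorted values and appends each one to the last group if it lies
    * within `tolerance` of every member of that group; otherwise starts a new group.
    */
  def group(xs: Seq[Double], tolerance: Double): Vector[Vector[Double]] =
    xs.sorted.foldLeft(Vector.empty[Vector[Double]]) { (aggr, x) =>
      aggr.lastOption match {
        case Some(last) if last.forall(y => math.abs(y - x) < tolerance) =>
          aggr.init :+ (last :+ x)
        case _ =>
          aggr :+ Vector(x)
      }
    }

  /** Means of all groups with more than one member, i.e. the "duplicate" positions. */
  def stable(a: Seq[Double], b: Seq[Double], tolerance: Double): Vector[Double] =
    group(a ++ b, tolerance).filter(_.size > 1).map(g => g.sum / g.size)
}
```

Note that the two input sets are concatenated before grouping, so two peaks of the same measurement that lie within the tolerance of each other also count as a group.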

{2022-06-04}

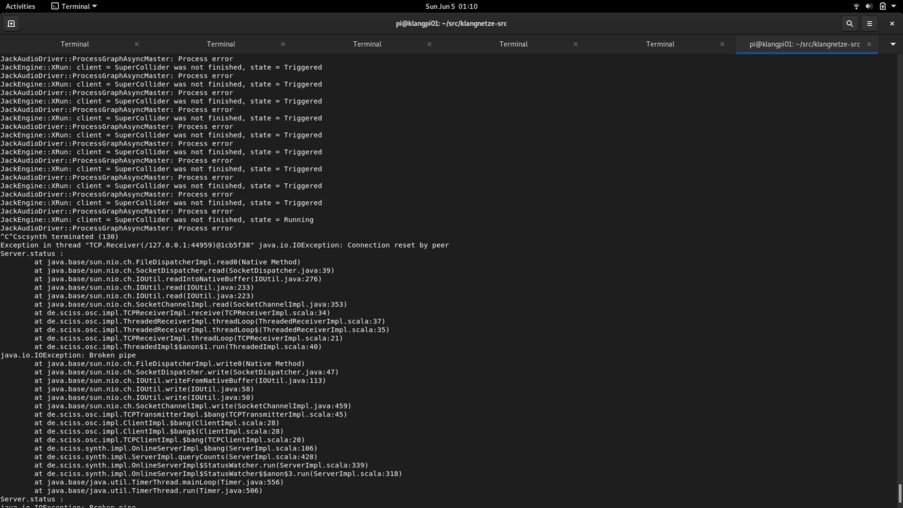

I’m giving up on the Pi Zero 2W. The machine is constantly failing, crashing, hanging, the audio popping, the network becoming unresponsive. I suspect this is primarily a result of the poor decision to starve this computer with 512 MB of RAM; I remember the Pi 3 was already running into bottlenecks due to its 1 GB of RAM, but at least you could make it work. Here, no chance. That means I certainly won't be able to run a SoundProcesses workspace that contains any FScape processing or Control objects. Any sort of multi-tasking (scsynth + X) causes massive problems.

That means, I have to rethink the piece in a very simple manner, something that runs within a single SynthDef basically. It's kind of sad because I thought the node-to-node communication could have been very interesting, but perhaps this remains an approach to be used elsewhere on a Pi 4 based project. I’m going to stop for today, after updating various libraries to avoid disk I/O, updates that are no longer useful as I will have to abandon SoundProcesses altogether.

I wonder if I should revert to creating a sort of multi-channel (but asynchronous) electroacoustic composition now for the network.

{2022-06-05}

It's possible to run the IR measurements with direct code, avoiding the Control/Ex code. Thus, I created a separate repository for the code. It took me a long time to figure out the idiosyncrasies of the Pi's sound card. It seems to go to “sleep” when there is no signal, even though SuperCollider and Jack are running. As a result, there are severe delays and gaps when beginning to play sounds. A workaround is to place a -70 dB noise signal on the line. There are still inexplicable variations in the latency between playing through the speaker and picking up the signal with the microphone, which should not happen and which do not happen on the laptop. This makes comparing recordings even harder.
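
To put the noise level into numbers: decibels map to linear amplitude as 10^(dB/20), so -70 dB is a factor of about 0.00032. A small sketch of the conversion and of a noise buffer at that level follows; `KeepAlive`, `noise` and the seed parameter are hypothetical names, and on the Pi the noise would of course be generated inside the synth rather than as a Scala vector.

```scala
object KeepAlive {
  /** Converts decibels to a linear amplitude factor (0 dB corresponds to 1.0). */
  def dbAmp(db: Double): Double = math.pow(10.0, db / 20.0)

  /** Uniform white noise scaled to the given level; `seed` makes the sketch repeatable. */
  def noise(numFrames: Int, db: Double, seed: Long = 0L): Vector[Double] = {
    val rnd = new scala.util.Random(seed)
    val amp = dbAmp(db)
    Vector.fill(numFrames)((rnd.nextDouble() * 2 - 1) * amp)
  }
}
```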

{2022-06-06}

It seems to produce reasonable values standing at the outside wall (next to the door) of Reagenz:

    Found 11 stable positions (cm):
    68.3, 168.0, 279.1, 457.1, 595.7, 729.5, 850.1, 943.7, 1286.7, 1424.6, 1551.1

Annotated peaks, from nearest to farthest: direct, parked car, balcony.

{2022-06-06}

I got to the bottom of the latency jitter problem. Apparently it has nothing to do with the sound card, but with a bug in SuperCollider on the Pi Zero which leads to gaps at the start of the DiskIn UGen. There is a small chance this is a bug in SoundProcesses, although the OSC dumps indicate that I'm correctly waiting for the buffer synchronisation. In any case, a workaround is to load the impulse response buffer fully first and to use PlayBuf instead of DiskIn. This also makes the background noise idea superfluous. With stable repetitions, I can now calibrate the latency of the system, and at least within my studio space, I get nice numbers. The centre clipping threshold is automatically adjusted if there are too few or too many peaks.
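
The automatic threshold adjustment could look roughly like the following. This is a sketch under assumptions, not the code from the repository: the step size, the iteration limit and all names (`AdaptiveThreshold`, `find`, `countPeaks`) are invented for illustration. The peak counter is passed in as a function of the threshold in dB.

```scala
object AdaptiveThreshold {
  /** Moves the threshold in `stepDb` steps until the peak count falls into
    * `minPeaks` to `maxPeaks`, or until `maxIter` adjustments have been made.
    */
  def find(countPeaks: Double => Int, startDb: Double, minPeaks: Int, maxPeaks: Int,
           stepDb: Double = 3.0, maxIter: Int = 20): Double = {
    @annotation.tailrec
    def loop(db: Double, iter: Int): Double = {
      val n = countPeaks(db)
      if (iter >= maxIter) db
      else if (n < minPeaks) loop(db - stepDb, iter + 1) // too few peaks: lower the threshold
      else if (n > maxPeaks) loop(db + stepDb, iter + 1) // too many peaks: raise the threshold
      else db
    }
    loop(startDb, 0)
  }
}
```

The iteration limit is there because a fixed step can jump over the acceptable range and oscillate; in that case the sketch simply returns the last threshold tried.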

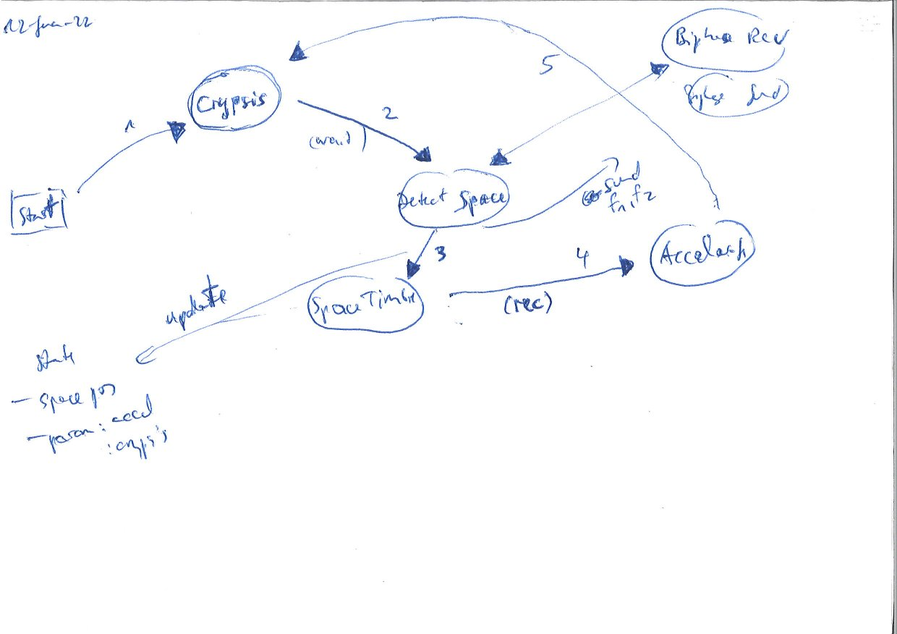

{2022-06-21}

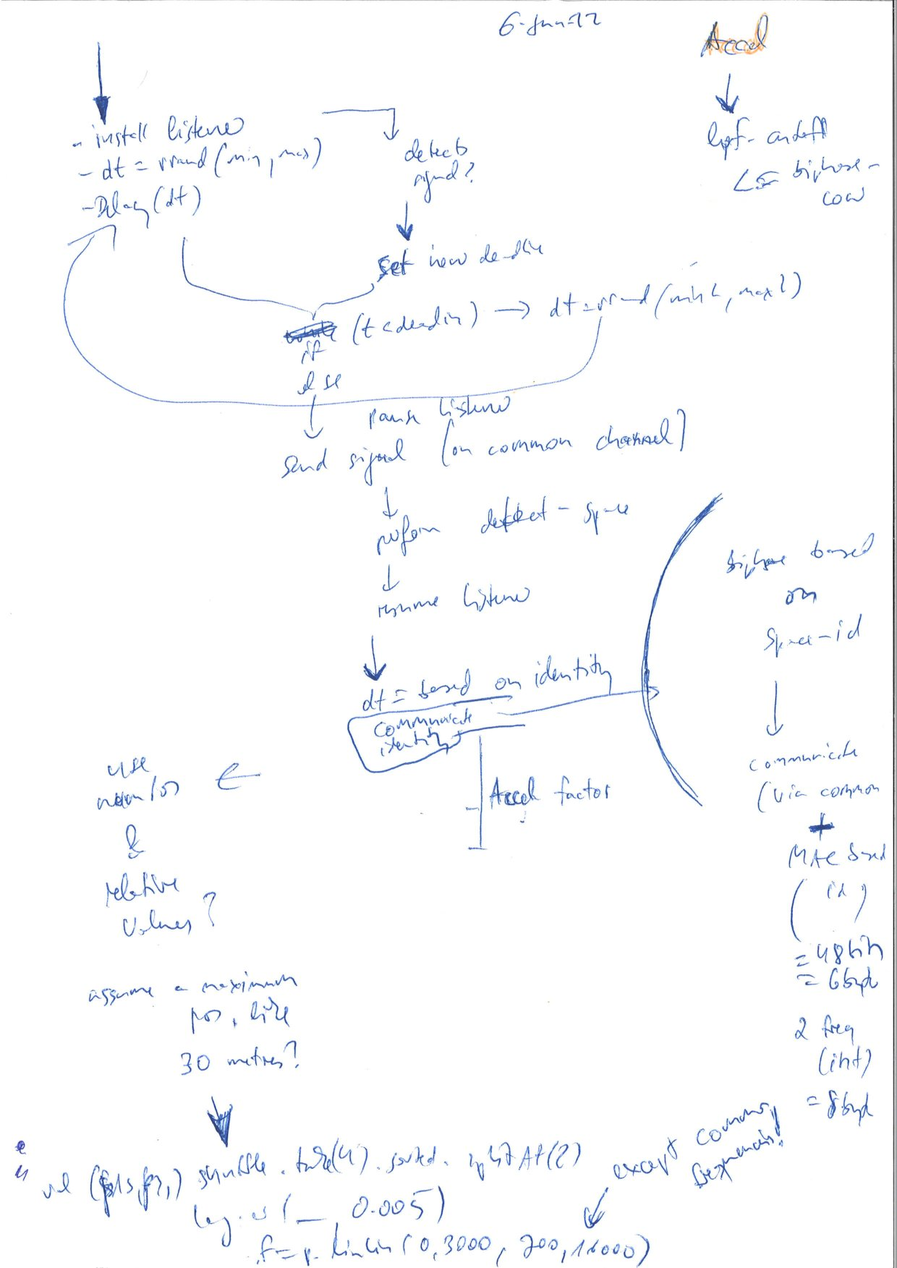

So that idea of the frequencies from the handwritten sketch is:

    val (Seq(p1b, p2b), Seq(p1a, p2a)) = posCmSeq.shuffle.take(4).sorted.splitAt(2)
    val f1a = p1a.linLin(spaceMinCm, spaceMaxCm, idMinFreq, idMaxFreq)
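
Spelled out in plain Scala, the two lines pick four random positions, sort them, split them into a lower and an upper pair, and map a position linearly from the distance range to the frequency range. The sketch below assumes a linear mapping without clipping and uses `scala.util.Random` in place of the `shuffle` extension; `IdentityFreq` and `pickFour` are hypothetical names.

```scala
object IdentityFreq {
  /** Linear mapping of x from [inLo, inHi] to [outLo, outHi], without clipping. */
  def linLin(x: Double, inLo: Double, inHi: Double, outLo: Double, outHi: Double): Double =
    (x - inLo) / (inHi - inLo) * (outHi - outLo) + outLo

  /** Four random positions, sorted and split into the two lower and the two upper values. */
  def pickFour(posCm: Seq[Double], rnd: scala.util.Random): (Seq[Double], Seq[Double]) =
    rnd.shuffle(posCm).take(4).sorted.splitAt(2)
}
```

For example, with a hypothetical distance range of 0 to 1000 cm and a frequency range of 200 to 400 Hz, a position at 500 cm maps to 300 Hz.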

{2022-06-13}

A basic “machine” (state machine) loop is working now, although the accelerate algorithm is not yet integrated (because I think it should be always accumulating, but only playing when the corresponding stage is set). I wonder if I can thus run both the accelerate-rec algorithm and the biphase-dec algorithm all the time without overloading the Pi.

The next steps would be to announce the “individual communication frequencies” based on the space detection, and to listen to them from the other nodes (register the announced frequencies). The crypsis algorithm should sound more like breathing, i.e. a longer release and perhaps a resonance in the attack. The biphase-enc should probably also fade in and out with more idle bits.

{2022-06-19}

I am working on the refinements of the machine now, i.e. departing from the basic structure and working out parametric, sonic, and algorithmic details, improving the compositional form. Still on my list of things to do: adding silent stages to thin out the texture a bit, and communicating the individual frequencies (and possibly responding to them).

I slowed down the crypsis modulation frequency and added a filter envelope, making it appear more like a sort of breathing. I also adjusted the pulse envelope; sometimes it seems to produce a slight feedback now, but I think I like it and will leave it like this. Also very interesting is how the crypsis now picks up the space timbre from neighbouring nodes, which effectively adds a spatial pulse to them (the perceived sonic location shifts back and forth).