The Metaboliser is an artistic audio-visual work whose development was triggered by a reaction against a certain way of conceiving of and operating computers that is common in many branches of the digital arts: a conception which relegates these machines to the mere accomplishment of a predefined task, mostly that of generating a perceptible audio or visual form for some kind of data or for the ideas of the artists. That is, computers are considered a means of finding a solution to a problem of representation of something external.

Implicit in this approach is an understanding of computers as neutral or transparent machines: tools that do not interfere with what is represented and, most importantly, obey and closely follow the operator’s wishes and commands. Computers are required to perform their transformations without leaving a trace of their operation in the result.

I believe there is a similar notion in the relation scientists have with the highly technological experimental devices they use in some cases. Most notably at CERN, researchers build some of the most complex machines on earth in order to capture phenomena that otherwise could not be observed. They use all of their knowledge of the laws of physics to do this. But, when these machines have accomplished their recording task and the data of the phenomenon has been captured, something interesting happens to the experimental apparatus. In all subsequent analyses, it somehow “disappears” from the considered chain of operations that led to that very data. Therefore, there is nothing left anymore in between the data and the phenomenon: the data is a trace of “only” the phenomenon. What is observed and the result of the observation are in an isomorphic relation: the data is, in principle, the phenomenon.

Considering technological devices as tools that “dissolve” in the hands of those who operate them is a habitus deeply rooted in our society. In general, technological devices such as computers and smartphones are denied any specific material properties other than those of some sort of fetish. These devices are required and expected to provide unbiased, globally valid, “true” images and information to their users, and therefore should not interfere with or in any way “touch” that which merely passes through them. If they had any kind of materiality, material being something dirty (probably a consequence of Catholic indoctrination), it would undoubtedly “soil” the illusion of perfectly intact information they are providing.

This habitus, no doubt, serves to reinforce another deeply ingrained narrative: that of some romantic ideal of scientists and artists who think and work alone, removed from society, pure and cleansed of mundane influences, their discoveries and their art being the product of their genius. In this figure, there is of course no place to acknowledge the effects of the material peculiarities of the tools involved in the process and their repercussions. Scientists have to face data that is “objective”. Artists realise ideas that are “perfect”, immaterial and ideal. Perfect, immaterial tools play the necessary counterpart to this Utopia: they are required in order to keep these works elevated above earthly materiality. It seems anachronistic, but this kind of understanding of the scientists’ and artists’ work is, I believe, still the most widespread and, most importantly, it is the narrative that many scientists and artists present to the general public.

{function: Description, artwork: TheMataboliser}

There are certainly many good reasons why this narrative is still so strong (I suspect mainly tied to funding strategies and criteria), but my interest lies in unveiling the consequences of this kind of thinking. In particular, I would like to report on some thoughts that some physicists are beginning to formulate, especially in highly specialised fields such as particle physics. Contrary to the perhaps somewhat overdrawn situation described above, researchers are developing an awareness of a dramatic effect at the core of their experimental practice: they can observe only what they want to see. Their experimental apparatus is so specialised and so imbued with the physics that is known that all that is not known, the uncharted spaces of physics, is necessarily filtered out. In other words, physicists are realising that the machines they construct are not able to see phenomena that lie outside the spectrum of the possibilities they already know of. Their technological devices, shaped in their construction by current theories as well as by the analysis methods employed, are strongly deforming and, most importantly, limiting their capacity for observation.

I believe that the source of this limitation is not to be found in the technological device per se. On the contrary, its origin lies at the core of the understanding of technological devices as immaterial, perfect manipulators and transparent displays: in pursuing an image of tools under unconditional and total control, this limitation reflects back on the users. It is not only that the computer is limited in its operation to an ideal “pure” machine: the users themselves are similarly limited to using and conceiving of computers in such a way, in order not to have to question their immateriality. In a classic second-order cybernetic inversion, in which the controller becomes equally controlled, the ideal of control is the origin of limitation: the ideal of immaterial technology becomes the source of limitation for digital technologies.

Stripping computers of the ideal of the immaterial transformer means not only liberating them from the duties of transparent re-presenters, but also emancipating their users from the boundaries of a thinking constrained by the norms of neutrality, perfection and transparency: criteria which are external to the work itself, predetermined and applied a priori. Restoring a material quality to computers means acknowledging that their operation is rooted in the reality they are embedded in and in the interaction with whoever operates them, or more dramatically, in their entanglement; that this entanglement is local and specific to the particular configuration of actors in it rather than globally valid; that it necessitates the material action that two agents perform on each other; and that it admits the reality of processes of approximation, deviation and error as not only inherent in such an interaction, but as its essential generative core.


Moving towards a materiality of computation can be understood as a way to open spaces of possibility and experimentation, especially in the arts. In my understanding, this movement is strongly connected with a de-idealisation of the artist’s figure and role as a singular actor, somehow removed from his or her context. At the core of this thinking is a perspective in which “things” (agents or objects) are always in some sort of interaction with each other, in which this interaction leaves traces on them, and in which these interactions form entanglements which cannot be extricated. Eventually, the artist, his or her tools, their social context etc. all form a network of interconnected actors, all interacting and leaving marks on each other.

The Metaboliser is an artistic work that embodies the ideas of networks and entanglement in different ways. It is a computational artefact that does not have a given solution to achieve: once it is started, it is a potentially endless process. Its temporal evolution is its primary sensible dimension: rather than resulting in some static object which could be experienced on its own, the metaboliser continuously produces changing visual and auditory forms that cannot be extracted from their temporal sequence. A “snapshot” of its evolution cannot be separated from the interconnection of its past and its future. The experience of the unfolding and development of its behaviour, the particular way in which subsequent states relate to each other: this is the mode of perception the work addresses.

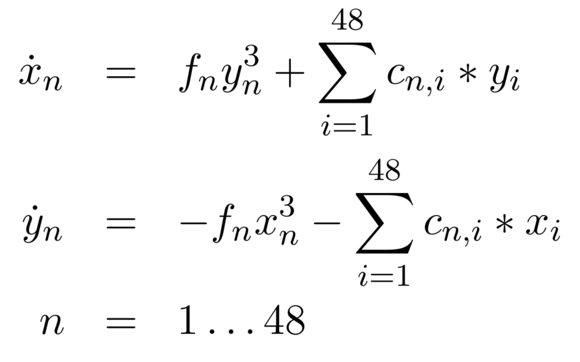

In more detail, the work consists of the simulation (technically, the numerical integration) of the dynamical system of a network of 48 interacting non-linear oscillators. In mathematical terms such systems are formulated as a set of equations or rules describing how the system evolves from one moment to the next. In these terms, the metaboliser system could be written as:

Where the 48×48 matrix $c_{ij}$ contains the coupling strengths between each oscillator pair and the factor $f_n$ is the frequency of the $n$-th oscillator. These systems are usually simulated using a recursive algorithmic scheme that consists of the repeated application of the above rules to successive system states. That is, given the system’s state at a specific time, the application of the rules of evolution results in a new, evolved state at a later time; applying the same rules to this later state then gives the next, and so on.
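The recursive scheme described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code of the work itself: the rule of evolution used here is a Kuramoto-type phase model, $d\theta_i/dt = 2\pi f_i + \sum_j c_{ij} \sin(\theta_j - \theta_i)$, chosen because it is a common textbook example of coupled non-linear oscillators, and the integration is a plain forward-Euler step. The function names (`make_network`, `derivative`, `step`, `run`), the random parameter ranges and the step size `dt` are all hypothetical.

```python
import math
import random

N = 48  # number of oscillators, as in the text


def make_network(seed=0):
    """Random frequencies f_n and coupling matrix c_ij (illustrative values only)."""
    rng = random.Random(seed)
    freqs = [rng.uniform(0.5, 2.0) for _ in range(N)]
    coupling = [
        [0.0 if i == j else rng.uniform(-0.1, 0.1) for j in range(N)]
        for i in range(N)
    ]
    return freqs, coupling


def derivative(phases, freqs, coupling):
    """Assumed Kuramoto-type rule, not necessarily the one used in the work."""
    n = len(phases)
    return [
        2.0 * math.pi * freqs[i]
        + sum(coupling[i][j] * math.sin(phases[j] - phases[i]) for j in range(n))
        for i in range(n)
    ]


def step(phases, freqs, coupling, dt=0.01):
    """One forward-Euler step: new state = old state + dt * rate of change."""
    rates = derivative(phases, freqs, coupling)
    return [(p + dt * r) % (2.0 * math.pi) for p, r in zip(phases, rates)]


def run(phases, freqs, coupling, n_steps, dt=0.01):
    """Apply the same rule again and again to successive states."""
    for _ in range(n_steps):
        phases = step(phases, freqs, coupling, dt)
    return phases
```

Calling `run([0.0] * N, *make_network(), n_steps=1000)` returns the state after a thousand applications of the rule. Nothing in the loop has a natural stopping point: the process simply continues for as long as it is iterated.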

The metaboliser does not just develop on its own; it is also influenced by the input it receives. This input can be any kind of data. The metaboliser makes no distinction with respect to the data’s origin, supposed meaning or interpretation. It just takes the set of numbers it is presented with and “digests” it: this process of “metabolism” results in a modification of the frequency and coupling factors of some elements in the system, depending on the characteristics of the data set, i.e. how many elements it contains and their values. Thus, the metaboliser takes in and absorbs the data into its very structure: it is modified by it, and its future evolution will be affected by every data set that “sediments” into it. In the digestion process the input data completely disappears as a recognisable entity; once it is injected into the system, there is no way to take it out again. No isomorphic relation exists between the output the metaboliser produces visually and auditorily and its input. It is not an analysis tool that finds and displays some qualities of its input. Any specific form it produces will be strongly determined by its past inputs. The data becomes part of its behaviour and radically entangled within the metaboliser’s systems.
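To make the idea of “digestion” more tangible, here is a minimal sketch of how a set of numbers could be folded into the frequencies and coupling factors of such a network. The text does not specify the actual mapping, so everything here is an assumption made for illustration: the function name `digest`, the `rate` parameter, the use of `tanh` to squash arbitrary values, and the way the size of the data set selects which elements are touched.

```python
import math

N = 48  # number of oscillators, as in the text


def digest(data, freqs, coupling, rate=0.05):
    """Hypothetical 'metabolism': fold a list of numbers into f_n and c_ij.

    Returns modified copies; the input values themselves are stored nowhere.
    """
    freqs = list(freqs)
    coupling = [row[:] for row in coupling]
    if not data:
        return freqs, coupling
    # How many oscillators are affected depends on the size of the data set.
    n_touched = min(len(data), N)
    for k, value in enumerate(data):
        i = k % n_touched                # oscillator whose frequency is nudged
        j = (i + 1 + len(data)) % N      # coupling partner, picked from the data size
        nudge = rate * math.tanh(value)  # squash any value into (-rate, rate)
        freqs[i] *= 1.0 + nudge
        if i != j:
            coupling[i][j] += nudge
    return freqs, coupling
```

In this sketch, successive calls to `digest` accumulate in the same parameters. After a few data sets the current frequencies and couplings carry the mark of all of them, but none of them is kept as a separate, recognisable entity.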

The images and the video you see on these pages are selected details of some visual traces of the metaboliser’s process: the digested data comes from recorded particle collisions at CERN, from simulations of neural networks, from genome folding interactions, and from astrophysical measurements of the matter distribution in the universe.
