Absurd (derived from surdus, or deaf, in Latin): that which cannot be heard or is contradictory to reason.

What if we applied the principles behind conspiracy theories — absurd premises followed by logical developments — to sound art?

First questions, first answers: how to document sound art?

Documenting most sound installations is a problem. Jacob Remin’s art installation Harvesting the Rare Earth, exhibited at Overgaden Institute of Contemporary Art[2] in Copenhagen, for which the music and sound parts were created in collaboration with sound artist Runar Magnusson, had all the documentation one can hope for: an article, pictures, movies, and recordings of the whole process, including a conference and the final concert for the worms. Still, none of those combined elements could give a complete impression of the work, which should still be experienced in person as it consisted of several pieces mixing naturally inside a given, rather neutral space.

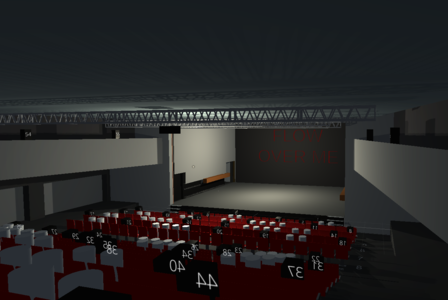

One solution could then be to reconstruct the exhibition in virtual reality, using a videogame engine. Of course, it would remove the physical presence of the worms we worked with, but at least it would connect the rooms together sound-wise and let the visitor enjoy an almost complete experience. The experiment showed realistic enough acoustic behaviour, and was therefore a success, even though the room was simplified in many ways. It pushed for further developments as a way of archiving, but also of prototyping future installations: what if we could develop projects just as well in the real world as in the virtual one, solely depending on the contingencies of the project? And could we then switch from one to the other at will?

Intermediate associations: mixing the virtual into the real

Let’s now create a virtual world anchored in the real, without the use of any glasses, headphones or trackers attached to the audience, for one person at a time in a large room. Hybrid Hall was created at The Danish National School of Performing Arts in June 2018, as visual artist Carl Emil Carlsen projected two simultaneous windows on the walls, allowing access to another world teeming with life. Perspectives would vary depending on the placement of the audience member within the room (taken from a combined set of cameras), meaning that person (and only that person) would always see things in correct alignment. Sound-wise, the same audience placement would generate a combination of movements and musical elements moving into a three-dimensional space with an equivalent perspective.

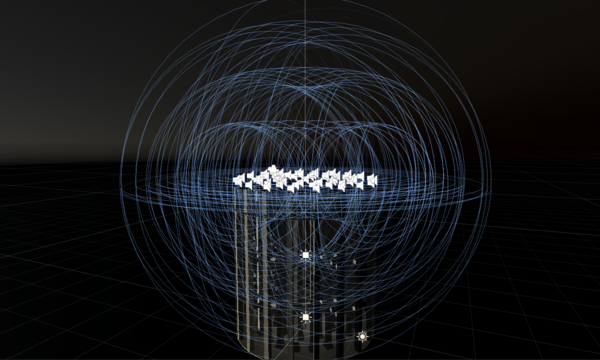

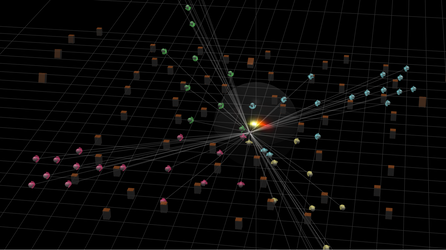

To achieve this, 16 Meyer loudspeakers were placed in a production room, allowing us to geometrically map both the floor and the ceiling while creating a virtual cube. Apart from dealing with the real-time complexity of accurate spatial representation within such a cube, the project had an extra goal: to recreate the room virtually, again using a game engine, and test possible equivalences in terms of sound. This highly technical approach brought several conclusive results within a single week: the whole operation, although not the first of its kind, did offer advanced possibilities regarding the use of virtual reality in the real world. Yet the real breakthrough came from modelling the room, which showed very convincing results by sending the same sounds on a one-to-one scale into their 16 virtual loudspeaker counterparts. Even downmixed to binaural stereo for headphone listening, virtual reality then becomes a potential replacement for the complex real world as far as sound goes, blurring the lines as we now aim at prototyping directly within the virtual world.

Problems related to the experiment: mixing the real into the virtual

In Harvesting the Rare Sounds, we are dealing with four non-conflicting pieces of sound art, which blend rather naturally as distantly located sound sources in our virtual space. This is mostly how game engines function, applying acoustic rules to virtually located sounds and playing the result at the listener’s position.

In Hybrid Hall, on the contrary, there is no located sound source: all locations are phantom, meaning our brain now estimates the position of sound objects depending on how the many speakers perform them. This is, for instance, the way surround sound works in films. The novelty here lies in the virtual engine’s surprisingly convincing performance, as the blending of 16 constantly playing virtual loudspeakers creates a realistic surround experience.
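As an illustration of how a phantom location can arise, here is a minimal sketch of constant-power panning between two loudspeakers, the textbook building block of surround mixing. It is not the software used in Hybrid Hall: the function name `constant_power_pan` and the 0-to-1 pan position are assumptions made for this example only.

```python
import math


def constant_power_pan(position):
    """Return (left_gain, right_gain) for a pan position between 0.0 and 1.0.

    0.0 places the phantom source on the left speaker, 1.0 on the right.
    The squared gains always sum to 1, so the perceived loudness stays
    roughly constant while the phantom source travels between the speakers.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must lie between 0.0 and 1.0")
    angle = position * math.pi / 2.0
    return math.cos(angle), math.sin(angle)


# Midway between the two speakers, both play the sound at the same level.
left, right = constant_power_pan(0.5)
```

No sound source is located anywhere in this sketch: only the balance between the two speakers changes, and the listener's brain does the rest. A 16-speaker set-up could extend the same idea to many pairs or triplets of speakers.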

A question arises, following our previous work with invisible choreography[3]: what if we transposed a recorded space into a virtual one, not from an anthropocentric point of view (which is typical of ambisonic recordings[4] and already widely integrated in virtual reality) but from a room’s point of view? There, the original omnidirectional microphone set-up (the ‘module’) would have to become a virtual loudspeaker set-up: again, without located sound sources, but rather virtual speakers performing the sound captured by real microphones in order to transpose the real world, its rolling marbles, and its acoustics, into the virtual one. A window on the real, all on a one-to-one scale.

What we lose: there is an inherent lack of dynamic possibilities, as one cannot bring new elements at will into such a recorded environment. The work has to be thought through beforehand, hence the name of the technique, associated with choreography. Also, our game engine will ultimately model all sounds in space from the listener’s perspective, which returns us to anthropocentrism.

One of the main principles of virtual reality was suddenly reversed. In a game environment, we would usually use many sound sources (marbles for instance), which we would place and move independently in the universe. We might also add reverberation or filtering effects when needed, in order to convey the feeling of certain circumstances (we enter a church, or we go behind a wall). The game engine would then calculate the rest. In full contrast, here the sound sources don’t exist anymore: instead, we use virtual loudspeakers recreating a 3D space in which the sound sources, but also the real acoustics, occlusion, and so on are included by design. The combination of loudspeakers simply recreates the space from as many points of view as there were microphones during the recordings, as we move into what seems to be a naturally occurring acoustic mix of the whole, each loudspeaker playing its own version of the space. One could also see such a system as an exploded version of the ambisonic microphone described earlier, one that takes space into account instead of a human point of view.

A final point: this set-up is horizontal (grounded to the floor) and therefore ignores vertical movements. But, theoretically, a full 3D space could be achieved using more microphones, speakers, and additional audio files, as the Hybrid Hall experiment already proved.

Link to the expanded field: a cognitive loss

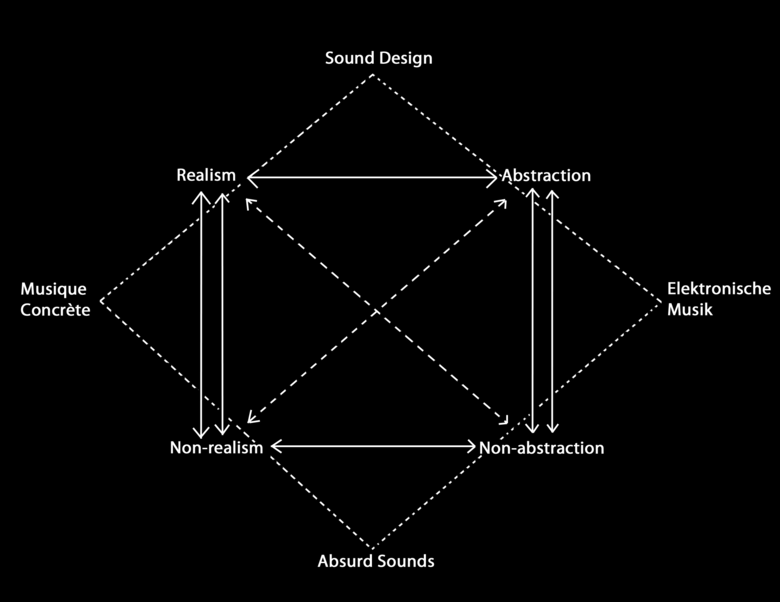

We refer to the ‘Sonic dramaturgy in the expanded field’ diagram, as described in the first two examples of this research, in order to understand what kind of manipulation we exert on our audience while creating a virtual environment.

When the physical appearance of the invisible choreography dims, as explained by Michel Chion,[5] in most cases test listeners tend to make associations with concrete stereotypes instead of letting the sound itself (or the room) convey its own message. The simple fact that the object is not there has a profound impact on its meaning. Also, the possible use of electroacoustic composition tools can make any remaining realistic sound non-realistic when needed.

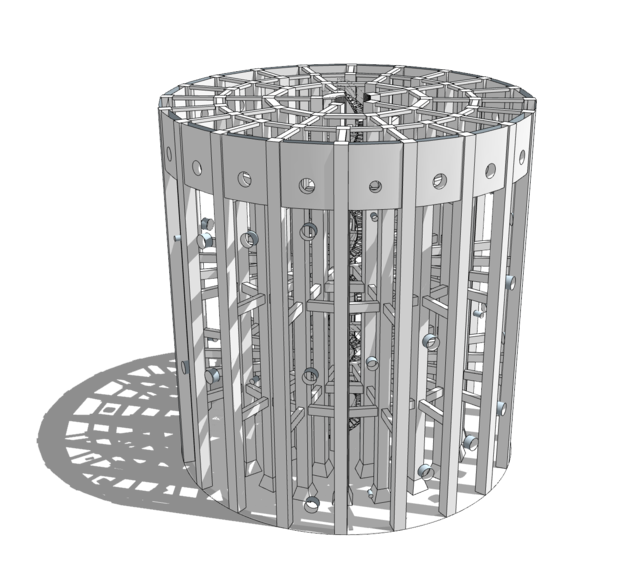

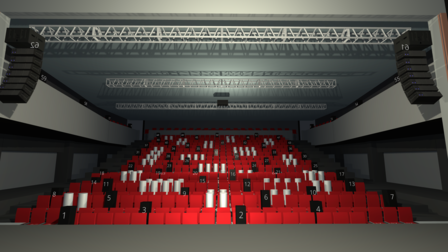

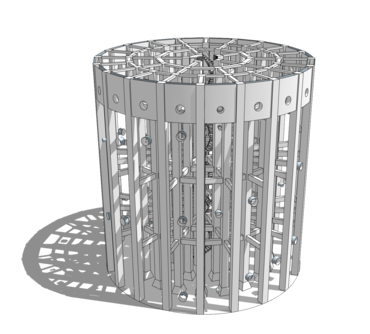

Because of these complications, and because composition for so many loudspeakers in such a large room can only be conducted in the room itself, prototyping the room while simulating its acoustics and placement in the virtual world was a rational choice. This massive work included a physical and a 3D model, as well as a calibrated spatialiser/sound card system, before finally getting to the complete virtual version. The sound flow was developed along three parallel processes: a prototype version for composition with 64 virtual loudspeakers and a pair of real headphones; a combination of software, programmed to perform live and spatially on 64 outputs and 120 real loudspeakers; and finally, real-world work in the room, calibrating each individual speaker depending on the sounds it produces from various points of view.

The task was huge, and required heavy preparation time. Still, everything ran smoothly and once the speakers were set and the technical problems solved (ground loops, defective speakers, faulty cables, and wrong configurations), it was possible to work alone in the room for nearly two full days before an audience would show up.

New questions: virtual complex acoustics

Admittedly, prototyping complex productions can be a tremendous help as far as composition and performance go. Yet we need to push further regarding acoustics, as we have mostly worked with rather neutral spaces so far. What if the same approach were tested in a far more coloured place? In March 2019, an installation, a soft fall, was created with Icelandic sound artist Runar Magnusson, again inside Brønshøj’s fantastic water tower.[7] The prototyping began early on, based on the original architectural plans, but the complex, intense, and diffuse acoustics of the tower could simply never be rendered with the rather simple systems available on the game engine market. These mostly work in square environments, treating visual obstacles such as a concrete column as something that mostly blocks the direct source of sound, and therefore largely ignoring its actual massive reflection potential.

The original prototype became dependent on acoustic capture, using convolution reverb.[8] A problem, though: the acoustics and reverberation change as we move, meaning we should crossfade between many convolution reverbs to avoid static sound and achieve realistic results. This is technologically challenging, as both the measurements and computing needs would be tremendous and the result might not meet expectations.
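To make the crossfading idea concrete, here is a minimal sketch, assuming impulse responses of equal length measured at a few known positions and blended by inverse distance to the listener. The weighting rule, the function names, and the toy data are all assumptions for illustration; nothing here describes the prototype actually built for the tower.

```python
import math


def convolve(signal, impulse_response):
    """Direct (slow) convolution of two lists of samples."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def crossfade_weights(listener, measurement_points):
    """Inverse-distance weights, normalised so that they sum to 1."""
    distances = [math.dist(listener, p) for p in measurement_points]
    for i, d in enumerate(distances):
        if d == 0.0:
            # The listener stands exactly on a measurement point.
            return [1.0 if k == i else 0.0 for k in range(len(distances))]
    raw = [1.0 / d for d in distances]
    total = sum(raw)
    return [w / total for w in raw]


def reverb_at(listener, dry, measurements):
    """Blend the reverbs measured at several positions.

    measurements: list of (position, impulse_response) pairs,
    all impulse responses having the same length.
    """
    weights = crossfade_weights(listener, [pos for pos, _ in measurements])
    wet_versions = [convolve(dry, ir) for _, ir in measurements]
    length = len(wet_versions[0])
    return [sum(w * wet[n] for w, wet in zip(weights, wet_versions))
            for n in range(length)]


# Toy data (hypothetical): two measurement points four metres apart.
measurements = [((0.0, 0.0), [1.0, 0.5]), ((4.0, 0.0), [1.0, 0.0])]
dry = [1.0, 0.0]
wet = reverb_at((1.0, 0.0), dry, measurements)
```

Even in this toy form, every measurement point costs one full convolution for each listener position, which hints at why the measurements and computing needs grow so quickly in a real space.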

For once, virtual reality seemed to fail us when facing truly complex acoustics. But such failure, in an absurd system, suggests that the chosen angle was not the right one. The virtual reality component of the installation was therefore pushed towards the final stage of the project, as can be seen in the description below.

Artistic development: a bridge between realities

The virtual model was not perfected until long after the installation opened, with the intent to be used as documentation for a soft fall, as a first step towards a sound art virtual gallery for other artists, and as a possible means to recreate the now-virtual tower somewhere else, in the real world. A series of towers might ensue, provided this one showed convincing sound quality.

In complex acoustic environments, a virtual prototype may not be sufficient for compositional purposes, yet it can still perfectly document the work at the end of such a project. The tower remains long after the installation was dismantled but, beyond this, it can even be recomposed in the real world if need be, without the tower itself.

Indeed, a specific feature of this recording technique is that it carries the room acoustics, so the resulting recordings can also be performed again in reality. This means that a set of up to 14 speakers (the more the better, but if placed correctly any number of them will reconstitute the space) will provide a similar acoustic experience to the one the tower created with us, provided, of course, that the new space has a significantly shorter reverberation time, as an augmented density would absorb the sounds produced. If these conditions are met, any audience member situated within the newly created loudspeaker set-up will experience the tower as if being inside it, even if outdoors.

Writer and director Alejandro Jodorowsky, in Le Théâtre de la Guérison,[9] speculates that he who controls his dreams, controls his reality. This is highly relevant here, except the dreams might be replaced by virtual reality. This whole research calls for going beyond the possible, for radically new processes, as we are given the opportunity to create new realities from the virtual world, and then transpose them into our own…

… creating what one might call real virtualities.

But why use virtual reality again? Once finished, the model allowed one to listen to a sound simulation of each composition from every single point of view in the room before even entering the real production. Working directly within Pro Tools and SPAT Revolution, for instance, makes it possible to hear a binaural result at any time, but moving dynamically inside the room makes a huge difference, both for the composer and, due to the ease of sharing the process, for the people working on the production, too.

Experience it for yourself and download Edition#3 — spaces as an app for Mac (803 MB) and PC (744 MB) here.

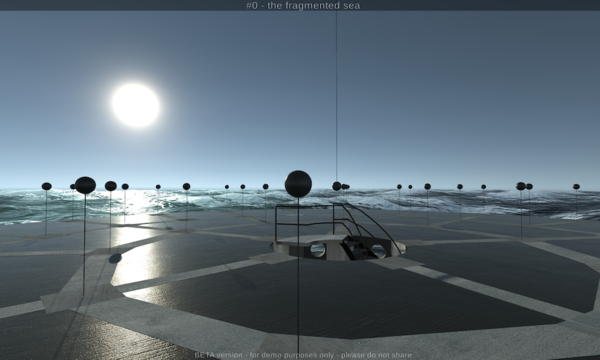

Located on the rooftop of the water tower, it involves 51 loudspeakers and performs a spectral spatialisation of a recording of the Danish West Coast sea. Each loudspeaker plays a piece of the sea’s frequency spectrum, but the sound characteristics of the speakers have been modified in the virtual space. They therefore don't allow the wandering listener to hear more than a few of them at a time, so you create your own mix depending on where you walk. Up there the sea is agitated, while it is calmer at the bottom of the tower.
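One way to picture such a spectral spatialisation is to cut the audible range into 51 bands, one per loudspeaker. The sketch below assumes logarithmically spaced bands between 20 Hz and 20 kHz. Both the spacing and the frequency limits are assumptions chosen for illustration, as the text does not say how the sea's spectrum was actually divided.

```python
def band_edges(n_bands, low_hz, high_hz):
    """Return n_bands + 1 logarithmically spaced band edges in Hz."""
    ratio = (high_hz / low_hz) ** (1.0 / n_bands)
    return [low_hz * ratio ** k for k in range(n_bands + 1)]


# Hypothetical split: 51 bands covering 20 Hz to 20 kHz.
edges = band_edges(51, 20.0, 20000.0)

# One (low, high) pair per loudspeaker: speaker 0 gets the lowest band.
bands = list(zip(edges[:-1], edges[1:]))
```

Each loudspeaker would then play the recording through a band-pass filter set to its own pair of frequencies, so that walking between speakers amounts to walking through the spectrum.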

Experience it for yourself and download Harvesting the Rare Sounds as an app for Mac (454 MB) and PC (453 MB) here.

Experience it for yourself and download Invisible Marbles as an app for Mac (65 MB) and PC (54 MB) here.

Original description of the installation, a soft fall

Brønshøj Vandtårnet, or Brønshøj Water Tower, is a special place when it comes to acoustics. With its fantastic reverberation time and its extreme differences in reaction depending on frequencies, the tower is so specific in the way it sounds that in most cases it ends up playing the musicians who attempt to play it. After exploring the depths of the water tower, Edition#, a sound art duo consisting of Runar Magnusson and Yann Coppier, attempted to reveal the space to its listeners. Together they created a non-intrusive and warm room in the massive, reverberant concrete structure, filtering out the sounds from the outside while exploring the foundations of what makes this alien yet functional piece of architecture a unique place to experience using one’s ears. A soft fall was performed on ten loudspeakers, in a place where the sound constantly plays with its own shadow, painting the walls with swirling sound while bursts of noise and percussive elements reveal the room like lightning in total darkness.

The exploration consists of three parts: an installation to lay the foundations for a new world, a concert to bring new life to it, and a virtual release to keep it alive in a parallel universe.

It is now time to prototype directly in the virtual world. For the presentation of Who’s Speaking?, an experiment conducted with 120 loudspeakers,[6] the main concert hall at the Culture Yard in Helsingør was available for seven days altogether, including a different experiment on each of the four days of presentation to an audience. Installing 120 loudspeakers and calibrating 64 outputs would therefore leave very little space for composition, if any.

Also, provided everything functioned as intended, any composition made prior to getting in the room would most likely face challenges while being transferred into such a complex system, unless it had been made following existing and well-documented traditions (acousmatic, ambisonic, wavefield synthesis), which was clearly not the point.

A final touch was added to the virtual tower: it is now surrounded by the sea, and includes two installations. The first is the extension of a soft fall into the virtual space, as described earlier, and is depicted on the floor. The second is an evolution of the moving sea, a piece created for Who’s Speaking?, using Xavier Descarpentries' spatial effect.

The recording of the sound installation was again made using the ‘invisible choreography’ system, here using 14 matched omnidirectional L.O.M. microphones. The microphones were placed one metre away from the loudspeakers, in between columns, and extra ones were used in the centre of the room and up the stairs. This means that the ‘useful room’, as defined by the circle of microphones, is smaller than the original room as defined by the loudspeakers. This is a paradox, as the microphones become the sound sources once we recreate the installation, meaning they are played back via virtual speakers that are not placed like the original speakers.

Virtual reality, or VR, is a simulated experience that can be similar to or completely different from the real world. Standard virtual reality systems often use either virtual reality headsets or multi-directional projected environments to generate realistic images, sounds, and other sensations that simulate a user's physical presence in a virtual environment. A person using virtual reality equipment is able to look around the artificial world, move around in it, and interact with virtual features or items. Virtual reality typically incorporates auditory and video feedback, but may also allow other types of sensory and force feedback through haptic technology.

Note that the term ‘virtual reality’ was first coined to describe theatre, as French avant-garde playwright Antonin Artaud pointed to the illusory nature of characters and objects in the theatre as ‘la réalité virtuelle’ in a collection of essays, Le Théâtre et son double.[1] It therefore has a strong relation to performing arts, and maybe even a strong potential as a performing art.

This time, the change seems to occur due to cognitive loss. Should the speakers be visible in the virtual space (as they mostly have been so far), then we would know where the sound comes from as we recreate the real world. But just as light does not need any visible source in a virtual space, the speakers are bound to disappear. This is no ordinary step, though: as the theory of absurd sounds proposes, any audience first needs realism and/or abstraction to initially establish a context they can relate to, before jumping into more problematic contexts.

And, indeed, the visual loss of loudspeakers brings us straight from realistic to non-realistic sounds. By removing not only the object that originally generated the sound (e.g. the marble), but even the one that was supposed to recreate it (the loudspeaker), we put the listeners in a problematic position, as they are forced to guess what such a three-dimensional performed abstraction represents.

To the left: a balloon is popped to capture the acoustics through the process of impulse response.

To the right: the acoustics are tested live through a screaming contest, which demonstrates the issues at stake in such a space.

What we gain: this strategy requires very little processing (all calculations about acoustics, placement, and such are irrelevant, as we are simply playing audio files) and the realism, if not perfect, is striking from all points of view at once. Also, more listeners in the virtual room get different points of view at little additional cost (the speakers play the same audio files in every case; it is the listener’s position in space that creates the acoustic mix).
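The principle can be sketched in a few lines, assuming a plain inverse-distance rolloff and a mono result at the listener's position. A real game engine would render binaurally and apply its own rolloff curves, so the names `speaker_gain` and `mix_at`, the one-metre reference distance, and the toy positions are illustrative assumptions only.

```python
import math


def speaker_gain(listener, speaker, reference_distance=1.0):
    """Inverse-distance gain, clamped to 1.0 inside the reference distance."""
    distance = math.dist(listener, speaker)
    return reference_distance / max(distance, reference_distance)


def mix_at(listener, speakers, frame):
    """Mix one sample per virtual speaker into one sample at the listener.

    speakers: list of (x, y) positions in metres.
    frame: one sample from each speaker's audio file, in the same order.
    """
    return sum(speaker_gain(listener, position) * sample
               for position, sample in zip(speakers, frame))


# Two virtual speakers four metres apart, playing the same sample value.
speakers = [(0.0, 0.0), (4.0, 0.0)]
frame = [1.0, 1.0]

near_first = mix_at((0.0, 0.0), speakers, frame)  # the first speaker dominates
centre = mix_at((2.0, 0.0), speakers, frame)      # both contribute equally
```

The audio files never change: a second listener simply calls `mix_at` with another position, which is why additional points of view come at little extra cost.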

In the end, the difference between reality and virtual reality seems to be a matter of definition and precision, regarding sound at least. It was indeed possible to make a virtual installation come to life in the real world, just as we had been able to prolong the existence of a real installation in the virtual world. Such a statement would require more experimentation, though, with a less rigid format than the one imposed by yet another black box, such as a concert hall.