{hhr, 11-may-2018}

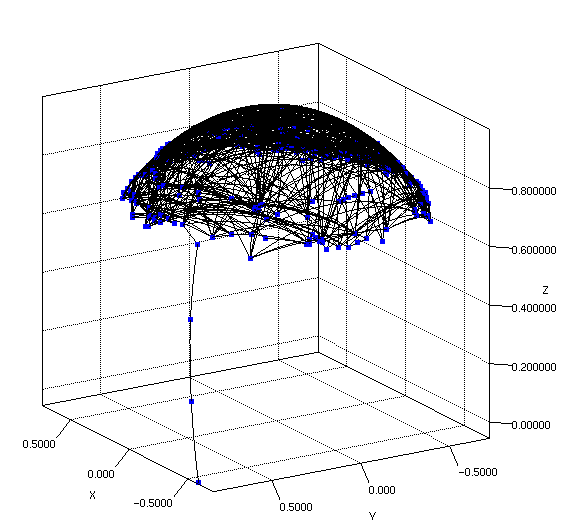

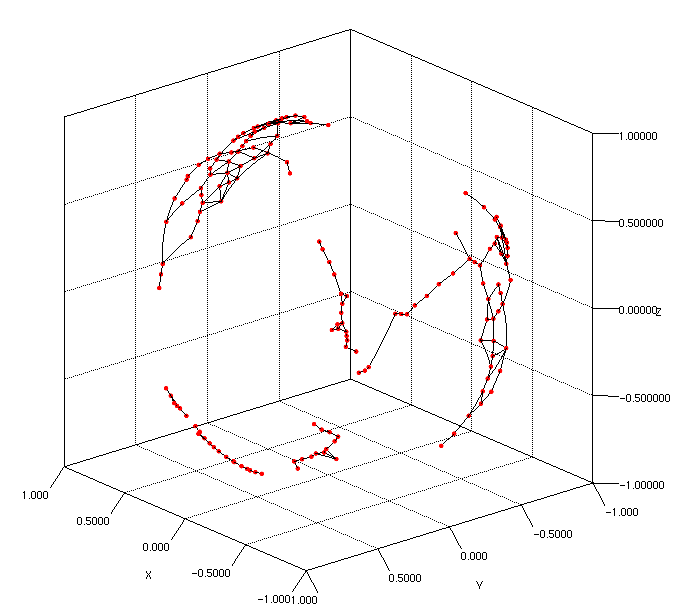

If the five sensors "emanate" from the body, could their sensing be understood as a spherical hull around the body? Could each of them then provide one fifth of a spherical space in which the GNG dwells? (Could we then reformulate the GNG in terms of spherical coordinates?)

So what is the shape of one fifth of a sphere? Can we place N points at maximum distance from one another, and then construct a Voronoi diagram around them? (And consequently, how can we project the analysis data onto this area?)

- http://people.sc.fsu.edu/~jburkardt/m_src/sphere_voronoi/sphere_voronoi.html

- http://maths-people.anu.edu.au/~leopardi/Macquarie-sphere-talk.pdf

- https://de.mathworks.com/matlabcentral/answers/323426-divide-a-sphere-surface-into-equal-ares
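As a first experiment with the point-placement question, here is a minimal sketch in Python. It uses a Fibonacci lattice, which spreads N points only approximately evenly; it is a cheap stand-in and not a solution to the true maximum-distance problem. Voronoi membership on the sphere is then decided by the largest dot product, which is equivalent to the smallest great-circle distance. The function names `fibonacci_sphere` and `voronoi_cell` are made up for this note.

```python
import math

def fibonacci_sphere(n):
    """Return n roughly evenly spread unit vectors (Fibonacci lattice)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        z = 1.0 - (2.0 * i + 1.0) / n            # z runs from near +1 to near -1
        r = math.sqrt(max(0.0, 1.0 - z * z))     # radius of the circle at height z
        phi = i * golden_angle                   # azimuth advances by the golden angle
        points.append((r * math.cos(phi), r * math.sin(phi), z))
    return points

def voronoi_cell(sites, p):
    """Index of the site nearest to unit vector p (largest dot product)."""
    def dot(k):
        return sum(a * b for a, b in zip(sites[k], p))
    return max(range(len(sites)), key=dot)

# Five sites, one per sensor (an assumption about how the fifths are assigned).
sites = fibonacci_sphere(5)
cell = voronoi_cell(sites, (0.0, 0.0, 1.0))      # which cell contains the north pole?
```

Projecting analysis data onto "its" fifth would then amount to calling `voronoi_cell` for each data point, assuming the data can be expressed as directions on the unit sphere.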

{hhr, 28-may-2018}

Got the GNG basically working with spherical coordinates. Something is still not right about the coordinates, as the nodes cling only to certain areas.

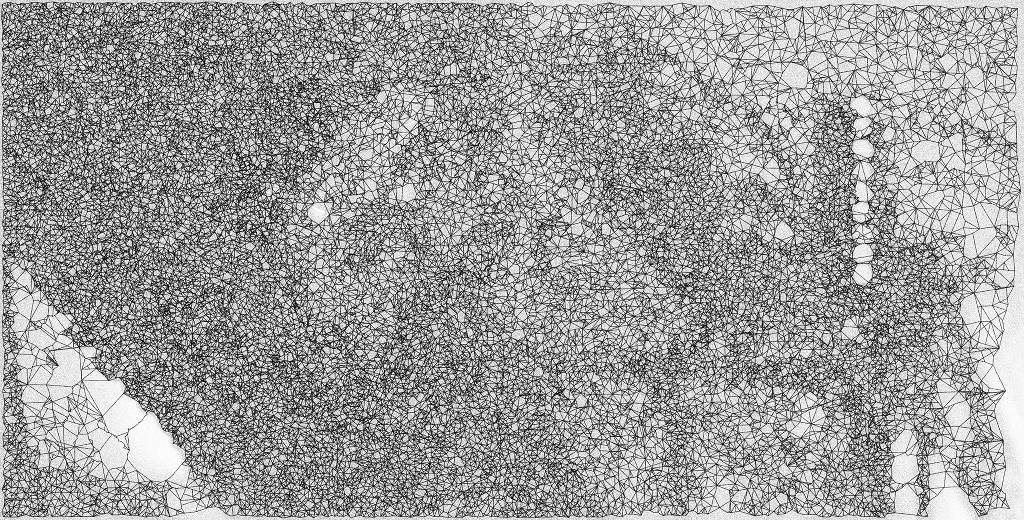
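The cause of the clinging is not known yet. One thing worth checking, purely as an assumption, is whether distances are measured on the raw angles: a Euclidean distance on (theta, phi) ignores the wrap-around of the azimuth and exaggerates distances near the poles. The sketch below compares such a naive distance with the great-circle distance. The convention (theta as polar angle from +z, phi as azimuth) and the function names are chosen for this illustration and may differ from the actual implementation.

```python
import math

def to_cartesian(theta, phi):
    """theta: polar angle from +z in [0, pi]; phi: azimuth in [0, 2*pi)."""
    s = math.sin(theta)
    return (s * math.cos(phi), s * math.sin(phi), math.cos(theta))

def great_circle(a, b):
    """Angle in radians between two (theta, phi) points on the unit sphere."""
    ax, ay, az = to_cartesian(*a)
    bx, by, bz = to_cartesian(*b)
    dot = ax * bx + ay * by + az * bz
    return math.acos(max(-1.0, min(1.0, dot)))   # clamp against rounding error

def naive_angle_distance(a, b):
    """Euclidean distance on the raw angles; shown only for comparison."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

# Two points on the equator, just either side of the azimuth seam.
a = (math.pi / 2, 0.01)
b = (math.pi / 2, 2 * math.pi - 0.01)
seam_true = great_circle(a, b)            # small: the points are neighbours
seam_naive = naive_angle_distance(a, b)   # large: the seam is not wrapped
```

If the winner search and the node update both work on unit vectors (with renormalisation after each move), neither the seam nor the poles need special treatment.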

Neural gas is a metaphor used by computer scientists (Thomas Martinetz 1991, extended by Bernd Fritzke 1995) to describe algorithms, inspired by neural networks, that perform an unsupervised process of "learning" an unknown topology by forming an adaptive network of nodes. In growing neural gas (GNG), the network expands the way a gas expands in a volume, which gives the algorithm its name.

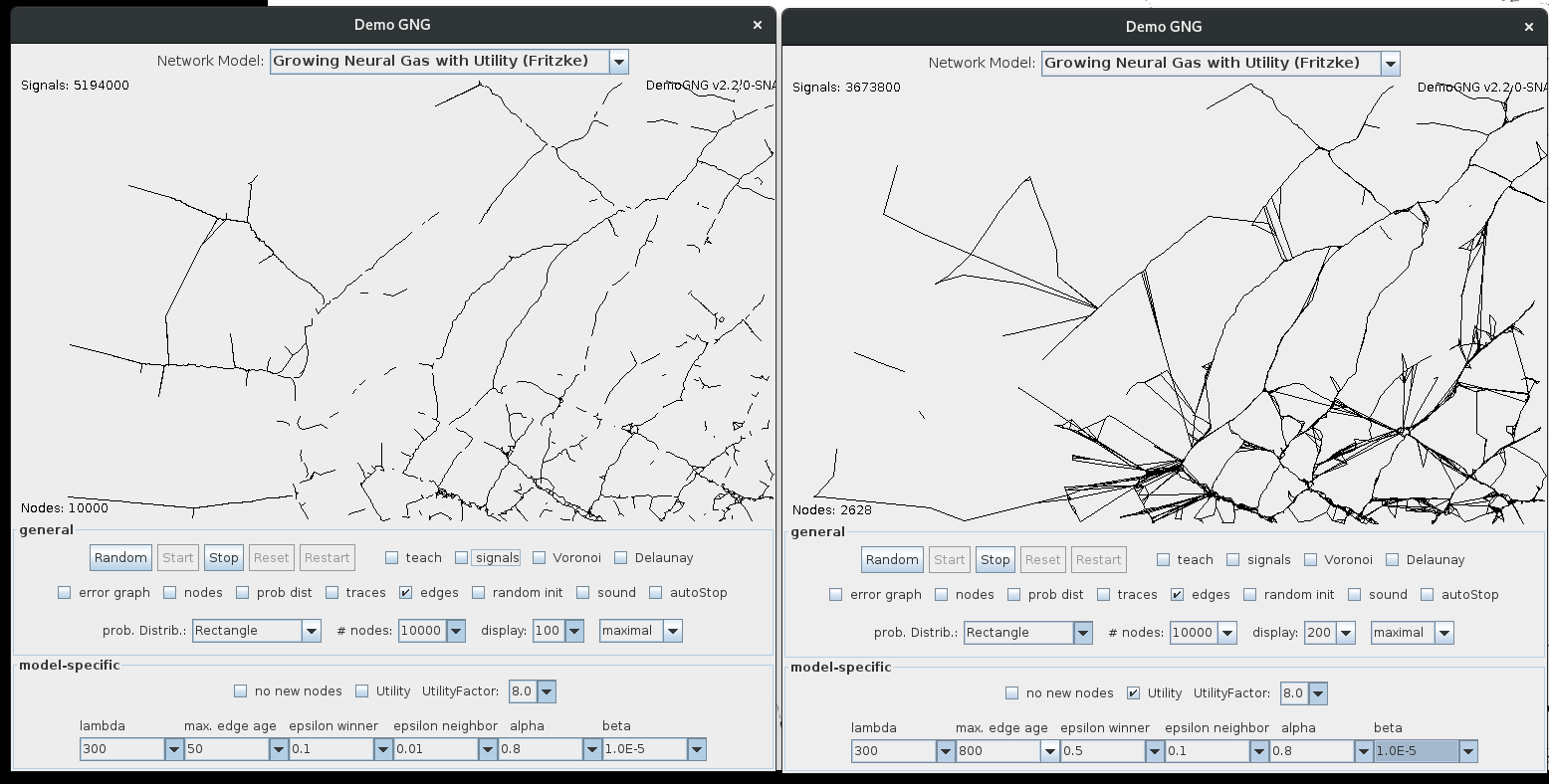

We are currently interested in exploring the aesthetic properties of this algorithm and in experimenting with modifications of it. One feature is that, under certain parametrisations, no parameter needs to be "narrowed down" over time. There is thus no predefined learning period: the process continues ad infinitum and keeps adapting to a changing environment (probability distribution), which perhaps makes it suitable as an ecological component in a sound installation context.
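To make the "no narrowing" property concrete, here is a minimal sketch of one GNG adaptation step, following the usual textbook description of Fritzke's algorithm. It is not the code used in this project. All parameter values are illustrative, and the class and attribute names are invented for this note. Note that every parameter is constant over time, so `step` can be called indefinitely.

```python
import random

class GNG:
    """Minimal growing neural gas sketch; parameter values are illustrative."""

    def __init__(self, dim=2, eps_b=0.05, eps_n=0.006, max_age=50,
                 lam=100, alpha=0.5, decay=0.995, max_nodes=100, rng=None):
        self.rng = rng or random.Random(0)
        self.eps_b, self.eps_n = eps_b, eps_n
        self.max_age, self.lam = max_age, lam
        self.alpha, self.decay, self.max_nodes = alpha, decay, max_nodes
        # Start with two units at random positions.
        self.pos = {i: [self.rng.random() for _ in range(dim)] for i in (0, 1)}
        self.err = {0: 0.0, 1: 0.0}
        self.age = {}             # frozenset({a, b}) -> edge age
        self.next_id = 2
        self.steps = 0

    def neighbours(self, n):
        return [next(iter(e - {n})) for e in self.age if n in e]

    def step(self, x):
        # Find the nearest (s1) and second-nearest (s2) unit to the input x.
        d2 = {n: sum((p - xi) ** 2 for p, xi in zip(pos, x))
              for n, pos in self.pos.items()}
        s1, s2 = sorted(d2, key=d2.get)[:2]
        # Age all edges at s1 and accumulate its squared error.
        for e in self.age:
            if s1 in e:
                self.age[e] += 1
        self.err[s1] += d2[s1]
        # Move the winner and its topological neighbours towards x.
        moves = [(s1, self.eps_b)] + [(m, self.eps_n) for m in self.neighbours(s1)]
        for n, eps in moves:
            self.pos[n] = [p + eps * (xi - p) for p, xi in zip(self.pos[n], x)]
        # Create or refresh the edge between s1 and s2.
        self.age[frozenset((s1, s2))] = 0
        # Drop edges that are too old, then units left without any edge.
        self.age = {e: a for e, a in self.age.items() if a <= self.max_age}
        connected = set().union(*self.age)
        for n in [n for n in self.pos if n not in connected]:
            del self.pos[n], self.err[n]
        # Every lam steps, insert a unit between the worst unit and its worst neighbour.
        self.steps += 1
        if self.steps % self.lam == 0 and len(self.pos) < self.max_nodes:
            q = max(self.err, key=self.err.get)
            f = max(self.neighbours(q), key=self.err.get)
            r = self.next_id
            self.next_id += 1
            self.pos[r] = [(a + b) / 2 for a, b in zip(self.pos[q], self.pos[f])]
            del self.age[frozenset((q, f))]
            self.age[frozenset((q, r))] = 0
            self.age[frozenset((r, f))] = 0
            self.err[q] *= self.alpha
            self.err[f] *= self.alpha
            self.err[r] = self.err[q]
        # Decay all accumulated errors by a constant factor.
        for n in self.err:
            self.err[n] *= self.decay

# Feed samples from a uniform distribution on the unit square.
gng = GNG()
source = random.Random(1)
for _ in range(2000):
    gng.step([source.random(), source.random()])
```

Changing the distribution that `source` draws from mid-run is the simplest way to watch the network re-adapt; the only growth limit in this sketch is `max_nodes`.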

The algorithm is also, in the 2D case, easily "perceivable" in the visual domain, connecting to ideas such as Voronoi tessellation, Delaunay triangulation, etc. One interesting question is whether we can move from there to a more abstract domain, and what happens then.