{kind: footer}

The iteration with Jonathan Reus was embedded in Algorithms that Matter (ALMAT). ALMAT is a three-year project running from 2017 to 2020, within the framework of the Austrian Science Fund (FWF) – PEEK AR 403-GBL – and funded by the Austrian National Foundation for Research, Technology and Development (FTE) and by the State of Styria. It is hosted by the Institute of Electronic Music and Acoustics (IEM) at the University of Music and Performing Arts Graz.

Almat's methodology is based on iterative reconfiguration. A configuration encompasses the core team, Hanns Holger Rutz and David Pirrò; an invited guest artist, together with a proposal within which she or he works; and a "machinery" comprising two software systems developed by Rutz (SoundProcesses) and Pirrò (rattle), possibly supplemented by further systems that the guest artist brings into the experiment. We aim to couple these systems and explore them from different perspectives. An iteration begins with an online preparation phase, in which the core team and the guest artist engage in a dialogue about their practices and their relation to Almat and specifically prepare a two-month residency, during which algorithmic sound studies are developed in situ at the IEM in Graz.

The third iteration is conducted with Dutch-American sound artist and music-technology researcher Jonathan Reus. This research catalogue entry documents our work process.

{keywords: [_, ALMAT, methodology]}

Musical background

The performative dimension of music plays an important role in Jonathan's artistic practice. He began making music seriously in Northern Florida, as part of a musical culture connected to the folk traditions of the southern Appalachian Mountains, where "music is traditionally a lived art [...], it is played in day-to-day situations and has some sort of role in daily life" [1]. Although he has spent the past decade developing an electronic art and music practice, he still carries this way of playing and thinking about music with him. He describes his approach to electronic music as follows: "My point of interest when working on new music pieces usually involves an interest in the idiosyncrasies of a technological object or technique that encounters me. It's usually about asking how do we play these things. What is the role of the objects that create these sounds in my daily life?" [1].

Computers

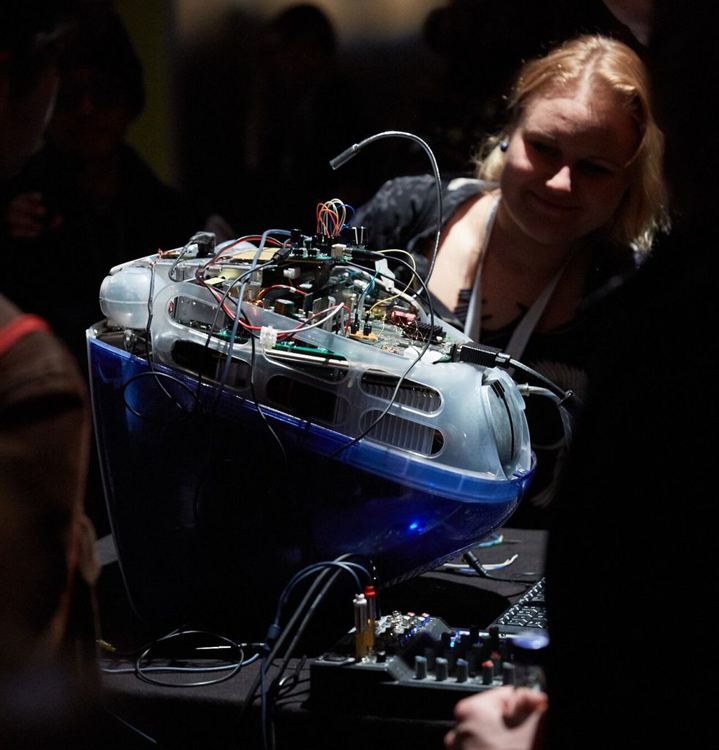

Having worked as a software engineer for a number of years, Jonathan made computing and programming languages part of his daily life, and they have become a fundamental part of his musical practice. A representative example of how he approaches these machines artistically is his series of performances with obsolete Apple computers, such as iMac Music, in which he turns old iMac G3 computers into musical instruments. This involves a long process of going into the electronics and transforming the machine into something that can be played physically. As Jonathan explains: "You can think of it a bit as a preparation you might do on a guitar or a piano, developing specific hardware and techniques to turn this kind of live circuit system into something you can perform with and talk to" [1].

Live coding

Another way Jonathan interacts with computers is live coding: he has been live coding for some time, has taught classes on it, and has organised the first Algoraves in Hamburg and Berlin. He is interested in the performative dimension of writing code on stage, but also in the affordances of live (interpreted) coding as a compositional method, a tool for experimenting with sound in real time. Describing how he approached the earthquake vests in his work The Intimate Earthquake Archive, he says: "These earthquake vests have a little embedded computer in their backpacks that has a system that was completely live coded. The development of it was live coded. I was plugged into the backpack, sending commands in real-time and feeling the difference and understanding what needed to be changed. For me this way of working is the most enjoyable, the most fruitful. And maybe this has something to do with this sort of folk music ways of thinking about creating" [1].

[1] Wordweaving, SignaleSoirée Graz, 26 November 2018

{kind: Biography, function: contextual, persons: JR}

Live coding

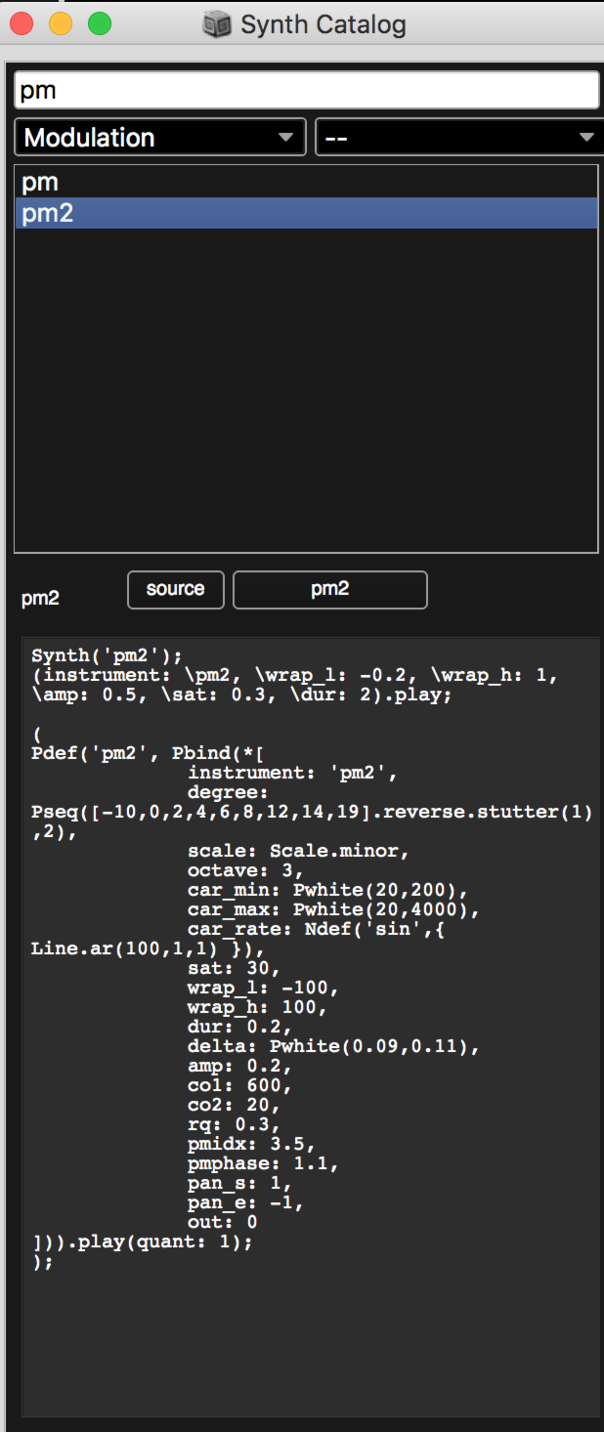

During the Almat residency, Jonathan approached live coding from different angles, addressing technical, aesthetic and poetic questions. For example, to address one drawback of live coding's ephemerality, namely that many processes get lost in the flow of writing and rewriting, he developed a SuperCollider library that collects and catalogues the synths played during live performances. These synthesis processes remain available to be reused and modified later, bridging the gap between live coding and (out-of-time) composition.
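The library itself is written in SuperCollider and its interface is not documented here. As a rough illustration of the underlying idea only, the following Python sketch keeps every version of every synth evaluated during a set instead of overwriting it. The class and method names (`SynthCatalogue`, `capture`, `versions`, `latest`) are hypothetical, and the stored source strings merely stand in for SuperCollider code.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class SynthEntry:
    """One captured synthesis process: its source code and the parameters it was played with."""
    name: str
    source: str
    params: dict
    captured_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class SynthCatalogue:
    """Hypothetical catalogue of synths evaluated during a live coding set."""

    def __init__(self):
        # Maps each synth name to the chronological list of its captured versions.
        self._entries: dict[str, list[SynthEntry]] = {}

    def capture(self, name: str, source: str, **params) -> SynthEntry:
        """Record a synth as it is played; rewrites are appended, never overwritten."""
        entry = SynthEntry(name, source, dict(params))
        self._entries.setdefault(name, []).append(entry)
        return entry

    def versions(self, name: str) -> list[SynthEntry]:
        """Return every captured version of a synth, oldest first."""
        return list(self._entries.get(name, []))

    def latest(self, name: str) -> SynthEntry | None:
        """Return the most recent version, or None if the synth was never captured."""
        history = self._entries.get(name)
        return history[-1] if history else None

    def names(self) -> list[str]:
        """Return the names of all captured synths in alphabetical order."""
        return sorted(self._entries)


# Usage: two rewrites of the same synth during a set are both kept for later composition.
catalogue = SynthCatalogue()
catalogue.capture("drone", "{ SinOsc.ar(110) * 0.1 }", freq=110)
catalogue.capture("drone", "{ Saw.ar(110) * 0.1 }", freq=110, amp=0.1)
print(len(catalogue.versions("drone")))  # 2
print(catalogue.latest("drone").source)  # { Saw.ar(110) * 0.1 }
```

Appending rather than replacing is the design choice that matters here: a later compositional pass can step back through earlier states of a synth that would otherwise have been lost in the flow of rewriting.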

Live coding and storytelling

Jonathan was also interested in incorporating storytelling into live coding performances and experimented with its performative affordances: "I really like what story telling is, as a performative practice. It's a bit like weaving. Usually there are anecdotes that are sort of woven together, and it's very improvisational" [1].

Voice and language

Working on this idea, he experimented extensively with voice and language, in particular with voice analysis. After surveying several open-source speech recognition packages, he wrote a tool based on Sphinx, with a Python frontend and a SuperCollider backend, that retrieves speech analysis features in real time. He could then feed the phonemes extracted by the recognition algorithm into SuperCollider and map them onto sound synthesis parameters, using his voice to modify sounds in real time.
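The tool's code is not reproduced here. A minimal sketch of the frontend-to-backend link, assuming that the Python side sends each recognised phoneme to SuperCollider as an OpenSound Control (OSC) message over UDP, could look like the following. The `/phoneme` address, the phoneme-to-parameter table and all function names are hypothetical; the byte layout follows the OSC 1.0 specification, and 57120 is the default port of sclang. The labels are ARPAbet-style symbols as in the CMU Pronouncing Dictionary, which English Sphinx models typically rely on.

```python
import socket
import struct

# sclang listens for OSC on UDP port 57120 by default.
SCLANG_ADDR = ("127.0.0.1", 57120)

# Hypothetical mapping from ARPAbet-style phoneme labels to synthesis parameters.
PHONEME_PARAMS = {
    "AA": {"freq": 220.0, "cutoff": 800.0},   # open vowel: low, dark
    "IY": {"freq": 440.0, "cutoff": 3000.0},  # close front vowel: high, bright
    "S": {"freq": 110.0, "cutoff": 8000.0},   # fricative: very bright
}
DEFAULT_PARAMS = {"freq": 110.0, "cutoff": 1200.0}


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Build an OSC 1.0 message with float32, int32 and string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload


def phoneme_message(phoneme: str) -> bytes:
    """Translate a recognised phoneme into a /phoneme message carrying synthesis parameters."""
    params = PHONEME_PARAMS.get(phoneme, DEFAULT_PARAMS)
    return osc_message("/phoneme", phoneme, params["freq"], params["cutoff"])


def send_phonemes(phonemes, addr=SCLANG_ADDR):
    """Send each phoneme, e.g. from a speech recogniser's output, to SuperCollider."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for phoneme in phonemes:
            sock.sendto(phoneme_message(phoneme), addr)
```

On the SuperCollider side, a responder such as `OSCdef(\phoneme, { |msg| ... }, '/phoneme')` would receive `msg` as an array whose first element is the address, so `msg[2]` and `msg[3]` would carry the frequency and cutoff to apply to a running synth.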

Speech recognition

After running into some limitations of tools such as Sphinx, Jonathan experimented with alternative approaches to speech analysis and other strategies for working with his voice. He aims to create a more complex classifier or feature detector, a tool capable of capturing specific inflections and peculiar vocal utterances by pulling speech apart into its phonetic, sub-phonetic or non-phonetic qualities.
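Jonathan's classifier is not documented here, so the following is only an illustration of what a frame-level, sub-phonetic feature detector can look like. It swaps in a deliberately simple, well-known heuristic: short-time RMS energy and zero-crossing rate, which roughly separate silence, voiced sounds (low zero-crossing rate) and noisy, fricative-like sounds (high zero-crossing rate). The function names and threshold values are hypothetical and would need tuning against real recordings.

```python
import math


def frames(signal, size, hop):
    """Yield successive overlapping frames of `size` samples, advancing by `hop`."""
    for start in range(0, len(signal) - size + 1, hop):
        yield signal[start:start + size]


def rms(frame):
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return crossings / (len(frame) - 1)


def label_frames(signal, size=512, hop=256, silence_rms=0.01, noisy_zcr=0.25):
    """Label each frame as 'silence', 'noisy' (fricative-like) or 'voiced'.

    Hypothetical thresholds: frames below `silence_rms` are silent; of the rest,
    frames whose zero-crossing rate exceeds `noisy_zcr` are treated as noisy.
    """
    labels = []
    for frame in frames(signal, size, hop):
        if rms(frame) < silence_rms:
            labels.append("silence")
        elif zero_crossing_rate(frame) > noisy_zcr:
            labels.append("noisy")
        else:
            labels.append("voiced")
    return labels


# Usage: a 200 Hz tone sampled at 16 kHz is labelled "voiced" in every frame.
sr = 16000
tone = [0.5 * math.sin(2 * math.pi * 200 * n / sr) for n in range(2048)]
print(set(label_frames(tone)))  # {'voiced'}
```

A classifier of the kind described above would replace these two hand-picked features with richer ones, but the frame-by-frame structure, which operates below the level of whole phonemes, would stay similar.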

{function: summary, persons: JR}